Investigating African-American Vernacular English in Transformer-Based Text Generation

Sophie Groenwold, Lily Ou, Aesha Parekh, Samhita Honnavalli, Sharon Levy, Diba Mirza, William Yang Wang

Computational Social Science and Social Media Short Paper

Abstract:

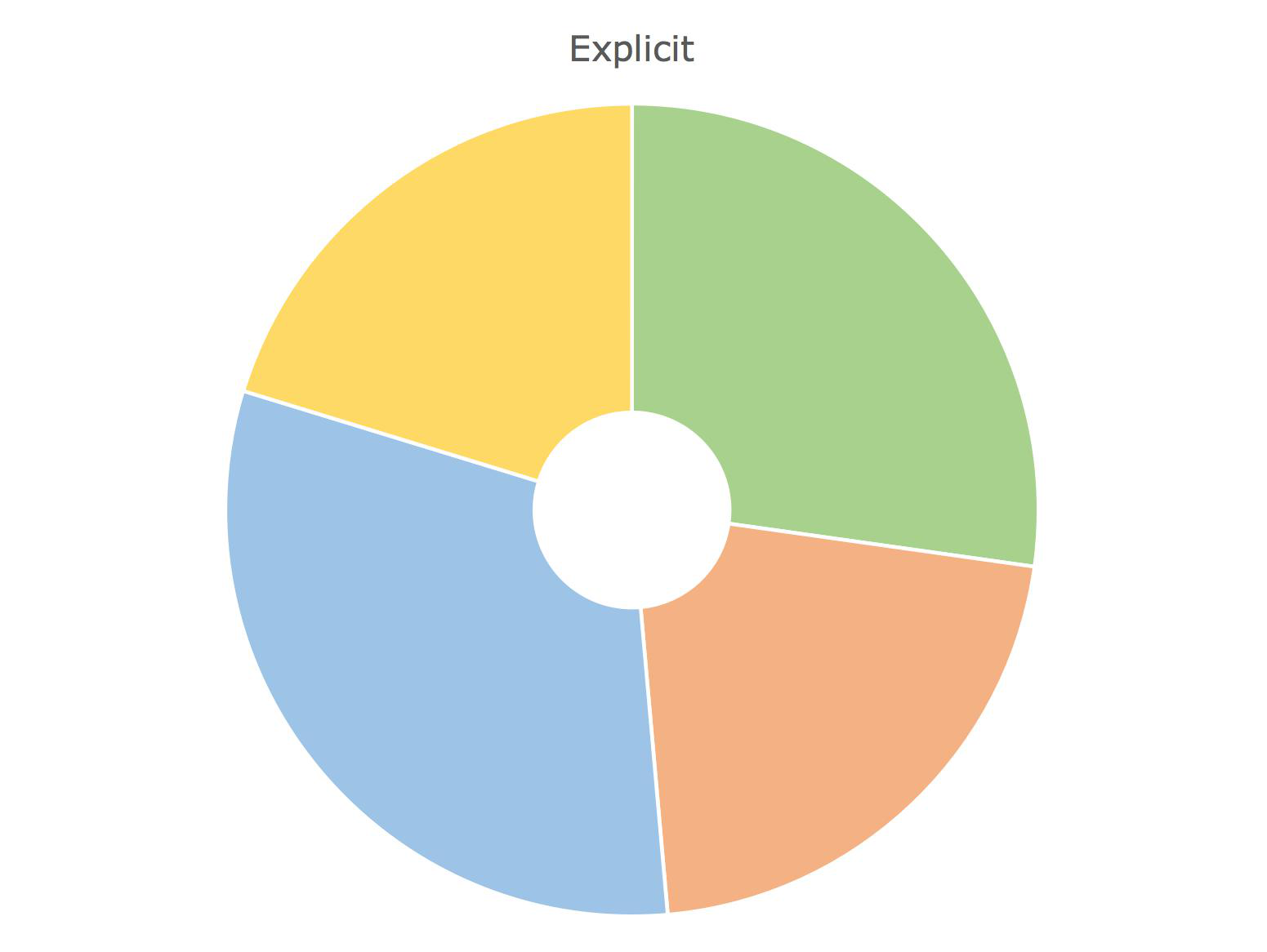

The growth of social media has encouraged the written use of African American Vernacular English (AAVE), which has traditionally been used only in oral contexts. However, NLP models have historically been developed using dominant English varieties, such as Standard American English (SAE), due to text corpora availability. We investigate the performance of GPT-2 on AAVE text by creating a dataset of intent-equivalent parallel AAVE/SAE tweet pairs, thereby isolating syntactic structure and AAVE- or SAE-specific language for each pair. We evaluate each sample and its GPT-2 generated text with pretrained sentiment classifiers and find that while AAVE text results in more classifications of negative sentiment than SAE, the use of GPT-2 generally increases occurrences of positive sentiment for both. Additionally, we conduct human evaluation of AAVE and SAE text generated with GPT-2 to compare contextual rigor and overall quality.
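The evaluation described in the abstract (classify each original sample and its generated continuation, then compare label frequencies across the two varieties) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `sentiment_shares`, `compare_pairs`, `toy_generate`, and `toy_classify` are hypothetical names, the toy functions stand in for GPT-2 and a pretrained sentiment classifier, and the placeholder strings and `[neg]`/`[pos]` markers are arbitrary and unrelated to the paper's data or results.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

LABELS = ("negative", "neutral", "positive")


def sentiment_shares(texts: List[str], classify: Callable[[str], str]) -> Dict[str, float]:
    """Return the fraction of texts assigned to each sentiment label."""
    if not texts:
        raise ValueError("texts must be non-empty")
    counts = Counter(classify(t) for t in texts)
    # Counter returns 0 for labels that never occur.
    return {label: counts[label] / len(texts) for label in LABELS}


def compare_pairs(
    pairs: List[Tuple[str, str]],
    generate: Callable[[str], str],
    classify: Callable[[str], str],
) -> Dict[str, Dict[str, float]]:
    """Compare label shares for (AAVE, SAE) pairs before and after generation."""
    aave = [a for a, _ in pairs]
    sae = [s for _, s in pairs]
    return {
        "aave_original": sentiment_shares(aave, classify),
        "sae_original": sentiment_shares(sae, classify),
        "aave_generated": sentiment_shares([generate(t) for t in aave], classify),
        "sae_generated": sentiment_shares([generate(t) for t in sae], classify),
    }


# Hypothetical stand-ins (assumptions): a real run would plug in a language
# model for `generate` and a pretrained classifier for `classify`.
def toy_generate(prompt: str) -> str:
    return prompt + " (continuation)"


def toy_classify(text: str) -> str:
    if "[neg]" in text:
        return "negative"
    if "[pos]" in text:
        return "positive"
    return "neutral"


# Placeholder pairs; the markers are arbitrary and carry no empirical meaning.
toy_pairs = [
    ("placeholder AAVE text 1 [neg]", "placeholder SAE text 1 [neg]"),
    ("placeholder AAVE text 2 [pos]", "placeholder SAE text 2 [pos]"),
]

report = compare_pairs(toy_pairs, toy_generate, toy_classify)
for name, shares in report.items():
    print(name, shares)
```

Passing the generator and classifier in as callables keeps the comparison logic independent of any particular model, so the same tally could be reused with several classifiers.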


Connected Papers in EMNLP2020

Similar Papers

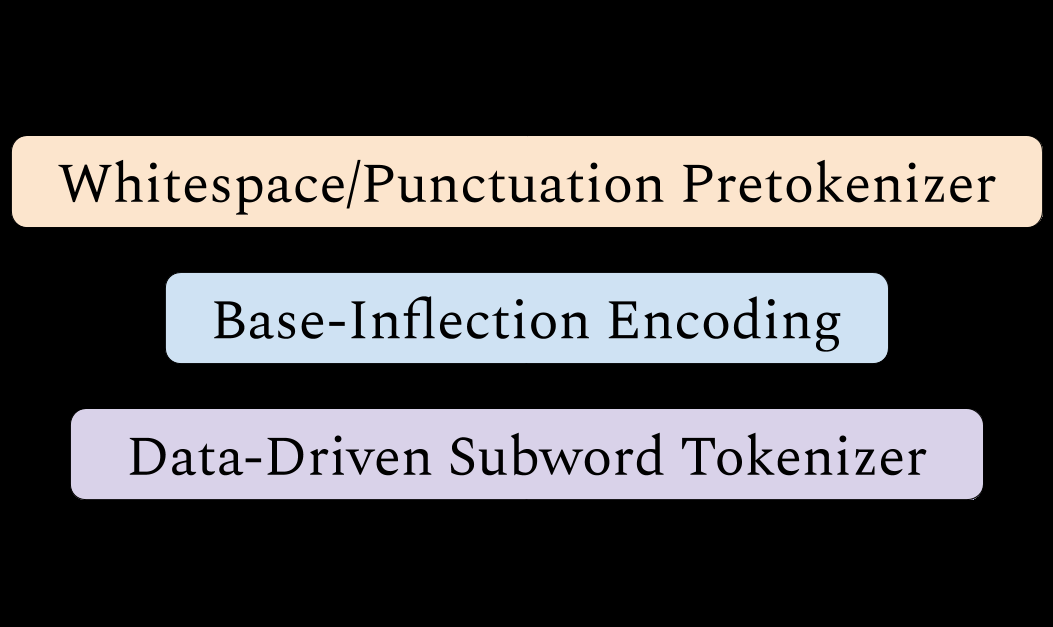

Mind Your Inflections! Improving NLP for Non-Standard Englishes with Base-Inflection Encoding

Samson Tan, Shafiq Joty, Lav Varshney, Min-Yen Kan

CMU-MOSEAS: A Multimodal Language Dataset for Spanish, Portuguese, German and French

AmirAli Bagher Zadeh, Yansheng Cao, Simon Hessner, Paul Pu Liang, Soujanya Poria, Louis-Philippe Morency

Investigating representations of verb bias in neural language models

Robert Hawkins, Takateru Yamakoshi, Thomas Griffiths, Adele Goldberg

TED-CDB: A Large-Scale Chinese Discourse Relation Dataset on TED Talks

Wanqiu Long, Bonnie Webber, Deyi Xiong