Investigating representations of verb bias in neural language models

Robert Hawkins, Takateru Yamakoshi, Thomas Griffiths, Adele Goldberg

Linguistic Theories, Cognitive Modeling and Psycholinguistics Short Paper

Abstract:

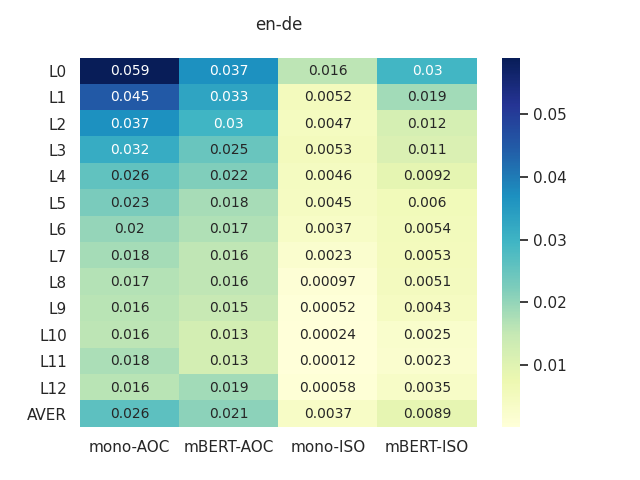

Languages typically provide more than one grammatical construction to express certain types of messages. A speaker's choice of construction is known to depend on multiple factors, including the choice of main verb, a phenomenon known as verb bias. Here we introduce DAIS, a large benchmark dataset containing 50K human judgments for 5K distinct sentence pairs in the English dative alternation. This dataset includes 200 unique verbs and systematically varies the definiteness and length of arguments. We use this dataset, as well as an existing corpus of naturally occurring data, to evaluate how well recent neural language models capture human preferences. Results show that larger models perform better than smaller models, and that transformer architectures (e.g., GPT-2) tend to outperform recurrent architectures (e.g., LSTMs) even under comparable parameter and training settings. Additional analyses of internal feature representations suggest that transformers may better integrate specific lexical information with grammatical constructions.
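To make the evaluation idea concrete, here is a minimal sketch of one way a model's preference between the two dative variants could be compared with human judgments. The abstract does not specify the scoring or agreement metric, so everything here is an assumption for illustration: the log-probability difference as a preference score, Pearson correlation as the agreement measure, the function names, and the toy numbers, which are made up and are not taken from DAIS.

```python
from math import sqrt


def preference_score(logp_do: float, logp_pd: float) -> float:
    """Log-odds favoring the double-object (DO) variant over the
    prepositional-dative (PD) variant. Positive means the model
    assigns higher probability to the DO sentence."""
    return logp_do - logp_pd


def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical toy items: (verb, log P(DO sentence), log P(PD sentence),
# human preference for DO). All values are invented placeholders.
toy_items = [
    ("gave", -20.1, -22.4, 0.8),      # e.g. "gave him the book" vs "gave the book to him"
    ("donated", -27.9, -24.3, -0.9),
    ("sent", -23.0, -23.5, 0.1),
    ("whispered", -31.2, -28.0, -0.7),
]

model_scores = [preference_score(do, pd) for _, do, pd, _ in toy_items]
human_scores = [human for _, _, _, human in toy_items]

# Agreement between model and (toy) human preferences across items.
agreement = pearson(model_scores, human_scores)
print(f"model-human correlation on toy items: {agreement:.3f}")
```

In practice the two log-probabilities would come from summing a language model's token log-probabilities over each sentence; that step is left out here so the sketch stays free of external dependencies.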
