Generating Diverse Translation from Model Distribution with Dropout

Xuanfu Wu, Yang Feng, Chenze Shao

Machine Translation and Multilinguality, Long Paper

Abstract:

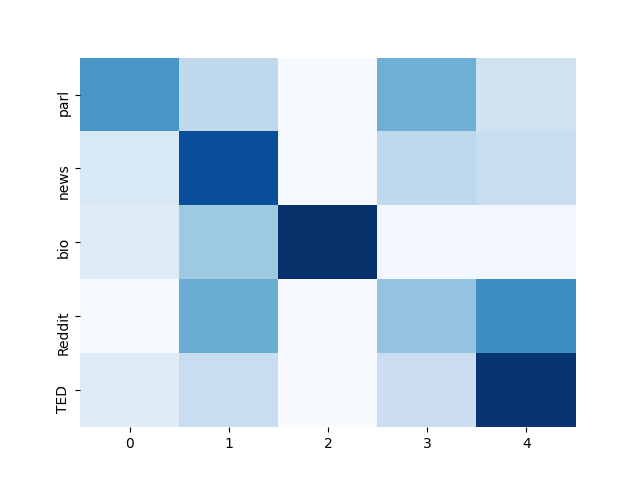

Despite improvements in translation quality, neural machine translation (NMT) often suffers from a lack of diversity in its generation. In this paper, we propose to generate diverse translations by deriving a large number of possible models with Bayesian modeling and sampling models from them for inference. The possible models are obtained by applying concrete dropout to the NMT model, and each of them has its own confidence in its predictions, which corresponds to a posterior model distribution given the training data under the principles of Bayesian modeling. With variational inference, the posterior model distribution can be approximated with a variational distribution, from which the final models for inference are sampled. We conducted experiments on Chinese-English and English-German translation tasks, and the results show that our method makes a better trade-off between diversity and accuracy.
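The core idea in the abstract, drawing several models from a dropout-induced distribution and decoding once with each, can be illustrated with a toy sketch. This is not the authors' implementation: the candidate sentences, `FEATURES`, `WEIGHTS`, and the fixed keep probabilities are all hypothetical, and a single argmax stands in for full NMT decoding. In concrete dropout the keep probabilities would be learned during training; here they are simply given as inputs.

```python
import random

# Hypothetical toy data: three candidate translations, each described by
# three made-up feature values, and a made-up weight vector.
FEATURES = {
    "the cat sat": [1.0, 0.2, 0.0],
    "a cat was sitting": [0.1, 1.0, 0.3],
    "there sat a cat": [0.0, 0.3, 1.0],
}
WEIGHTS = [1.0, 0.9, 0.8]


def sample_mask(keep_probs, rng):
    """Draw one Bernoulli mask; each mask picks out one model from the
    (assumed) variational distribution over models."""
    return [1.0 if rng.random() < p else 0.0 for p in keep_probs]


def score_candidates(features, weights, mask, keep_probs):
    """Score every candidate with the masked weights.
    Kept weights are rescaled by 1/p (inverted dropout); p must be > 0."""
    return {
        cand: sum(f * w * m / p
                  for f, w, m, p in zip(feats, weights, mask, keep_probs))
        for cand, feats in features.items()
    }


def diverse_outputs(features, weights, keep_probs, n_models, seed=0):
    """Sample n_models masks and return the best candidate under each.
    The mask is drawn once per sampled model, not once per decoding step."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_models):
        mask = sample_mask(keep_probs, rng)
        scores = score_candidates(features, weights, mask, keep_probs)
        outputs.append(max(scores, key=scores.get))
    return outputs


if __name__ == "__main__":
    # Keep probability 1.0 disables dropout: every sampled model is identical.
    print(diverse_outputs(FEATURES, WEIGHTS, [1.0, 1.0, 1.0], n_models=4))
    # Lower keep probabilities let different sampled models prefer
    # different candidates.
    print(diverse_outputs(FEATURES, WEIGHTS, [0.5, 0.5, 0.5], n_models=8))
```

With every keep probability set to 1.0, all sampled models coincide and the output collapses to a single translation. Lowering the keep probabilities widens the spread of sampled models, which is the lever behind the diversity and accuracy trade-off the abstract mentions.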

Connected Papers in EMNLP2020

Similar Papers

Distilling Multiple Domains for Neural Machine Translation

Anna Currey, Prashant Mathur, Georgiana Dinu

On the Sparsity of Neural Machine Translation Models

Yong Wang, Longyue Wang, Victor Li, Zhaopeng Tu

Pronoun-Targeted Fine-tuning for NMT with Hybrid Losses

Prathyusha Jwalapuram, Shafiq Joty, Youlin Shen

Improving Text Generation with Student-Forcing Optimal Transport

Jianqiao Li, Chunyuan Li, Guoyin Wang, Hao Fu, Yuhchen Lin, Liqun Chen, Yizhe Zhang, Chenyang Tao, Ruiyi Zhang, Wenlin Wang, Dinghan Shen, Qian Yang, Lawrence Carin