How to Make Neural Natural Language Generation as Reliable as Templates in Task-Oriented Dialogue

Henry Elder, Alexander O'Connor, Jennifer Foster

Language Generation, Short Paper

Abstract:

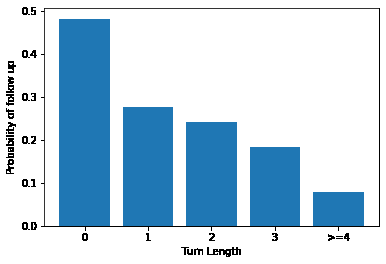

Neural Natural Language Generation (NLG) systems are well known for their unreliability. To overcome this issue, we propose a data augmentation approach that allows us to restrict the output of a network and guarantee reliability. While this restriction means generation will be less diverse than if outputs were randomly sampled, we include experiments that demonstrate the tendency of existing neural generation approaches to produce dull and repetitive text, and we argue that reliability matters more than diversity in task-oriented dialogue. The system trained using this approach scored 100% in semantic accuracy on the E2E NLG Challenge dataset, the same as a template system.
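
The abstract reports semantic accuracy without defining it. As a rough illustration only, and not the authors' evaluation script, the sketch below assumes semantic accuracy is the share of outputs that realize every slot value of their input meaning representation (MR), checked by naive case-insensitive substring matching. The MR strings follow the E2E dataset's `slot[value]` format; the functions `parse_mr`, `template_realize`, `slot_realized` and `semantic_accuracy`, and the phrasing rule for `familyFriendly`, are hypothetical. The toy template system also shows why templates reach 100% so easily: every value is copied verbatim into the text.

```python
from __future__ import annotations

import re

# Hypothetical helpers illustrating one possible notion of "semantic accuracy"
# on E2E-style meaning representations (MRs). Not the paper's evaluation code.


def parse_mr(mr: str) -> dict[str, str]:
    """Parse 'name[The Eagle], food[French]' into {'name': 'The Eagle', 'food': 'French'}."""
    return {slot.strip(): value.strip()
            for slot, value in re.findall(r"([^,\[]+)\[([^\]]*)\]", mr)}


def template_realize(mr: dict[str, str]) -> str:
    """Toy template system: copies every slot value verbatim into fixed sentence frames."""
    text = f"{mr['name']} is a {mr.get('eatType', 'venue')}"
    if "food" in mr:
        text += f" serving {mr['food']} food"
    if "priceRange" in mr:
        text += f" with a {mr['priceRange']} price range"
    if "area" in mr:
        text += f" in the {mr['area']} area"
    if "near" in mr:
        text += f" near {mr['near']}"
    if "customer rating" in mr:
        text += f". Its customer rating is {mr['customer rating']}"
    if "familyFriendly" in mr:
        text += ". It is " + ("family friendly" if mr["familyFriendly"] == "yes"
                              else "not family friendly")
    return text + "."


def slot_realized(slot: str, value: str, text: str) -> bool:
    """Naive check: the slot value appears verbatim (case-insensitive) in the output."""
    text = text.lower()
    if slot == "familyFriendly":
        # Assumed phrasing rule for the boolean slot.
        negated = "not family friendly" in text
        return negated if value == "no" else ("family friendly" in text and not negated)
    return value.lower() in text


def semantic_accuracy(mrs: list[str], outputs: list[str]) -> float:
    """Share of outputs that realize every slot value of their MR."""
    if len(mrs) != len(outputs):
        raise ValueError("need exactly one output per MR")
    correct = sum(
        all(slot_realized(s, v, out) for s, v in parse_mr(mr).items())
        for mr, out in zip(mrs, outputs)
    )
    return correct / len(mrs)


mrs = [
    "name[The Eagle], eatType[coffee shop], food[French], priceRange[moderate], "
    "area[riverside], familyFriendly[yes], near[Burger King]",
    "name[Alimentum], eatType[pub], customer rating[3 out of 5], familyFriendly[no]",
]
template_outputs = [template_realize(parse_mr(mr)) for mr in mrs]
print(semantic_accuracy(mrs, template_outputs))  # 1.0

# A fluent but incomplete output (drops priceRange, area and familyFriendly) is caught:
print(semantic_accuracy(mrs, ["The Eagle is a French coffee shop near Burger King.",
                              template_outputs[1]]))  # 0.5
```

Real slot-error checkers for E2E would typically need hand-written patterns for paraphrases (for example "kid-friendly" or "by the river") and would also flag hallucinated or repeated values, which a plain substring check like this one cannot detect.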

Venue: EMNLP 2020