Explainable Automated Fact-Checking for Public Health Claims

Neema Kotonya, Francesca Toni

NLP Applications Long Paper

Abstract:

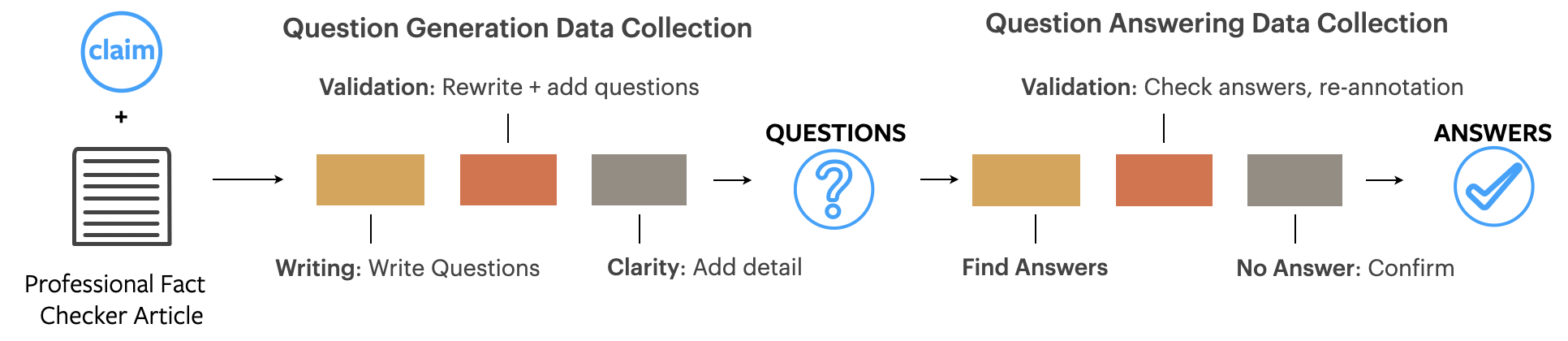

Fact-checking is the task of verifying the veracity of claims by assessing their assertions against credible evidence. The vast majority of fact-checking studies focus exclusively on political claims. Very little research explores fact-checking for other topics, specifically subject matters for which expertise is required. We present the first study of explainable fact-checking for claims which require specific expertise. For our case study we choose the setting of public health. To support this case study we construct a new dataset, PUBHEALTH, of 11.8K claims accompanied by journalist-crafted, gold-standard explanations (i.e., judgments) to support the fact-check labels for claims. We explore two tasks: veracity prediction and explanation generation. We also define and evaluate, with humans and computationally, three coherence properties of explanation quality. Our results indicate that, by training on in-domain data, gains can be made in explainable, automated fact-checking for claims which require specific expertise.

Similar Papers

Generating Fact Checking Briefs

Angela Fan, Aleksandra Piktus, Fabio Petroni, Guillaume Wenzek, Marzieh Saeidi, Andreas Vlachos, Antoine Bordes, Sebastian Riedel

Hierarchical Evidence Set Modeling for Automated Fact Extraction and Verification

Shyam Subramanian, Kyumin Lee

What Does My QA Model Know? Devising Controlled Probes using Expert

Kyle Richardson, Ashish Sabharwal

Evaluating the Factual Consistency of Abstractive Text Summarization

Wojciech Kryscinski, Bryan McCann, Caiming Xiong, Richard Socher