Data Boost: Text Data Augmentation Through Reinforcement Learning Guided Conditional Generation

Ruibo Liu, Guangxuan Xu, Chenyan Jia, Weicheng Ma, Lili Wang, Soroush Vosoughi

NLP Applications Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

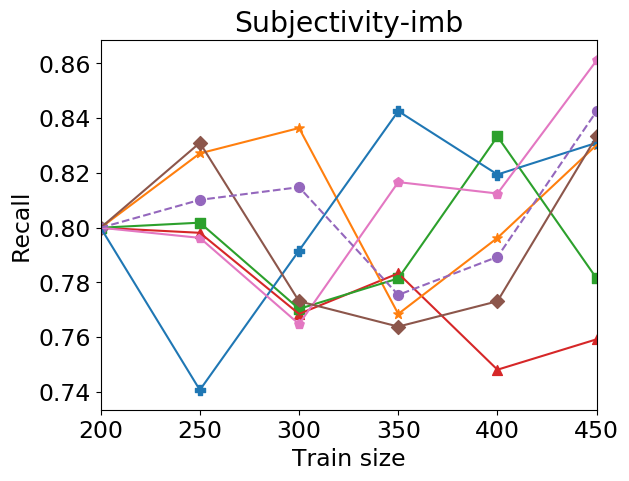

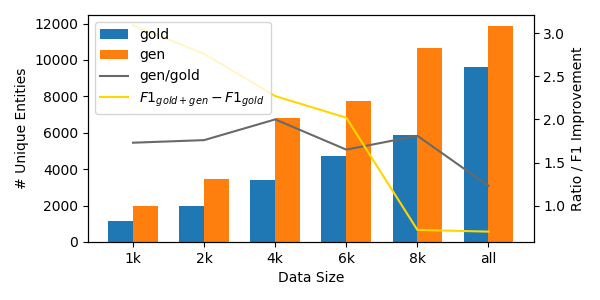

Data augmentation is proven to be effective in many NLU tasks, especially for those suffering from data scarcity. In this paper, we present a powerful and easy to deploy text augmentation framework, Data Boost, which augments data through reinforcement learning guided conditional generation. We evaluate Data Boost on three diverse text classification tasks under five different classifier architectures. The result shows that Data Boost can boost the performance of classifiers especially in low-resource data scenarios. For instance, Data Boost improves F1 for the three tasks by 8.7% on average when given only 10% of the whole data for training. We also compare Data Boost with six prior text augmentation methods. Through human evaluations (N=178), we confirm that Data Boost augmentation has comparable quality as the original data with respect to readability and class consistency.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

DAGA: Data Augmentation with a Generation Approach forLow-resource Tagging Tasks

Bosheng Ding, Linlin Liu, Lidong Bing, Canasai Kruengkrai, Thien Hai Nguyen, Shafiq Joty, Luo Si, Chunyan Miao,

New Protocols and Negative Results for Textual Entailment Data Collection

Samuel R. Bowman, Jennimaria Palomaki, Livio Baldini Soares, Emily Pitler,

Active Learning for BERT: An Empirical Study

Liat Ein-Dor, Alon Halfon, Ariel Gera, Eyal Shnarch, Lena Dankin, Leshem Choshen, Marina Danilevsky, Ranit Aharonov, Yoav Katz, Noam Slonim,