Pre-training Entity Relation Encoder with Intra-span and Inter-span Information

Yijun Wang, Changzhi Sun, Yuanbin Wu, Junchi Yan, Peng Gao, Guotong Xie

Information Extraction Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

In this paper, we integrate span-related information into pre-trained encoder for entity relation extraction task. Instead of using general-purpose sentence encoder (e.g., existing universal pre-trained models), we introduce a span encoder and a span pair encoder to the pre-training network, which makes it easier to import intra-span and inter-span information into the pre-trained model. To learn the encoders, we devise three customized pre-training objectives from different perspectives, which target on tokens, spans, and span pairs. In particular, a span encoder is trained to recover a random shuffling of tokens in a span, and a span pair encoder is trained to predict positive pairs that are from the same sentences and negative pairs that are from different sentences using contrastive loss. Experimental results show that the proposed pre-training method outperforms distantly supervised pre-training, and achieves promising performance on two entity relation extraction benchmark datasets (ACE05, SciERC).

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

MAD-X: An Adapter-Based Framework for Multi-Task Cross-Lingual Transfer

Jonas Pfeiffer, Ivan Vulić, Iryna Gurevych, Sebastian Ruder,

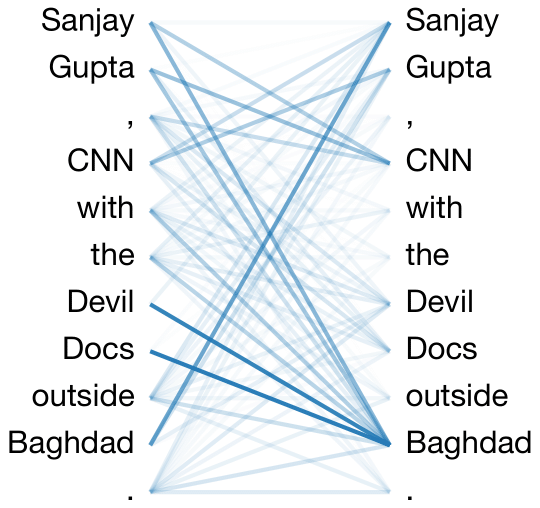

A Supervised Word Alignment Method based on Cross-Language Span Prediction using Multilingual BERT

Masaaki Nagata, Katsuki Chousa, Masaaki Nishino,