Domain Knowledge Empowered Structured Neural Net for End-to-End Event Temporal Relation Extraction

Rujun Han, Yichao Zhou, Nanyun Peng

Information Extraction Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

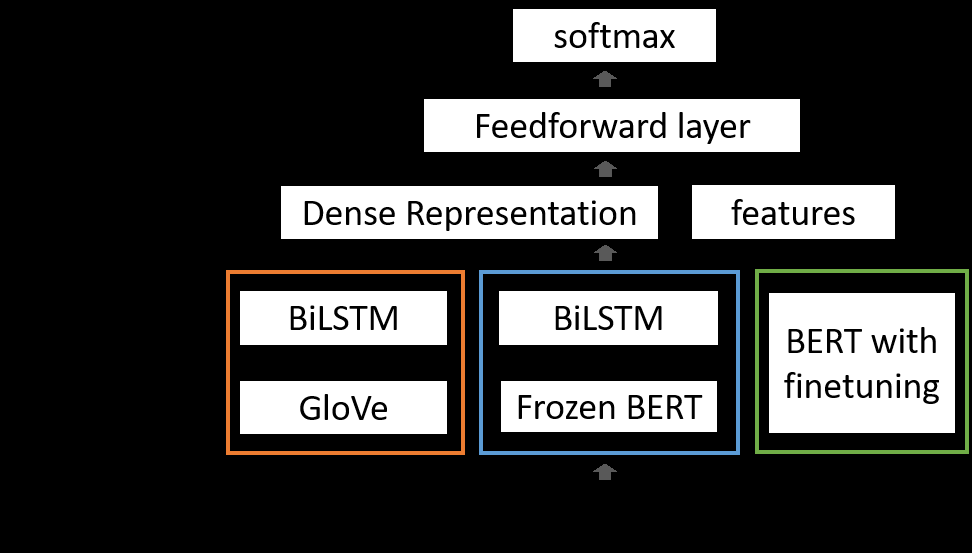

Extracting event temporal relations is a critical task for information extraction and plays an important role in natural language understanding. Prior systems leverage deep learning and pre-trained language models to improve the performance of the task. However, these systems often suffer from two shortcomings: 1) when performing maximum a posteriori (MAP) inference based on neural models, previous systems only used structured knowledge that is assumed to be absolutely correct, i.e., hard constraints; 2) biased predictions on dominant temporal relations when training with a limited amount of data. To address these issues, we propose a framework that enhances deep neural network with distributional constraints constructed by probabilistic domain knowledge. We solve the constrained inference problem via Lagrangian Relaxation and apply it to end-to-end event temporal relation extraction tasks. Experimental results show our framework is able to improve the baseline neural network models with strong statistical significance on two widely used datasets in news and clinical domains.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Learning from Context or Names? An Empirical Study on Neural Relation Extraction

Hao Peng, Tianyu Gao, Xu Han, Yankai Lin, Peng Li, Zhiyuan Liu, Maosong Sun, Jie Zhou,

Exploring Contextualized Neural Language Models for Temporal Dependency Parsing

Hayley Ross, Jonathon Cai, Bonan Min,

Low-Resource Domain Adaptation for Compositional Task-Oriented Semantic Parsing

Xilun Chen, Asish Ghoshal, Yashar Mehdad, Luke Zettlemoyer, Sonal Gupta,