Fact or Fiction: Verifying Scientific Claims

David Wadden, Shanchuan Lin, Kyle Lo, Lucy Lu Wang, Madeleine van Zuylen, Arman Cohan, Hannaneh Hajishirzi

NLP Applications Long Paper


Abstract:

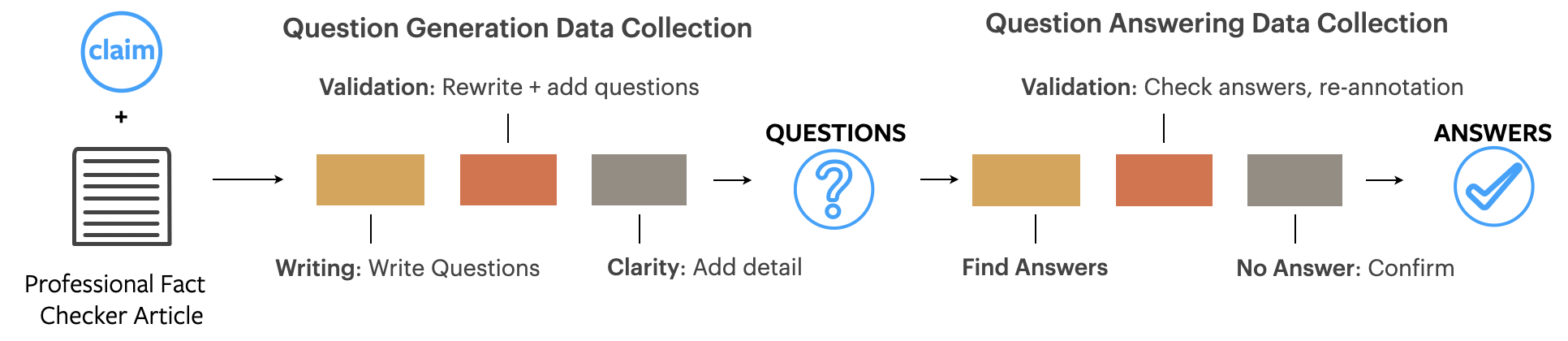

We introduce scientific claim verification, a new task to select abstracts from the research literature containing evidence that SUPPORTS or REFUTES a given scientific claim, and to identify rationales justifying each decision. To study this task, we construct SciFact, a dataset of 1.4K expert-written scientific claims paired with evidence-containing abstracts annotated with labels and rationales. We develop baseline models for SciFact, and demonstrate that simple domain adaptation techniques substantially improve performance compared to models trained on Wikipedia or political news. We show that our system is able to verify claims related to COVID-19 by identifying evidence from the CORD-19 corpus. Our experiments indicate that SciFact will provide a challenging testbed for the development of new systems designed to retrieve and reason over corpora containing specialized domain knowledge. Data and code for this new task are publicly available at https://github.com/allenai/scifact. A leaderboard and COVID-19 fact-checking demo are available at https://scifact.apps.allenai.org.
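To make the task structure concrete, the following is a minimal sketch of how a claim, its evidence abstracts, labels, and rationales might be represented, along with a toy abstract-retrieval step. The class names, field names, example texts, and the token-overlap scoring are all illustrative assumptions; they are not the SciFact data schema or the paper's baseline model.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Abstract:
    doc_id: int
    sentences: List[str]


@dataclass
class Evidence:
    label: str            # "SUPPORTS" or "REFUTES"
    rationale: List[int]  # indices of sentences that justify the label


@dataclass
class Claim:
    claim_id: int
    text: str
    # Maps an abstract's doc_id to the evidence found in that abstract.
    evidence: Dict[int, Evidence] = field(default_factory=dict)


def tokens(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(claim: Claim, corpus: List[Abstract], k: int = 1) -> List[Abstract]:
    """Rank abstracts by token overlap with the claim (a toy stand-in
    for a real retrieval model) and return the top k."""
    claim_tokens = tokens(claim.text)

    def score(abstract: Abstract) -> int:
        return len(claim_tokens & tokens(" ".join(abstract.sentences)))

    return sorted(corpus, key=score, reverse=True)[:k]


# Hypothetical example data, invented for illustration only.
corpus = [
    Abstract(2, ["Zebrafish fin regeneration depends on signaling."]),
    Abstract(1, ["We study aspirin in a randomized trial.",
                 "Aspirin lowered the risk of heart attack in adults."]),
]
claim = Claim(
    claim_id=0,
    text="Aspirin reduces the risk of heart attack.",
    evidence={1: Evidence(label="SUPPORTS", rationale=[1])},
)

top = retrieve(claim, corpus, k=1)
```

In this sketch the gold annotation for the claim points at sentence index 1 of abstract 1, and a full system would additionally need to select rationale sentences and predict the label for each retrieved abstract.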


Connected Papers in EMNLP2020

Similar Papers

Hierarchical Evidence Set Modeling for Automated Fact Extraction and Verification

Shyam Subramanian, Kyumin Lee

Generating Fact Checking Briefs

Angela Fan, Aleksandra Piktus, Fabio Petroni, Guillaume Wenzek, Marzieh Saeidi, Andreas Vlachos, Antoine Bordes, Sebastian Riedel

What Does My QA Model Know? Devising Controlled Probes using Expert

Kyle Richardson, Ashish Sabharwal

Evaluating the Factual Consistency of Abstractive Text Summarization

Wojciech Kryscinski, Bryan McCann, Caiming Xiong, Richard Socher