Controllable Meaning Representation to Text Generation: Linearization and Data Augmentation Strategies

Chris Kedzie, Kathleen McKeown

Language Generation Long Paper

Abstract:

We study the degree to which neural sequence-to-sequence models exhibit fine-grained controllability when performing natural language generation from a meaning representation. Using two task-oriented dialogue generation benchmarks, we systematically compare the effect of four input linearization strategies on controllability and faithfulness. Additionally, we evaluate how a phrase-based data augmentation method can improve performance. We find that properly aligning input sequences during training leads to highly controllable generation, both when training from scratch and when fine-tuning a larger pre-trained model. Data augmentation further improves control on difficult, randomly generated utterance plans.
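To make the idea of input linearization concrete, here is a minimal Python sketch of flattening a meaning representation into a token sequence for a sequence-to-sequence model. The abstract does not spell out the four strategies, so the two orderings below (a random order and an order aligned to a given utterance plan) are illustrative assumptions, and the `linearize` function, the `slot=value` token format, and the example slots are all hypothetical rather than the authors' implementation.

```python
import random


def linearize(dialogue_act, slots, order=None, seed=None):
    """Flatten a meaning representation into a list of input tokens.

    dialogue_act: string such as "inform" (hypothetical label).
    slots: dict mapping attribute names to values.
    order: optional list of attribute names (an "utterance plan"); when
        given, attributes are emitted in that order. When omitted, the
        attributes are shuffled using `seed`.
    """
    names = list(slots)
    if order is not None:
        # Aligned linearization: follow the plan, then append any
        # attributes the plan did not mention (sorted for determinism).
        planned = [n for n in order if n in slots]
        rest = sorted(n for n in slots if n not in order)
        names = planned + rest
    else:
        # Random linearization: the model sees no consistent order.
        random.Random(seed).shuffle(names)
    return [dialogue_act] + [f"{n}={slots[n]}" for n in names]


mr = {"name": "Aromi", "food": "Italian", "area": "riverside"}

# Order matches the order in which a reference utterance would mention
# the attributes, e.g. "An Italian place, Aromi, is by the riverside."
aligned = linearize("inform", mr, order=["food", "name", "area"])

# Same content, arbitrary order.
shuffled = linearize("inform", mr, seed=0)
```

Under this sketch, controllability would amount to asking whether the generated utterance realizes the attributes in the order given by the input sequence; training on `aligned`-style inputs is the kind of setup the abstract reports as leading to highly controllable generation.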

Connected Papers in EMNLP2020

Similar Papers

Improving Text Generation with Student-Forcing Optimal Transport

Jianqiao Li, Chunyuan Li, Guoyin Wang, Hao Fu, Yuhchen Lin, Liqun Chen, Yizhe Zhang, Chenyang Tao, Ruiyi Zhang, Wenlin Wang, Dinghan Shen, Qian Yang, Lawrence Carin

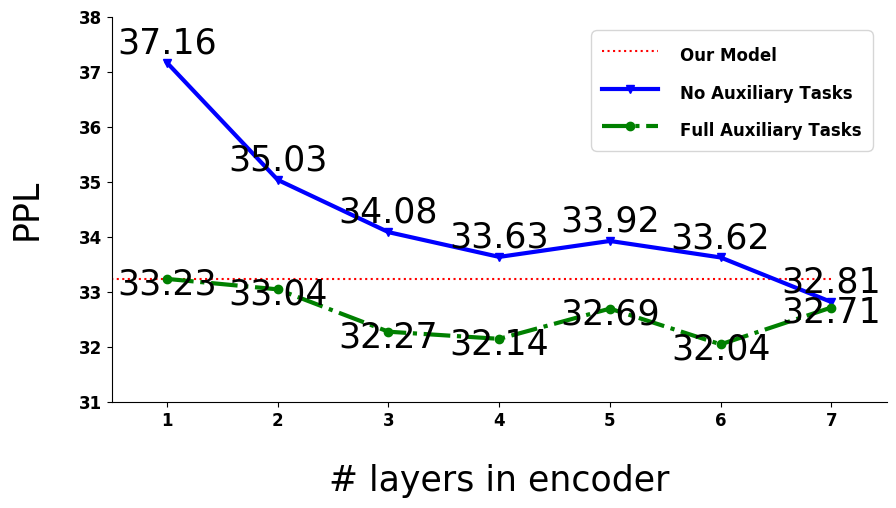

Learning a Simple and Effective Model for Multi-turn Response Generation with Auxiliary Tasks

Yufan Zhao, Can Xu, Wei Wu

Knowledge-Grounded Dialogue Generation with Pre-trained Language Models

Xueliang Zhao, Wei Wu, Can Xu, Chongyang Tao, Dongyan Zhao, Rui Yan