Neural Topic Modeling with Cycle-Consistent Adversarial Training

Xuemeng Hu, Rui Wang, Deyu Zhou, Yuxuan Xiong

Information Retrieval and Text Mining Long Paper


Abstract:

Advances in deep generative models have attracted significant research interest in neural topic modeling. The recently proposed Adversarial-neural Topic Model models topics with an adversarially trained generator network and employs a Dirichlet prior to capture the semantic patterns in latent topics. It is effective in discovering coherent topics but cannot infer topic distributions for given documents or utilize available document labels. To overcome these limitations, we propose Topic Modeling with Cycle-consistent Adversarial Training (ToMCAT) and its supervised version, sToMCAT. ToMCAT employs a generator network to interpret topics and an encoder network to infer document topics. Adversarial training and cycle-consistent constraints are used to encourage the generator and the encoder to produce realistic samples that coordinate with each other. sToMCAT extends ToMCAT by incorporating document labels into the topic modeling process to help discover more coherent topics. The effectiveness of the proposed models is evaluated on unsupervised/supervised topic modeling and text classification. The experimental results show that our models can produce both coherent and informative topics, outperforming a number of competitive baselines.
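To make the cycle-consistent constraint concrete, the following is a minimal sketch and not the authors' implementation. It assumes a single linear layer followed by a softmax for both the generator (topics to words) and the encoder (words to topics), random untrained weights, and an L1 reconstruction distance; none of these details are given in the abstract. The adversarial (discriminator) terms are omitted entirely. All names (`gen_w`, `enc_w`, `cycle_losses`) are hypothetical.

```python
import math
import random


def softmax(scores):
    """Map real-valued scores to a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, vec):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def sample_dirichlet(alpha, rng):
    """Draw a topic distribution from a Dirichlet prior via gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def l1(a, b):
    """L1 distance between two vectors of equal length."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cycle_losses(gen_w, enc_w, theta, doc):
    """Return (topic-cycle loss, document-cycle loss).

    Topic cycle:    theta -> G(theta) -> E(G(theta)) should recover theta.
    Document cycle: doc   -> E(doc)   -> G(E(doc))   should recover doc.
    """
    fake_doc = softmax(linear(gen_w, theta))   # generator: topics -> words
    inferred = softmax(linear(enc_w, doc))     # encoder: words -> topics
    topic_cycle = l1(softmax(linear(enc_w, fake_doc)), theta)
    doc_cycle = l1(softmax(linear(gen_w, inferred)), doc)
    return topic_cycle, doc_cycle


rng = random.Random(0)
K, V = 3, 6  # assumed toy sizes: 3 topics, 6 vocabulary words
gen_w = [[rng.gauss(0, 1) for _ in range(K)] for _ in range(V)]  # V x K
enc_w = [[rng.gauss(0, 1) for _ in range(V)] for _ in range(K)]  # K x V

theta = sample_dirichlet([0.5] * K, rng)      # topic distribution from the prior
counts = [3, 0, 1, 0, 2, 4]                   # toy bag-of-words counts
doc = [c / sum(counts) for c in counts]       # normalized word distribution

topic_cycle, doc_cycle = cycle_losses(gen_w, enc_w, theta, doc)
print(topic_cycle, doc_cycle)
```

In a full training loop these two terms would be minimized together with adversarial losses so that the generator and encoder become approximate inverses of each other; here they are only evaluated once on random weights to show the data flow.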


Connected Papers in EMNLP2020

Similar Papers

A Neural Generative Model for Joint Learning Topics and Topic-Specific Word Embeddings

Lixing Zhu, Deyu Zhou, Yulan He

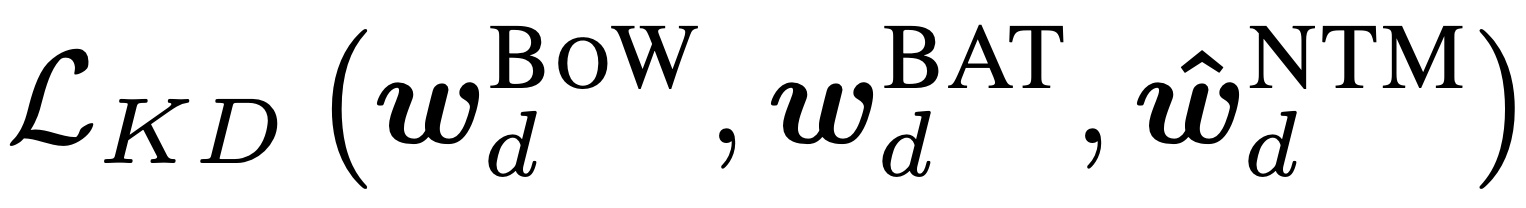

Improving Neural Topic Models using Knowledge Distillation

Alexander Miserlis Hoyle, Pranav Goel, Philip Resnik