Analyzing Individual Neurons in Pre-trained Language Models

Nadir Durrani, Hassan Sajjad, Fahim Dalvi, Yonatan Belinkov

Interpretability and Analysis of Models for NLP Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

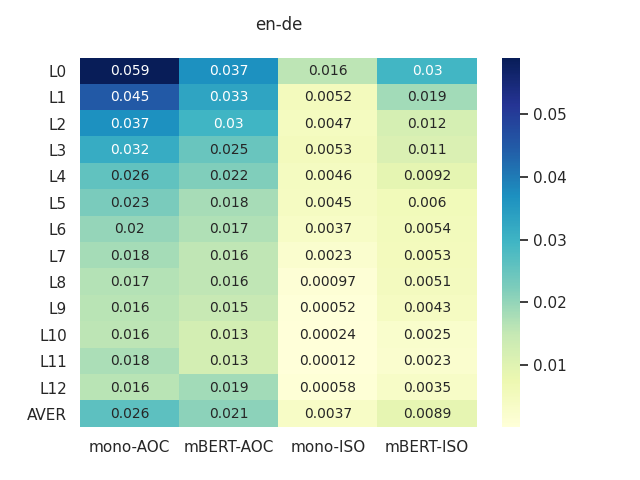

While a lot of analysis has been carried to demonstrate linguistic knowledge captured by the representations learned within deep NLP models, very little attention has been paid towards individual neurons.We carry outa neuron-level analysis using core linguistic tasks of predicting morphology, syntax and semantics, on pre-trained language models, with questions like: i) do individual neurons in pre-trained models capture linguistic information? ii) which parts of the network learn more about certain linguistic phenomena? iii) how distributed or focused is the information? and iv) how do various architectures differ in learning these properties? We found small subsets of neurons to predict linguistic tasks, with lower level tasks (such as morphology) localized in fewer neurons, compared to higher level task of predicting syntax. Our study also reveals interesting cross architectural comparisons. For example, we found neurons in XLNet to be more localized and disjoint when predicting properties compared to BERT and others, where they are more distributed and coupled.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Structural Supervision Improves Few-Shot Learning and Syntactic Generalization in Neural Language Models

Ethan Wilcox, Peng Qian, Richard Futrell, Ryosuke Kohita, Roger Levy, Miguel Ballesteros,

Probing Pretrained Language Models for Lexical Semantics

Ivan Vulić, Edoardo Maria Ponti, Robert Litschko, Goran Glavaš, Anna Korhonen,

On the Ability and Limitations of Transformers to Recognize Formal Languages

Satwik Bhattamishra, Kabir Ahuja, Navin Goyal,

Learning Which Features Matter: RoBERTa Acquires a Preference for Linguistic Generalizations (Eventually)

Alex Warstadt, Yian Zhang, Xiaocheng Li, Haokun Liu, Samuel R. Bowman,