To BERT or Not to BERT: Comparing Task-specific and Task-agnostic Semi-Supervised Approaches for Sequence Tagging

Kasturi Bhattacharjee, Miguel Ballesteros, Rishita Anubhai, Smaranda Muresan, Jie Ma, Faisal Ladhak, Yaser Al-Onaizan

Interpretability and Analysis of Models for NLP (Short Paper)

Abstract:

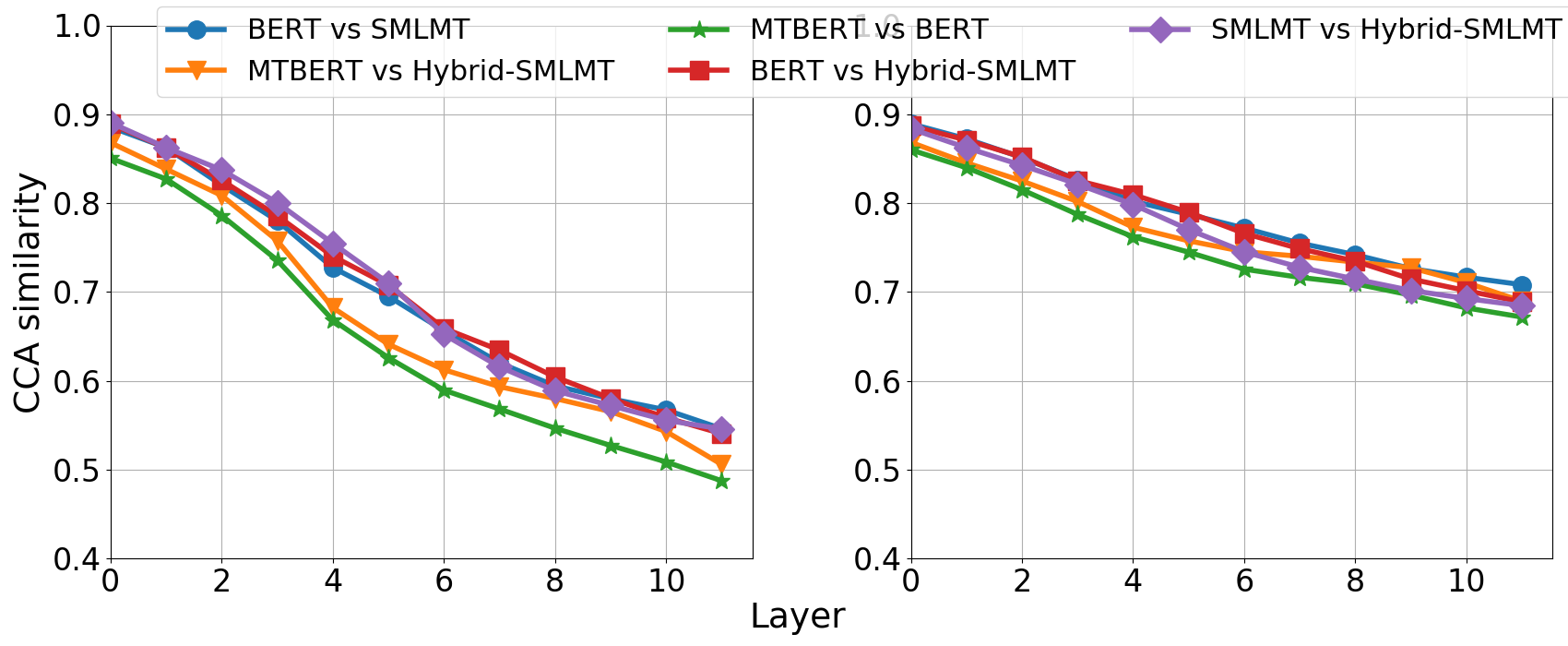

Leveraging large amounts of unlabeled data with Transformer-like architectures such as BERT has recently gained popularity, owing to their effectiveness in learning general representations that can then be fine-tuned for downstream tasks with considerable success. However, training these models can be costly from both an economic and an environmental standpoint. In this work, we investigate how to use unlabeled data effectively by exploring a task-specific semi-supervised approach, Cross-View Training (CVT), and comparing it with task-agnostic BERT in multiple settings that include domain- and task-relevant English data. CVT uses a much lighter model architecture, and we show that it achieves performance similar to BERT's on a set of sequence tagging tasks, with lower financial and environmental impact.

Similar Papers

Cold-start Active Learning through Self-supervised Language Modeling

Michelle Yuan, Hsuan-Tien Lin, Jordan Boyd-Graber

Multi-Stage Pre-training for Low-Resource Domain Adaptation

Rong Zhang, Revanth Gangi Reddy, Md Arafat Sultan, Vittorio Castelli, Anthony Ferritto, Radu Florian, Efsun Sarioglu Kayi, Salim Roukos, Avi Sil, Todd Ward

Self-Supervised Meta-Learning for Few-Shot Natural Language Classification Tasks

Trapit Bansal, Rishikesh Jha, Tsendsuren Munkhdalai, Andrew McCallum

BERT-EMD: Many-to-Many Layer Mapping for BERT Compression with Earth Mover's Distance

Jianquan Li, Xiaokang Liu, Honghong Zhao, Ruifeng Xu, Min Yang, Yaohong Jin