MedDialog: Large-scale Medical Dialogue Datasets

Guangtao Zeng, Wenmian Yang, Zeqian Ju, Yue Yang, Sicheng Wang, Ruisi Zhang, Meng Zhou, Jiaqi Zeng, Xiangyu Dong, Ruoyu Zhang, Hongchao Fang, Penghui Zhu, Shu Chen, Pengtao Xie

Dialog and Interactive Systems Long Paper

Abstract:

Medical dialogue systems are a promising way to support telemedicine: they can increase access to healthcare services, improve the quality of patient care, and reduce medical costs. To facilitate research and development of such systems, we build MedDialog, a pair of large-scale medical dialogue datasets comprising 1) a Chinese dataset with 3.4 million conversations between patients and doctors, 11.3 million utterances, and 660.2 million tokens, covering 172 disease specialties, and 2) an English dataset with 0.26 million conversations, 0.51 million utterances, and 44.53 million tokens, covering 96 disease specialties. To the best of our knowledge, MedDialog is the largest medical dialogue dataset to date. We pretrain several dialogue generation models on the Chinese MedDialog dataset, including Transformer, GPT, and BERT-GPT, and compare their performance. The results show that models trained on MedDialog can generate clinically correct and doctor-like medical dialogues. We also study how well models trained on MedDialog transfer to low-resource medical dialogue generation tasks. Fine-tuning models pretrained on MedDialog greatly improves performance on medical dialogue generation tasks with small datasets, in both human and automatic evaluation. The datasets and code are available at https://github.com/UCSD-AI4H/Medical-Dialogue-System
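As a rough sense of scale, the headline counts in the abstract can be turned into per-conversation and per-utterance averages. The sketch below is only back-of-the-envelope arithmetic on the rounded figures quoted above; the names `STATS` and `averages` are illustrative and do not come from the MedDialog code, and the results should not be read as statistics reported by the authors.

```python
# Figures as quoted in the abstract, in millions (rounded by the authors).
STATS = {
    "Chinese": {"conversations": 3.4, "utterances": 11.3, "tokens": 660.2},
    "English": {"conversations": 0.26, "utterances": 0.51, "tokens": 44.53},
}


def averages(stats):
    """Return (utterances per conversation, tokens per utterance)."""
    utterances_per_conversation = stats["utterances"] / stats["conversations"]
    tokens_per_utterance = stats["tokens"] / stats["utterances"]
    return utterances_per_conversation, tokens_per_utterance


for name, stats in STATS.items():
    utt_per_conv, tok_per_utt = averages(stats)
    print(
        f"{name}: {utt_per_conv:.1f} utterances/conversation, "
        f"{tok_per_utt:.1f} tokens/utterance"
    )
```

On these rounded inputs, the Chinese dataset works out to roughly 3.3 utterances per conversation and the English dataset to roughly 2, which suggests that English conversations are mostly a single patient description followed by a single doctor reply.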

Similar Papers

RiSAWOZ: A Large-Scale Multi-Domain Wizard-of-Oz Dataset with Rich Semantic Annotations for Task-Oriented Dialogue Modeling

Jun Quan, Shian Zhang, Qian Cao, Zizhong Li, Deyi Xiong

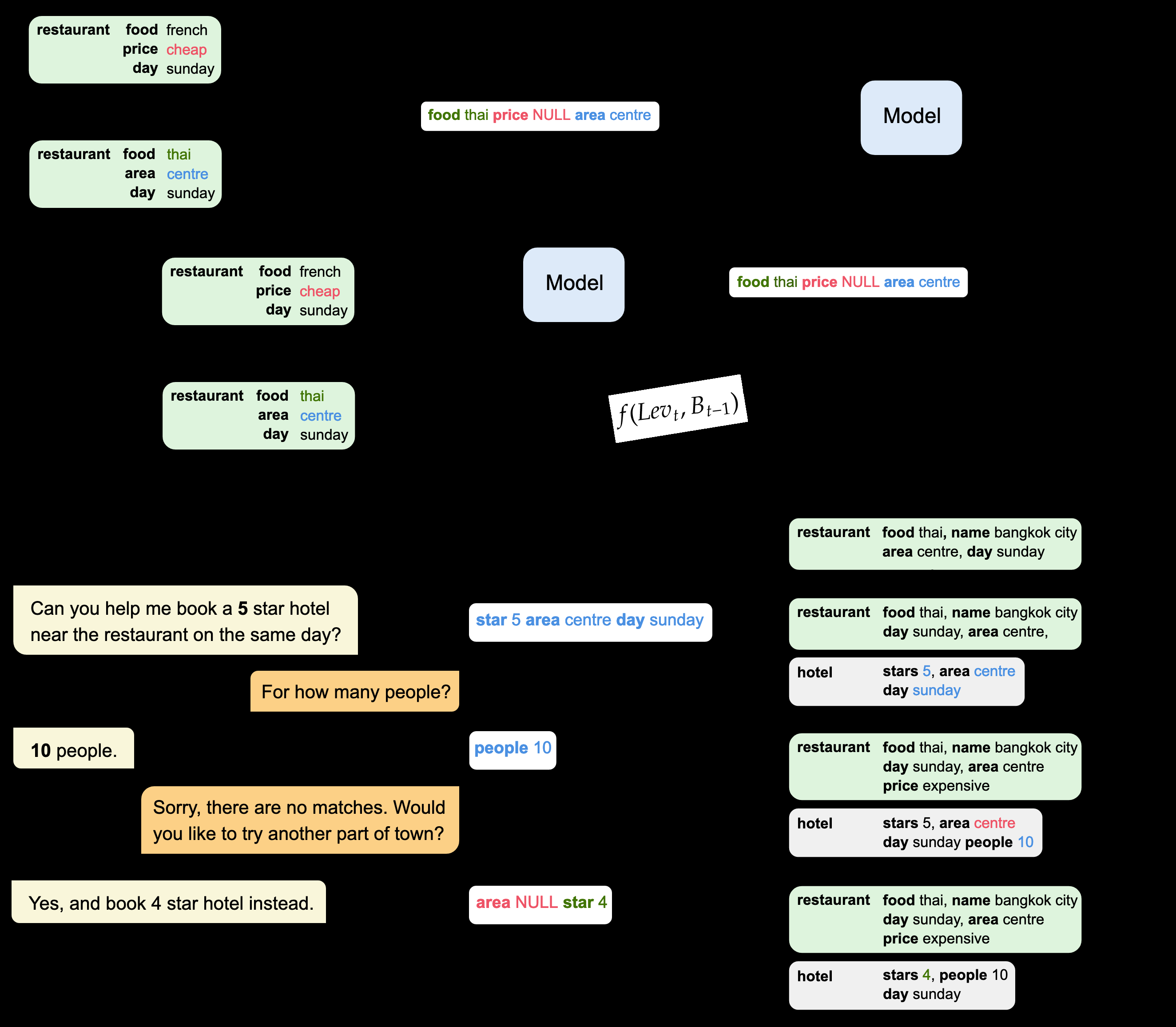

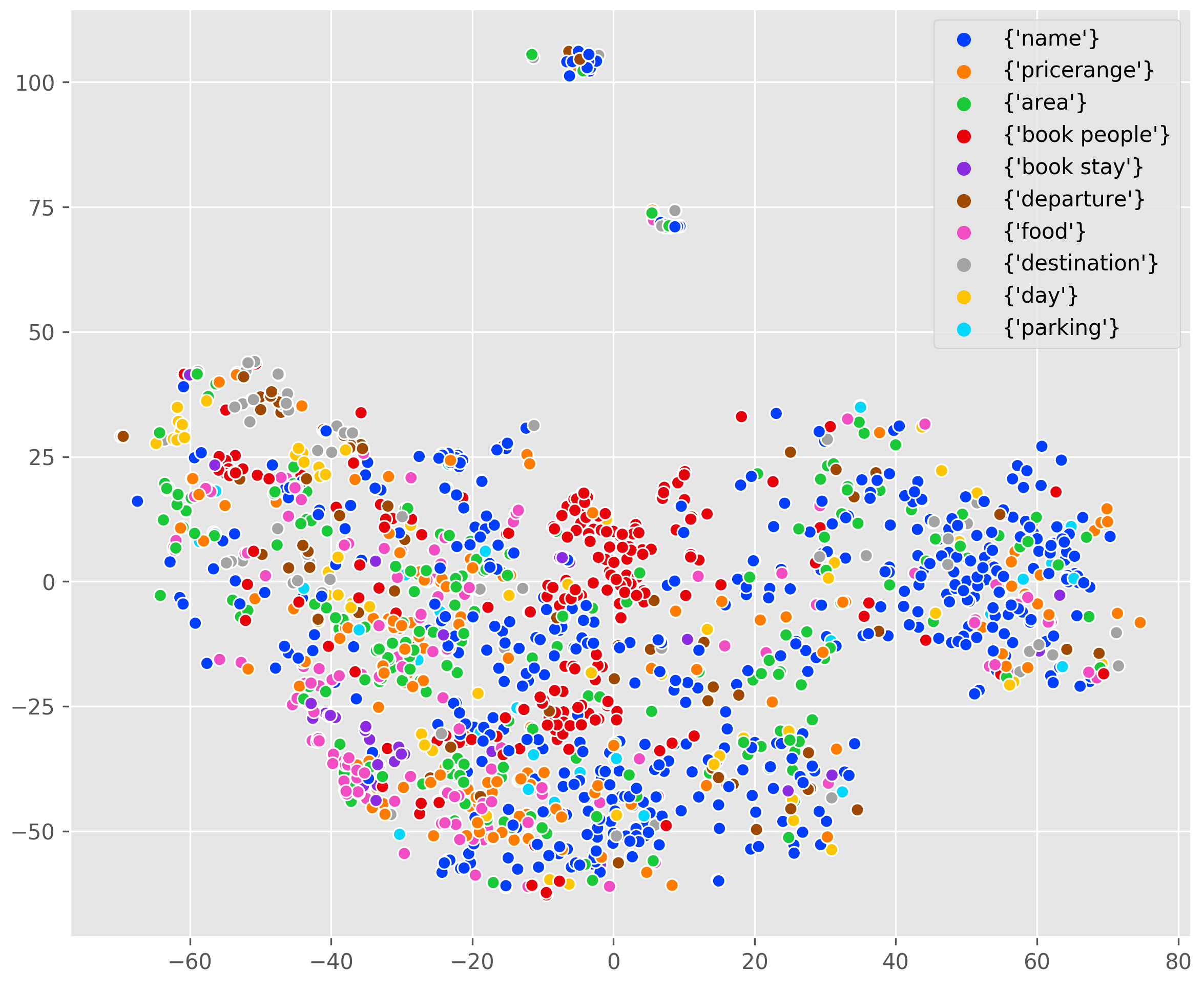

MinTL: Minimalist Transfer Learning for Task-Oriented Dialogue Systems

Zhaojiang Lin, Andrea Madotto, Genta Indra Winata, Pascale Fung

TOD-BERT: Pre-trained Natural Language Understanding for Task-Oriented Dialogue

Chien-Sheng Wu, Steven C.H. Hoi, Richard Socher, Caiming Xiong

Cross Copy Network for Dialogue Generation

Changzhen Ji, Xin Zhou, Yating Zhang, Xiaozhong Liu, Changlong Sun, Conghui Zhu, Tiejun Zhao