CAT-Gen: Improving Robustness in NLP Models via Controlled Adversarial Text Generation

Tianlu Wang, Xuezhi Wang, Yao Qin, Ben Packer, Kang Li, Jilin Chen, Alex Beutel, Ed Chi

Language Generation Short Paper


Abstract:

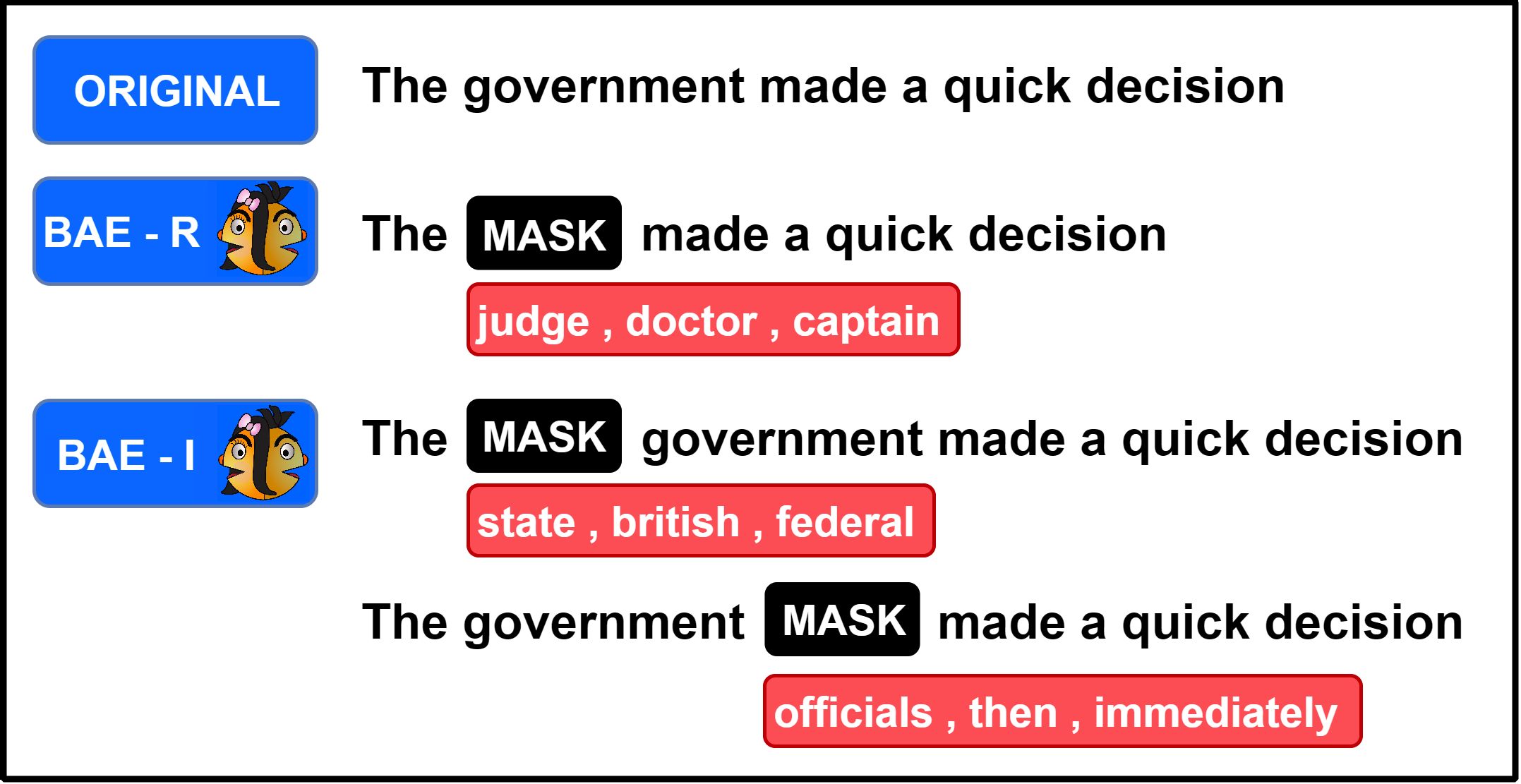

NLP models have been shown to suffer from robustness issues: a model's prediction can easily be changed by small perturbations to the input. In this work, we present a Controlled Adversarial Text Generation (CAT-Gen) model that, given an input text, generates adversarial texts through controllable attributes that are known to be invariant to task labels. For example, to attack a sentiment classification model over product reviews, we can use the product category as the controllable attribute, since changing it does not change the sentiment of a review. Experiments on real-world NLP datasets demonstrate that our method generates more diverse and fluent adversarial texts than many existing adversarial text generation approaches. We further use the generated adversarial examples to improve models through adversarial training, and we demonstrate that the generated attacks are more robust against model re-training and different model architectures.
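The attack criterion described in the abstract can be illustrated with a toy sketch. This is not the CAT-Gen model, which is a learned text generator. The sketch swaps product-category words using a hand-written lookup table and keeps the variants that flip a classifier's prediction while the true sentiment label stays fixed. Every name here (`CATEGORY_TERMS`, `swap_category`, `find_adversarial`, `brittle_classifier`) is hypothetical and chosen for illustration only.

```python
from typing import Callable, Dict, List

# Hypothetical category vocabularies (illustrative only, not from the paper).
CATEGORY_TERMS: Dict[str, List[str]] = {
    "phone": ["phone", "battery", "screen"],
    "kitchen": ["blender", "blade", "jar"],
}


def swap_category(text: str, source: str, target: str) -> str:
    """Replace each source-category word with the target-category word at the same index."""
    mapping = dict(zip(CATEGORY_TERMS[source], CATEGORY_TERMS[target]))
    return " ".join(mapping.get(token, token) for token in text.split())


def find_adversarial(
    text: str,
    label: str,
    source: str,
    classifier: Callable[[str], str],
) -> List[str]:
    """Return category-swapped variants whose predicted label differs from the true label.

    The category is assumed to be invariant to the sentiment label, so any
    prediction change on a swapped variant counts as a successful attack.
    """
    attacks = []
    for target in CATEGORY_TERMS:
        if target == source:
            continue
        candidate = swap_category(text, source, target)
        if classifier(candidate) != label:
            attacks.append(candidate)
    return attacks


def brittle_classifier(text: str) -> str:
    """Toy sentiment model with a spurious link between 'blender' and negative sentiment."""
    tokens = text.split()
    if "blender" in tokens:
        return "negative"
    return "positive" if "great" in tokens else "negative"


review = "great phone with a great screen"
attacks = find_adversarial(review, "positive", "phone", brittle_classifier)
print(attacks)
```

In an adversarial training setup, variants found this way would be added back to the training set with their original label, which is the general idea the abstract refers to when it mentions improving models with the generated examples.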


Connected Papers in EMNLP2020

Similar Papers

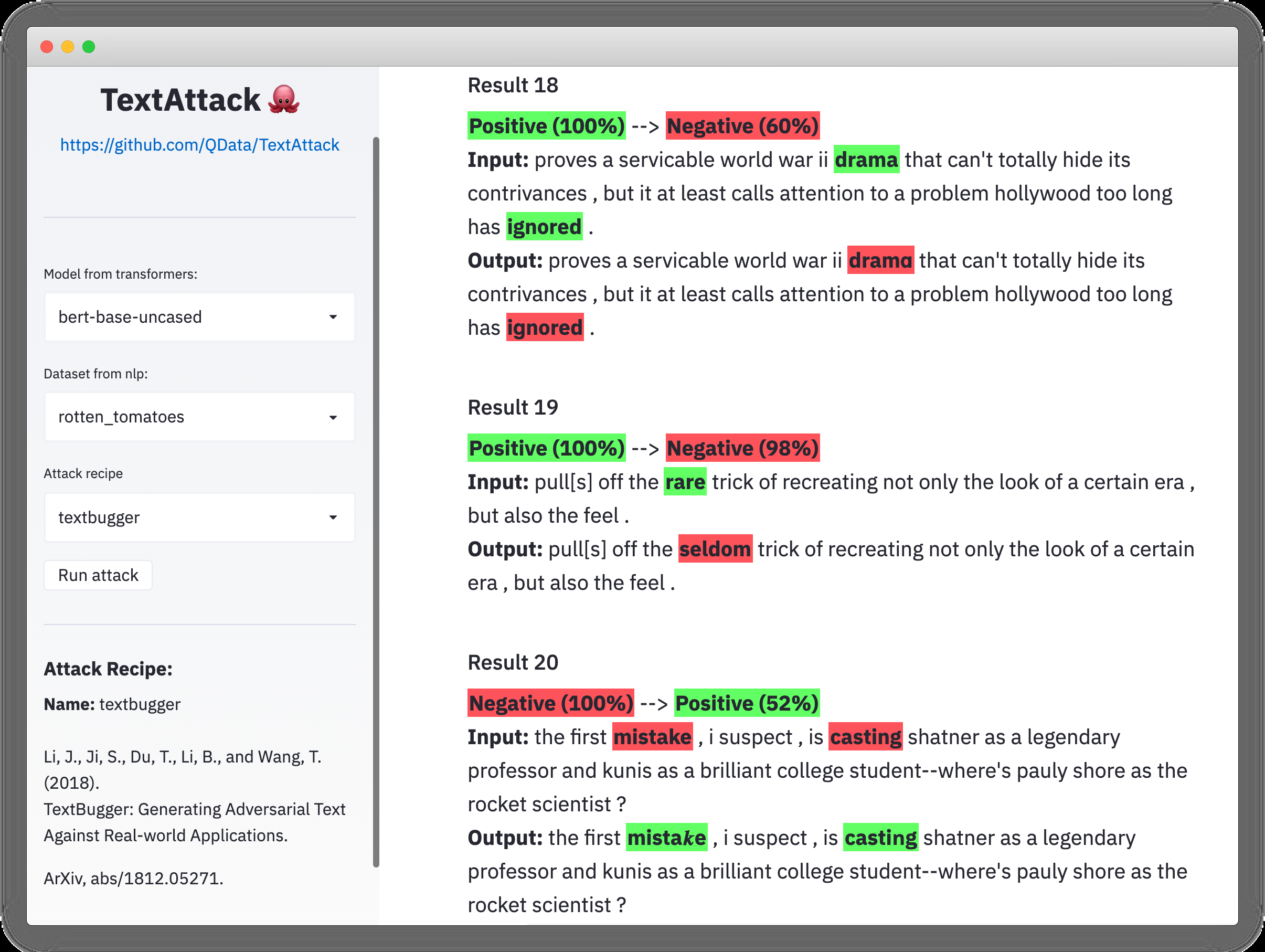

TextAttack: A Framework for Adversarial Attacks, Data Augmentation, and Adversarial Training in NLP

John Morris, Eli Lifland, Jin Yong Yoo, Jake Grigsby, Di Jin, Yanjun Qi

Adversarial Attack and Defense of Structured Prediction Models

Wenjuan Han, Liwen Zhang, Yong Jiang, Kewei Tu

Adversarial Self-Supervised Data-Free Distillation for Text Classification

Xinyin Ma, Yongliang Shen, Gongfan Fang, Chen Chen, Chenghao Jia, Weiming Lu