Adversarial Attack and Defense of Structured Prediction Models

Wenjuan Han, Liwen Zhang, Yong Jiang, Kewei Tu

Syntax: Tagging, Chunking, and Parsing Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Building an effective adversarial attacker and elaborating on countermeasures for adversarial attacks for natural language processing (NLP) have attracted a lot of research in recent years. However, most of the existing approaches focus on classification problems. In this paper, we investigate attacks and defenses for structured prediction tasks in NLP. Besides the difficulty of perturbing discrete words and the sentence fluency problem faced by attackers in any NLP tasks, there is a specific challenge to attackers of structured prediction models: the structured output of structured prediction models is sensitive to small perturbations in the input. To address these problems, we propose a novel and unified framework that learns to attack a structured prediction model using a sequence-to-sequence model with feedbacks from multiple reference models of the same structured prediction task. Based on the proposed attack, we further reinforce the victim model with adversarial training, making its prediction more robust and accurate. We evaluate the proposed framework in dependency parsing and part-of-speech tagging. Automatic and human evaluations show that our proposed framework succeeds in both attacking state-of-the-art structured prediction models and boosting them with adversarial training.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

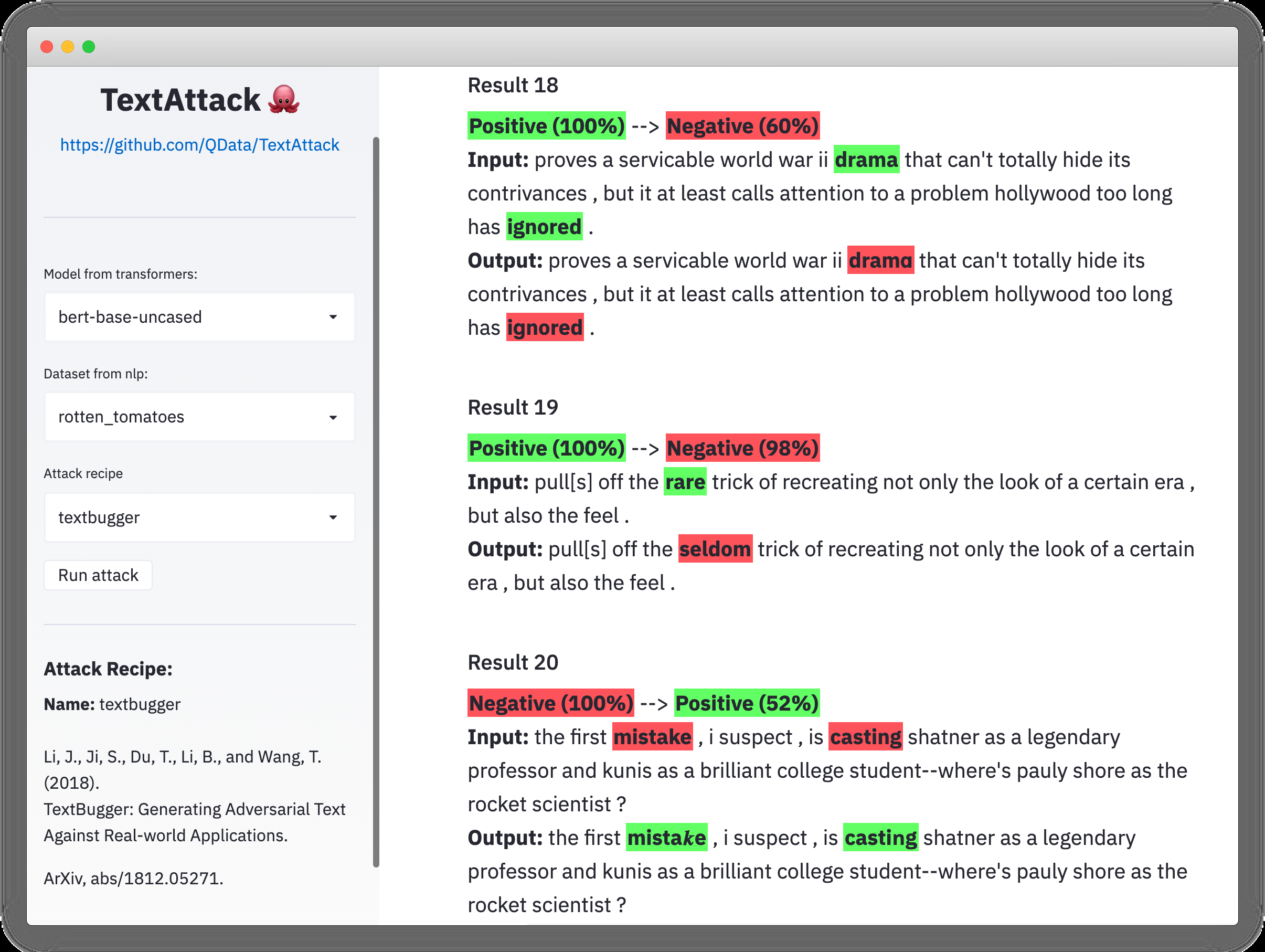

TextAttack: A Framework for Adversarial Attacks, Data Augmentation, and Adversarial Training in NLP

John Morris, Eli Lifland, Jin Yong Yoo, Jake Grigsby, Di Jin, Yanjun Qi,

CAT-Gen: Improving Robustness in NLP Models via Controlled Adversarial Text Generation

Tianlu Wang, Xuezhi Wang, Yao Qin, Ben Packer, Kang Li, Jilin Chen, Alex Beutel, Ed Chi,

BERT-ATTACK: Adversarial Attack Against BERT Using BERT

Linyang Li, Ruotian Ma, Qipeng Guo, Xiangyang Xue, Xipeng Qiu,

Generating Label Cohesive and Well-Formed Adversarial Claims

Pepa Atanasova, Dustin Wright, Isabelle Augenstein,