DORB: Dynamically Optimizing Multiple Rewards with Bandits

Ramakanth Pasunuru, Han Guo, Mohit Bansal

Machine Learning for NLP Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Policy gradients-based reinforcement learning has proven to be a promising approach for directly optimizing non-differentiable evaluation metrics for language generation tasks. However, optimizing for a specific metric reward leads to improvements in mostly that metric only, suggesting that the model is gaming the formulation of that metric in a particular way without often achieving real qualitative improvements. Hence, it is more beneficial to make the model optimize multiple diverse metric rewards jointly. While appealing, this is challenging because one needs to manually decide the importance and scaling weights of these metric rewards. Further, it is important to consider using a dynamic combination and curriculum of metric rewards that flexibly changes over time. Considering the above aspects, in our work, we automate the optimization of multiple metric rewards simultaneously via a multi-armed bandit approach (DORB), where at each round, the bandit chooses which metric reward to optimize next, based on expected arm gains. We use the Exp3 algorithm for bandits and formulate two approaches for bandit rewards: (1) Single Multi-reward Bandit (SM-Bandit); (2) Hierarchical Multi-reward Bandit (HM-Bandit). We empirically show the effectiveness of our approaches via various automatic metrics and human evaluation on two important NLG tasks: question generation and data-to-text generation. Finally, we present interpretable analyses of the learned bandit curriculum over the optimized rewards.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Ensemble Distillation for Structured Prediction: Calibrated, Accurate, Fast—Choose Three

Steven Reich, David Mueller, Nicholas Andrews,

Multi-document Summarization with Maximal Marginal Relevance-guided Reinforcement Learning

Yuning Mao, Yanru Qu, Yiqing Xie, Xiang Ren, Jiawei Han,

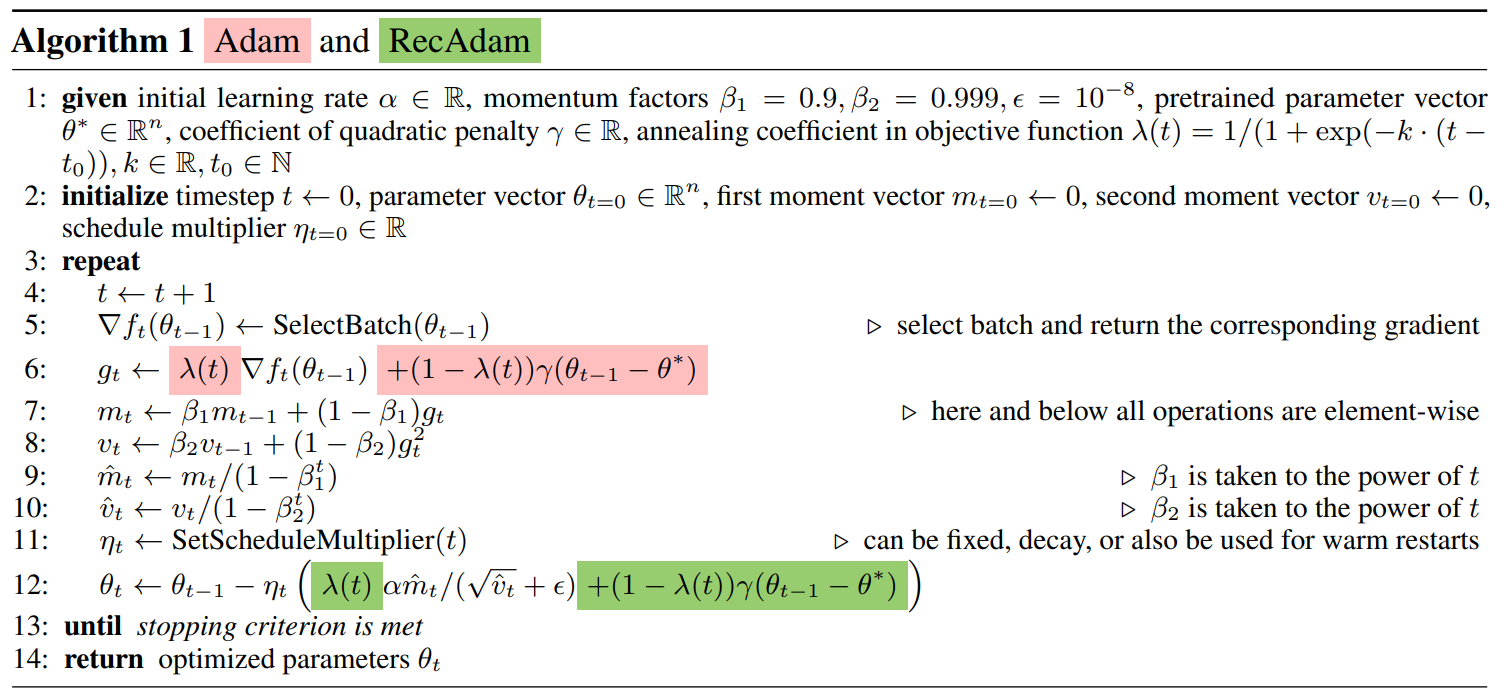

Recall and Learn: Fine-tuning Deep Pretrained Language Models with Less Forgetting

Sanyuan Chen, Yutai Hou, Yiming Cui, Wanxiang Che, Ting Liu, Xiangzhan Yu,

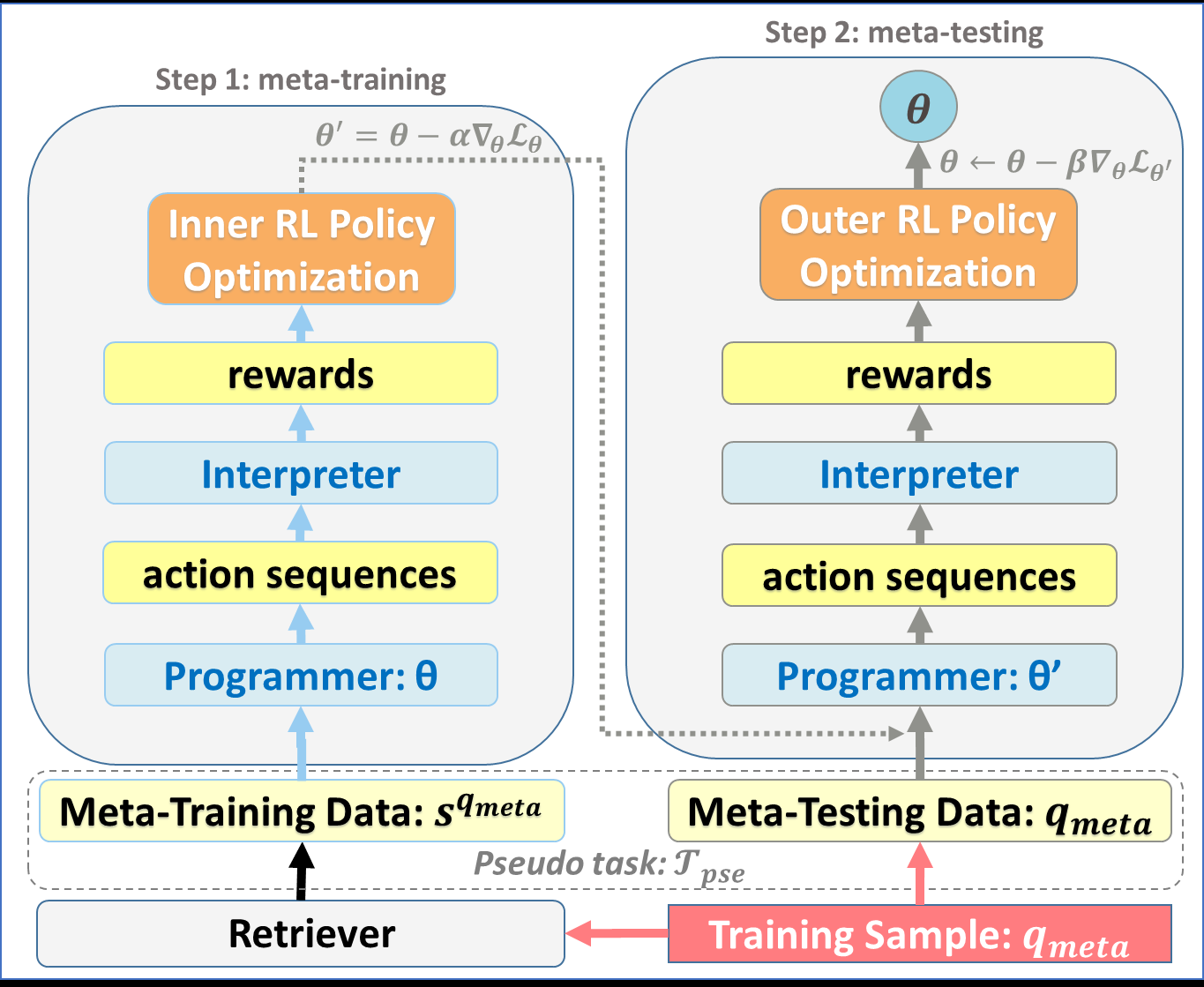

Few-Shot Complex Knowledge Base Question Answering via Meta Reinforcement Learning

Yuncheng Hua, Yuan-Fang Li, Gholamreza Haffari, Guilin Qi, Tongtong Wu,