Bridging the Gap between Prior and Posterior Knowledge Selection for Knowledge-Grounded Dialogue Generation

Xiuyi Chen, Fandong Meng, Peng Li, Feilong Chen, Shuang Xu, Bo Xu, Jie Zhou

Dialog and Interactive Systems Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

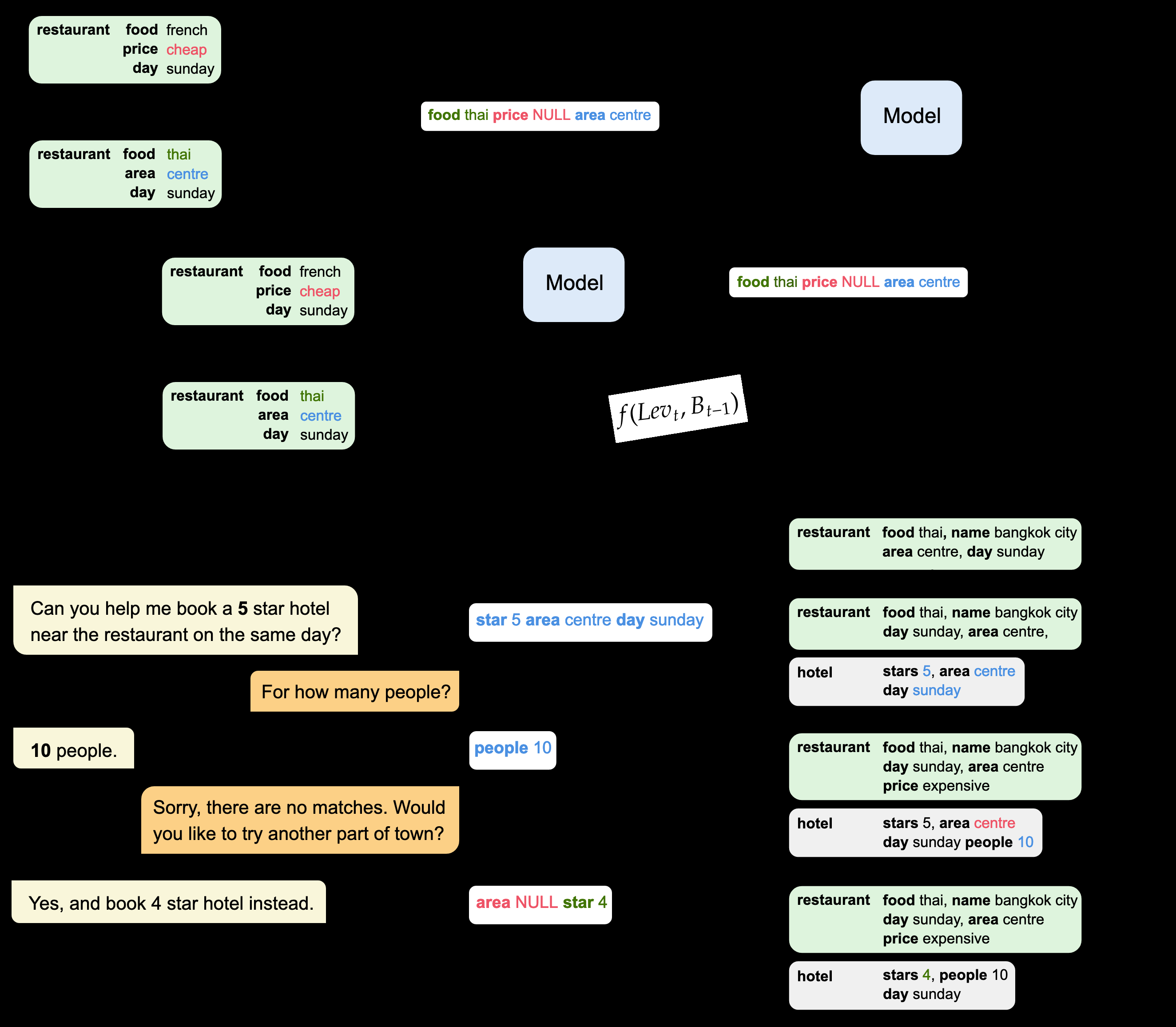

Knowledge selection plays an important role in knowledge-grounded dialogue, which is a challenging task to generate more informative responses by leveraging external knowledge. Recently, latent variable models have been proposed to deal with the diversity of knowledge selection by using both prior and posterior distributions over knowledge and achieve promising performance. However, these models suffer from a huge gap between prior and posterior knowledge selection. Firstly, the prior selection module may not learn to select knowledge properly because of lacking the necessary posterior information. Secondly, latent variable models suffer from the exposure bias that dialogue generation is based on the knowledge selected from the posterior distribution at training but from the prior distribution at inference. Here, we deal with these issues on two aspects: (1) We enhance the prior selection module with the necessary posterior information obtained from the specially designed Posterior Information Prediction Module (PIPM); (2) We propose a Knowledge Distillation Based Training Strategy (KDBTS) to train the decoder with the knowledge selected from the prior distribution, removing the exposure bias of knowledge selection. Experimental results on two knowledge-grounded dialogue datasets show that both PIPM and KDBTS achieve performance improvement over the state-of-the-art latent variable model and their combination shows further improvement.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Knowledge-Grounded Dialogue Generation with Pre-trained Language Models

Xueliang Zhao, Wei Wu, Can Xu, Chongyang Tao, Dongyan Zhao, Rui Yan,

MinTL: Minimalist Transfer Learning for Task-Oriented Dialogue Systems

Zhaojiang Lin, Andrea Madotto, Genta Indra Winata, Pascale Fung,

Generating Dialogue Responses from a Semantic Latent Space

Wei-Jen Ko, Avik Ray, Yilin Shen, Hongxia Jin,

Cross Copy Network for Dialogue Generation

Changzhen Ji, Xin Zhou, Yating Zhang, Xiaozhong Liu, Changlong Sun, Conghui Zhu, Tiejun Zhao,