Filtering Noisy Dialogue Corpora by Connectivity and Content Relatedness

Reina Akama, Sho Yokoi, Jun Suzuki, Kentaro Inui

Dialog and Interactive Systems Long Paper

Abstract:

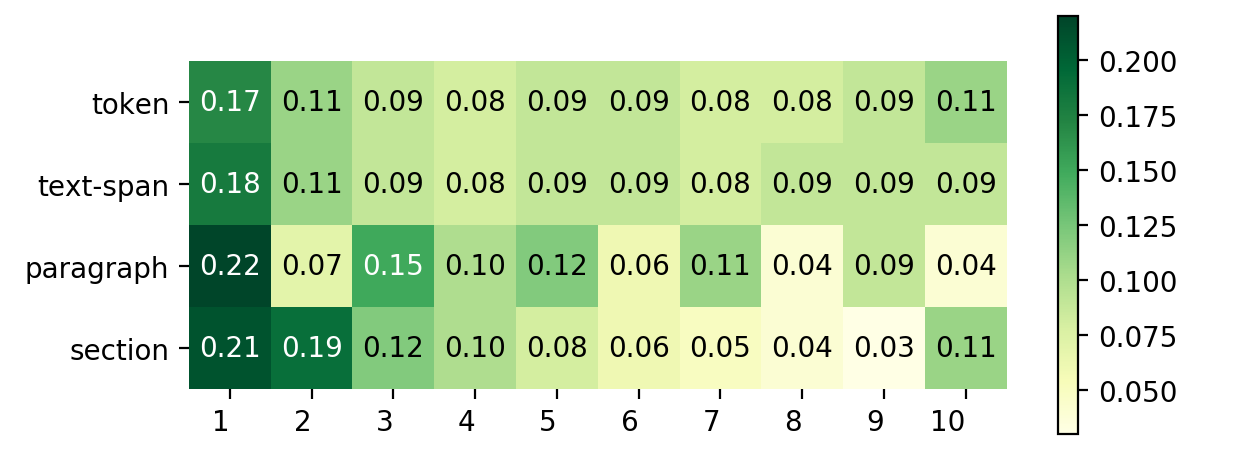

Large-scale dialogue datasets have recently become available for training neural dialogue agents. However, these datasets have been reported to contain a non-negligible number of unacceptable utterance pairs. In this paper, we propose a method for scoring the quality of utterance pairs in terms of their connectivity and relatedness. The scoring method builds on findings widely shared in the dialogue and linguistics research communities. We demonstrate that its scores correlate relatively well with human judgments of dialogue quality. Furthermore, we apply the method to filter potentially unacceptable utterance pairs out of a large-scale noisy dialogue corpus. We experimentally confirm that training on data filtered by the proposed method improves the quality of responses generated by neural dialogue agents.
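The score-then-filter idea in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, not the authors' implementation: the abstract does not say how the connectivity and relatedness scores are computed or combined, so the weighted sum, the weights `alpha` and `beta`, the threshold, and the function names are all hypothetical. `toy_relatedness` is a plain token-overlap (Jaccard) stand-in, and the connectivity scorer is left for the caller to supply.

```python
from typing import Callable, Iterable, List, Tuple

# An utterance pair: (utterance, response).
Pair = Tuple[str, str]
Scorer = Callable[[Pair], float]


def toy_relatedness(pair: Pair) -> float:
    """Stand-in relatedness score: Jaccard overlap of lowercased tokens.

    This is NOT the paper's measure, only a placeholder so the sketch runs.
    """
    utterance_tokens = set(pair[0].lower().split())
    response_tokens = set(pair[1].lower().split())
    if not utterance_tokens or not response_tokens:
        return 0.0
    shared = utterance_tokens & response_tokens
    return len(shared) / len(utterance_tokens | response_tokens)


def combined_score(pair: Pair, connectivity: Scorer, relatedness: Scorer,
                   alpha: float = 0.5, beta: float = 0.5) -> float:
    """Hypothetical combination: weighted sum of the two scores."""
    return alpha * connectivity(pair) + beta * relatedness(pair)


def filter_pairs(pairs: Iterable[Pair], connectivity: Scorer,
                 relatedness: Scorer, threshold: float,
                 alpha: float = 0.5, beta: float = 0.5) -> List[Pair]:
    """Keep only pairs whose combined score reaches the threshold."""
    return [
        pair for pair in pairs
        if combined_score(pair, connectivity, relatedness, alpha, beta) >= threshold
    ]
```

In use, a caller would pass a corpus of pairs together with two scoring functions and keep the pairs that clear a chosen threshold; the threshold and weights would need to be tuned against human judgments rather than taken from this sketch.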

Connected Papers in EMNLP 2020

Similar Papers

Dialogue Distillation: Open-Domain Dialogue Augmentation Using Unpaired Data

Rongsheng Zhang, Yinhe Zheng, Jianzhi Shao, Xiaoxi Mao, Yadong Xi, Minlie Huang

Multi-turn Response Selection using Dialogue Dependency Relations

Qi Jia, Yizhu Liu, Siyu Ren, Kenny Zhu, Haifeng Tang

doc2dial: A Goal-Oriented Document-Grounded Dialogue Dataset

Song Feng, Hui Wan, Chulaka Gunasekara, Siva Patel, Sachindra Joshi, Luis Lastras