Question Directed Graph Attention Network for Numerical Reasoning over Text

Kunlong Chen, Weidi Xu, Xingyi Cheng, Zou Xiaochuan, Yuyu Zhang, Le Song, Taifeng Wang, Yuan Qi, Wei Chu

Question Answering Long Paper


Abstract:

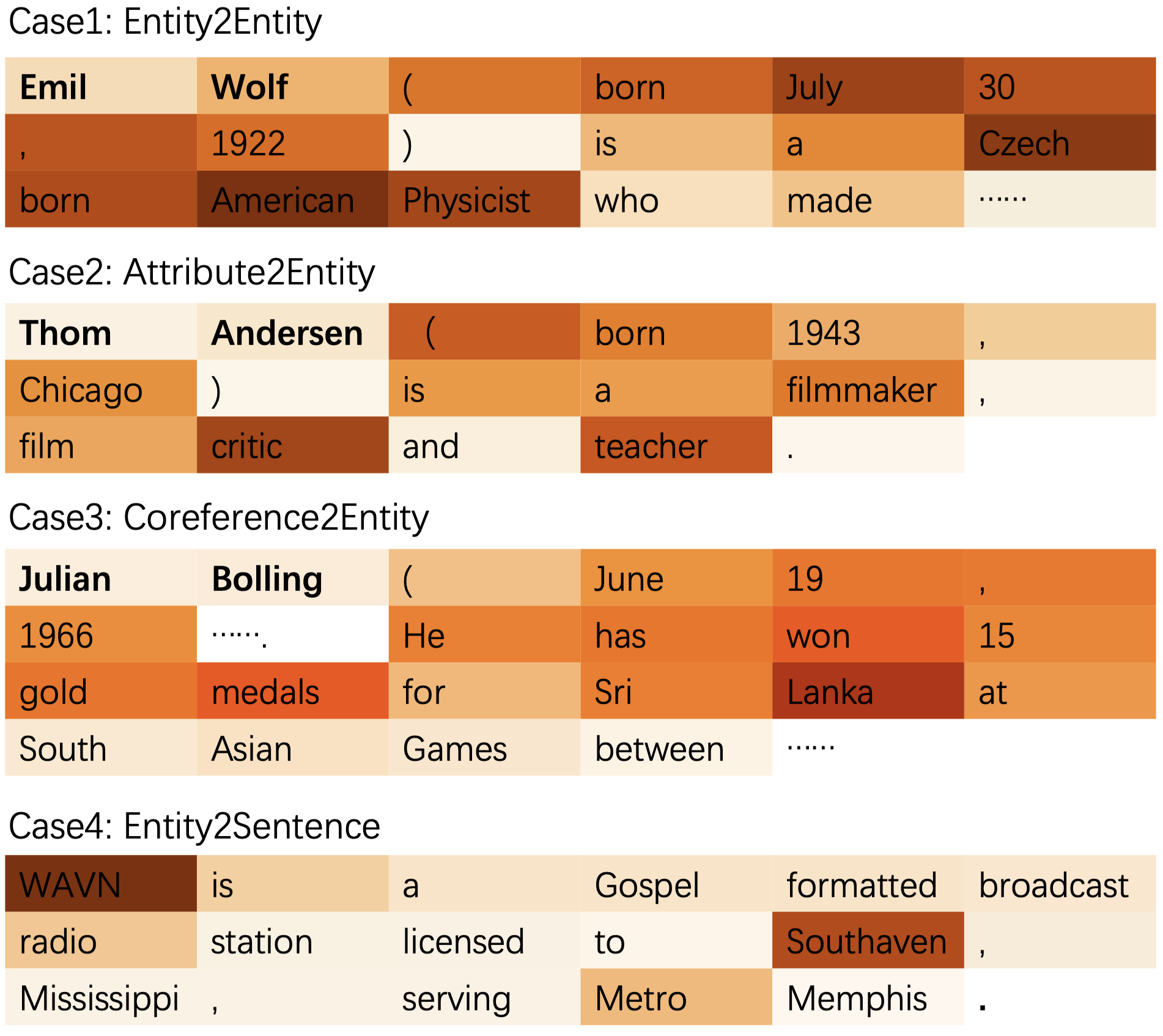

Numerical reasoning over text, such as addition, subtraction, sorting, and counting, is a challenging machine reading comprehension task because it requires both natural language understanding and arithmetic computation. To address this challenge, we propose a heterogeneous graph representation of the passage and question context needed for such reasoning, and we design a question directed graph attention network to drive multi-step numerical reasoning over this context graph. Our model, which combines deep learning and graph reasoning, achieves remarkable results on benchmark datasets such as DROP.
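To make the idea of question directed attention over a context graph more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the node names, the two-dimensional vectors, the dot-product scoring, and the residual update are all assumptions chosen for illustration, and the helper `question_directed_step` is hypothetical. The sketch only shows the general pattern of weighting a node's neighbors by how well they match the question and then aggregating them.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def question_directed_step(node_vecs, neighbors, question_vec):
    """One illustrative message-passing step (hypothetical helper).

    Each node attends over its neighbors, with attention scores computed
    against the question vector, then adds the weighted neighbor average
    to its own vector.
    """
    updated = {}
    for node, vec in node_vecs.items():
        nbrs = neighbors.get(node, [])
        if not nbrs:
            # Nodes without incoming edges keep their representation.
            updated[node] = list(vec)
            continue
        # Score each neighbor by its similarity to the question.
        weights = softmax([dot(node_vecs[n], question_vec) for n in nbrs])
        # Attention-weighted average of the neighbor vectors.
        message = [
            sum(w * node_vecs[n][i] for w, n in zip(weights, nbrs))
            for i in range(len(vec))
        ]
        # Residual update: own vector plus the aggregated message.
        updated[node] = [v + m for v, m in zip(vec, message)]
    return updated


# Toy heterogeneous graph: two number nodes and one entity node
# (node types are encoded in the name prefix; all values are made up).
node_vecs = {
    "num:3": [1.0, 0.0],
    "num:7": [0.0, 1.0],
    "ent:touchdown": [1.0, 1.0],
}
neighbors = {"ent:touchdown": ["num:3", "num:7"]}
question_vec = [2.0, 0.0]  # assumed to align with the first dimension

updated = question_directed_step(node_vecs, neighbors, question_vec)
```

In this toy setup the question vector aligns with `num:3`, so that neighbor receives most of the attention weight when the entity node is updated. Calling the function again on `updated` would correspond loosely to a second reasoning step.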



Similar Papers

Multi-Step Inference for Reasoning Over Paragraphs

Jiangming Liu, Matt Gardner, Shay B. Cohen, Mirella Lapata

Is Graph Structure Necessary for Multi-hop Question Answering?

Nan Shao, Yiming Cui, Ting Liu, Shijin Wang, Guoping Hu

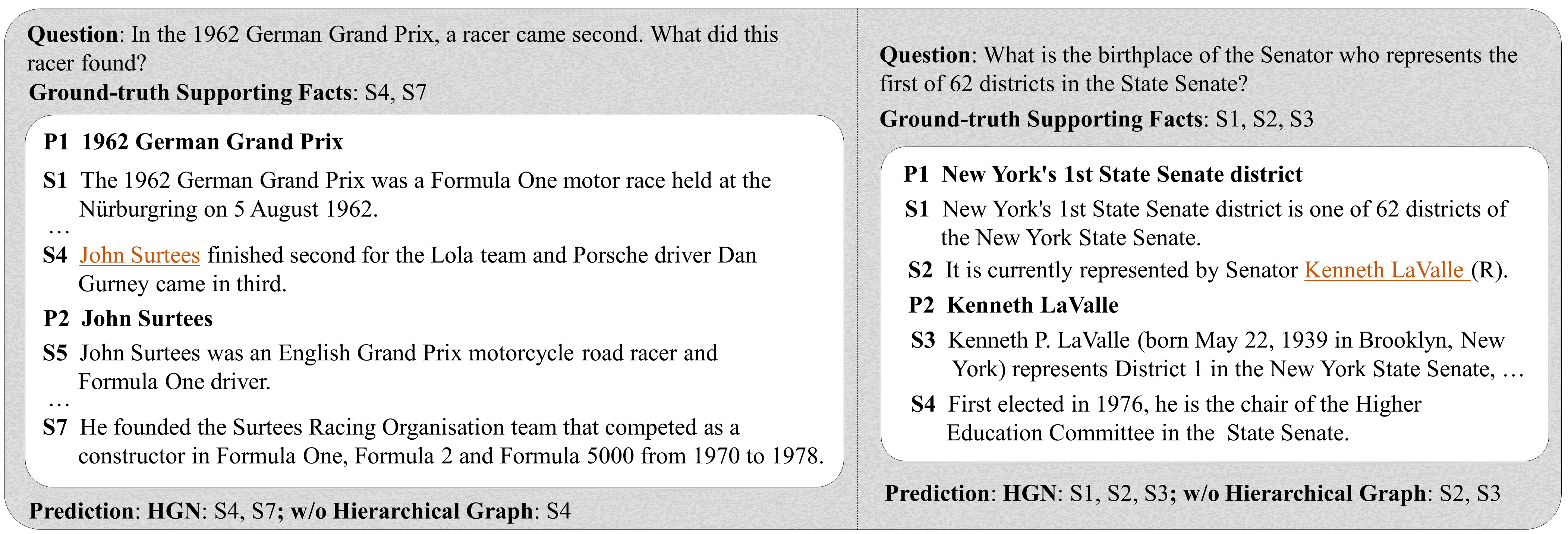

Hierarchical Graph Network for Multi-hop Question Answering

Yuwei Fang, Siqi Sun, Zhe Gan, Rohit Pillai, Shuohang Wang, Jingjing Liu

Be More with Less: Hypergraph Attention Networks for Inductive Text Classification

Kaize Ding, Jianling Wang, Jundong Li, Dingcheng Li, Huan Liu