Please Mind the Root: Decoding Arborescences for Dependency Parsing

Ran Zmigrod, Tim Vieira, Ryan Cotterell

Syntax: Tagging, Chunking, and Parsing Short Paper

You can open the pre-recorded video in a separate window.

Abstract:

The connection between dependency trees and spanning trees is exploited by the NLP community to train and to decode graph-based dependency parsers. However, the NLP literature has missed an important difference between the two structures: only one edge may emanate from the root in a dependency tree. We analyzed the output of state-of-the-art parsers on many languages from the Universal Dependency Treebank: although these parsers are often able to learn that trees which violate the constraint should be assigned lower probabilities, their ability to do so unsurprisingly de-grades as the size of the training set decreases.In fact, the worst constraint-violation rate we observe is 24%. Prior work has proposed an inefficient algorithm to enforce the constraint, which adds a factor of n to the decoding runtime. We adapt an algorithm due to Gabow and Tarjan (1984) to dependency parsing, which satisfies the constraint without compromising the original runtime.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

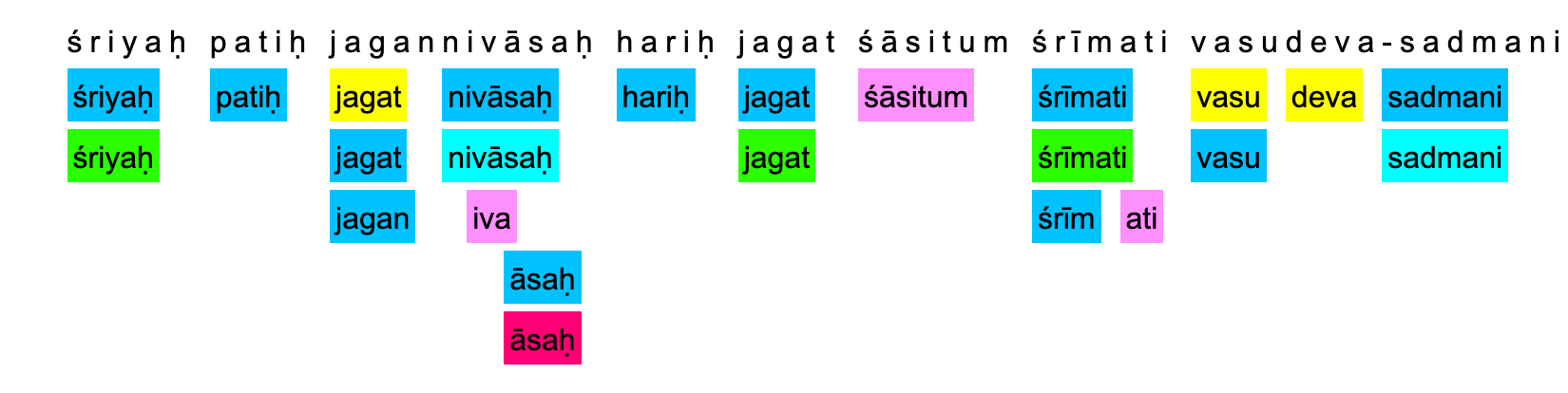

Keep it Surprisingly Simple: A Simple First Order Graph Based Parsing Model for Joint Morphosyntactic Parsing in Sanskrit

Amrith Krishna, Ashim Gupta, Deepak Garasangi, Pavankumar Satuluri, Pawan Goyal,