Incremental Event Detection via Knowledge Consolidation Networks

Pengfei Cao, Yubo Chen, Jun Zhao, Taifeng Wang

Information Extraction Long Paper

Abstract:

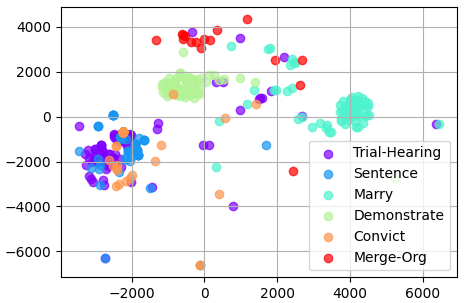

Conventional approaches to event detection usually require a fixed set of pre-defined event types. Such a requirement is often challenged in real-world applications, as new events continually occur. Because of the huge computation cost and storage budget, it is infeasible to store all previous data and re-train the model on both previous and new data every time new events arrive. We formulate such challenging scenarios as incremental event detection, which requires a model to learn new classes incrementally without performance degradation on previous classes. However, existing incremental learning methods cannot handle the semantic ambiguity and training data imbalance problems between old and new classes in the task of incremental event detection. In this paper, we propose a Knowledge Consolidation Network (KCN) to address the above issues. Specifically, we devise two components, prototype enhanced retrospection and hierarchical distillation, to mitigate the adverse effects of semantic ambiguity and class imbalance, respectively. Experimental results demonstrate the effectiveness of the proposed method, which outperforms the state-of-the-art model by 19% and 13.4% in whole F1 score on the ACE and TAC KBP benchmarks, respectively.
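The abstract does not spell out how prototype enhanced retrospection or hierarchical distillation work internally. For orientation only, the sketch below shows two generic incremental-learning building blocks that these component names evoke: keeping a small memory of old-class instances chosen around a class prototype (a simplified nearest-to-mean rule in the spirit of iCaRL) and a temperature-scaled distillation loss on old-class predictions (in the spirit of Learning without Forgetting). This is not KCN's method; the function names, the Euclidean selection rule, and the default temperature of 2.0 are illustrative assumptions.

```python
# Illustrative sketch only; not the KCN implementation.
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(old_logits: Sequence[float], new_logits: Sequence[float],
                      num_old_classes: int, temperature: float = 2.0) -> float:
    """Cross-entropy between the frozen old model's softened predictions (teacher)
    and the new model's softened predictions (student), over old classes only.
    Logits for newly added classes are ignored."""
    teacher = softmax(old_logits[:num_old_classes], temperature)
    student = softmax(new_logits[:num_old_classes], temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher, student))


def class_prototype(features: Sequence[Sequence[float]]) -> List[float]:
    """Mean feature vector of one class (a common definition of a prototype)."""
    dim = len(features[0])
    return [sum(f[d] for f in features) / len(features) for d in range(dim)]


def select_exemplars(features: Sequence[Sequence[float]], k: int) -> List[int]:
    """Indices of the k instances closest (Euclidean) to the class prototype,
    i.e. the ones a hypothetical replay memory would keep for this class."""
    proto = class_prototype(features)
    dist = [math.dist(f, proto) for f in features]
    return sorted(range(len(features)), key=lambda i: dist[i])[:k]


if __name__ == "__main__":
    feats = [[0.0, 0.0], [1.0, 1.0], [0.9, 1.1], [5.0, 5.0]]
    print(select_exemplars(feats, 2))  # the two instances nearest the class mean
    old = [2.0, 0.5, -1.0]
    print(distillation_loss(old, old + [3.0], num_old_classes=3))
```

The distillation term is smallest when the new model reproduces the old model's distribution over old classes, which is the sense in which such a loss discourages forgetting; the exemplar memory supplies real old-class instances to replay alongside new data.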

Connected Papers in EMNLP2020

Similar Papers

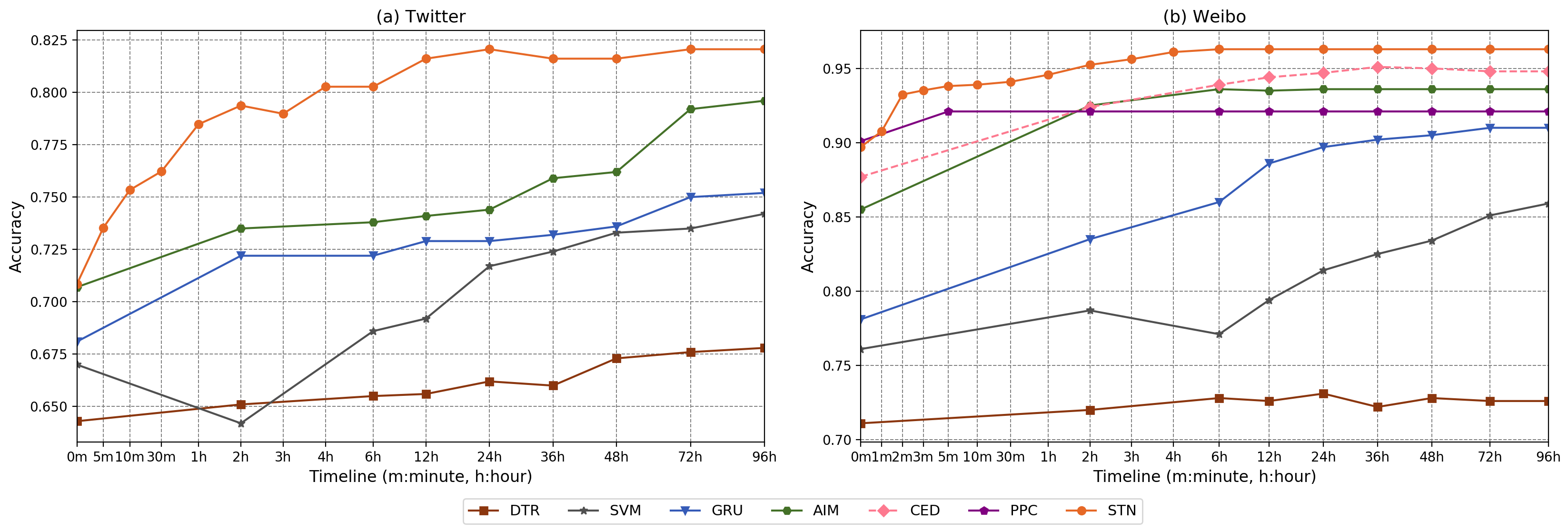

A State-independent and Time-evolving Network for Early Rumor Detection in Social Media

Rui Xia, Kaizhou Xuan, Jianfei Yu

Joint Constrained Learning for Event-Event Relation Extraction

Haoyu Wang, Muhao Chen, Hongming Zhang, Dan Roth

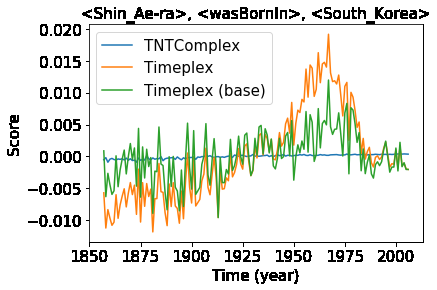

Temporal Knowledge Base Completion: New Algorithms and Evaluation Protocols

Prachi Jain, Sushant Rathi, Mausam, Soumen Chakrabarti