SeqMix: Augmenting Active Sequence Labeling via Sequence Mixup

Rongzhi Zhang, Yue Yu, Chao Zhang

Information Extraction Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

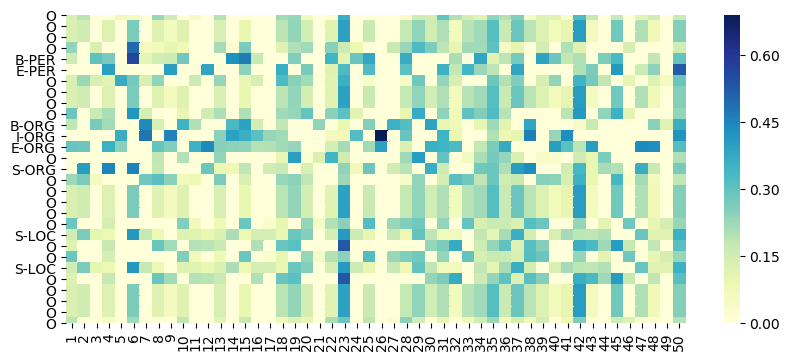

Active learning is an important technique for low-resource sequence labeling tasks. However, current active sequence labeling methods use the queried samples alone in each iteration, which is an inefficient way of leveraging human annotations. We propose a simple but effective data augmentation method to improve label efficiency of active sequence labeling. Our method, SeqMix, simply augments the queried samples by generating extra labeled sequences in each iteration. The key difficulty is to generate plausible sequences along with token-level labels. In SeqMix, we address this challenge by performing mixup for both sequences and token-level labels of the queried samples. Furthermore, we design a discriminator during sequence mixup, which judges whether the generated sequences are plausible or not. Our experiments on Named Entity Recognition and Event Detection tasks show that SeqMix can improve the standard active sequence labeling method by $2.27\%$--$3.75\%$ in terms of $F_1$ scores. The code and data for SeqMix can be found at https://github.com/rz-zhang/SeqMix.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Semantic Label Smoothing for Sequence to Sequence Problems

Michal Lukasik, Himanshu Jain, Aditya Menon, Seungyeon Kim, Srinadh Bhojanapalli, Felix Yu, Sanjiv Kumar,

An Exploration of Arbitrary-Order Sequence Labeling via Energy-Based Inference Networks

Lifu Tu, Tianyu Liu, Kevin Gimpel,

Uncertainty-Aware Label Refinement for Sequence Labeling

Tao Gui, Jiacheng Ye, Qi Zhang, Zhengyan Li, Zichu Fei, Yeyun Gong, Xuanjing Huang,