Structure Aware Negative Sampling in Knowledge Graphs

Kian Ahrabian, Aarash Feizi, Yasmin Salehi, William L. Hamilton, Avishek Joey Bose

Machine Learning for NLP Short Paper


Abstract:

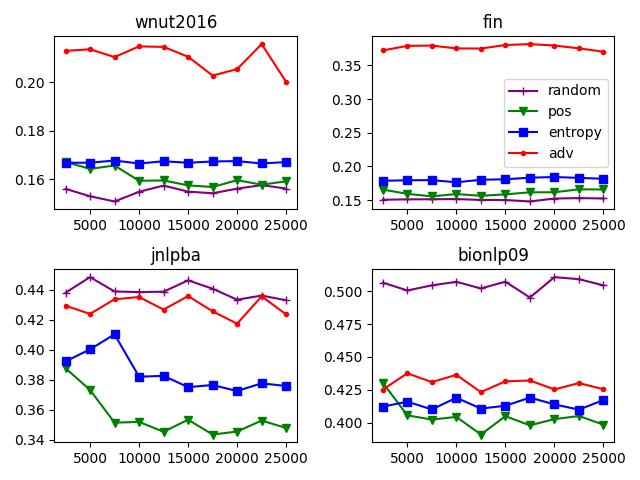

Learning low-dimensional representations for entities and relations in knowledge graphs using contrastive estimation is a scalable and effective method for inferring connectivity patterns. A crucial aspect of contrastive learning approaches is the choice of corruption distribution that generates hard negative samples, which force the embedding model to learn discriminative representations and find critical characteristics of the observed data. Earlier methods employ either overly simple corruption distributions (i.e., uniform), which yield easy, uninformative negatives, or sophisticated adversarial distributions with challenging optimization schemes; neither explicitly incorporates known graph structure, which results in suboptimal negatives. In this paper, we propose Structure Aware Negative Sampling (SANS), an inexpensive negative sampling strategy that utilizes the rich graph structure by selecting negative samples from a node's $k$-hop neighborhood. Empirically, we demonstrate that SANS finds semantically meaningful negatives and is competitive with SOTA approaches while requiring no additional parameters or difficult adversarial optimization.
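The idea of drawing negatives from a node's $k$-hop neighborhood can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`build_adjacency`, `k_hop_neighborhood`, `sample_negative_tails`), the choice to treat the graph as undirected, the restriction to tail corruption, and the filter that drops candidates forming an already observed triple are all assumptions made for illustration.

```python
import random
from collections import defaultdict, deque


def build_adjacency(triples):
    """Undirected entity adjacency that ignores relation types (an assumption)."""
    adj = defaultdict(set)
    for head, _rel, tail in triples:
        adj[head].add(tail)
        adj[tail].add(head)
    return adj


def k_hop_neighborhood(adj, node, k):
    """Return the entities reachable from `node` in 1..k hops, excluding `node`."""
    seen = {node}
    frontier = deque([(node, 0)])
    while frontier:
        current, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        for nxt in adj.get(current, ()):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    seen.discard(node)
    return seen


def sample_negative_tails(triples, positive, k, num_samples, rng):
    """Corrupt the tail of `positive` with entities from the head's k-hop neighborhood.

    Candidates that would reproduce an observed triple are filtered out
    (an illustrative choice, not taken from the abstract).
    """
    head, rel, _tail = positive
    observed = set(triples)
    adj = build_adjacency(triples)
    candidates = sorted(
        e for e in k_hop_neighborhood(adj, head, k)
        if (head, rel, e) not in observed
    )
    if not candidates:
        return []
    # Sample with replacement, uniformly over the structure-aware candidates.
    return [(head, rel, rng.choice(candidates)) for _ in range(num_samples)]
```

On a toy chain `a -> b -> c -> d -> e` with a single hypothetical relation, calling `sample_negative_tails(triples, ("a", "likes", "b"), k=2, num_samples=3, rng=random.Random(0))` can only return corruptions with tail `c`: `b` is excluded because `("a", "likes", "b")` is observed, and `d` and `e` lie outside the 2-hop neighborhood of `a`. Such a sampler adds no trainable parameters, which matches the motivation stated in the abstract.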


Connected Papers in EMNLP2020

Similar Papers

Disentangle-based Continual Graph Representation Learning

Xiaoyu Kou, Yankai Lin, Shaobo Liu, Peng Li, Jie Zhou, Yan Zhang

Effective Unsupervised Domain Adaptation with Adversarially Trained Language Models

Thuy-Trang Vu, Dinh Phung, Gholamreza Haffari

Adversarial Self-Supervised Data-Free Distillation for Text Classification

Xinyin Ma, Yongliang Shen, Gongfan Fang, Chen Chen, Chenghao Jia, Weiming Lu

Evaluating the Calibration of Knowledge Graph Embeddings for Trustworthy Link Prediction

Tara Safavi, Danai Koutra, Edgar Meij