Utility is in the Eye of the User: A Critique of NLP Leaderboards

Kawin Ethayarajh, Dan Jurafsky

NLP Applications Short Paper

Abstract:

Benchmarks such as GLUE have helped drive advances in NLP by incentivizing the creation of more accurate models. While this leaderboard paradigm has been remarkably successful, a historical focus on performance-based evaluation has been at the expense of other qualities that the NLP community values in models, such as compactness, fairness, and energy efficiency. In this opinion paper, we study the divergence between what is incentivized by leaderboards and what is useful in practice through the lens of microeconomic theory. We frame both the leaderboard and NLP practitioners as consumers and the benefit they get from a model as its utility to them. With this framing, we formalize how leaderboards -- in their current form -- can be poor proxies for the NLP community at large. For example, a highly inefficient model would provide less utility to practitioners but not to a leaderboard, since it is a cost that only the former must bear. To allow practitioners to better estimate a model's utility to them, we advocate for more transparency on leaderboards, such as the reporting of statistics that are of practical concern (e.g., model size, energy efficiency, and inference latency).

Connected Papers in EMNLP2020

Similar Papers

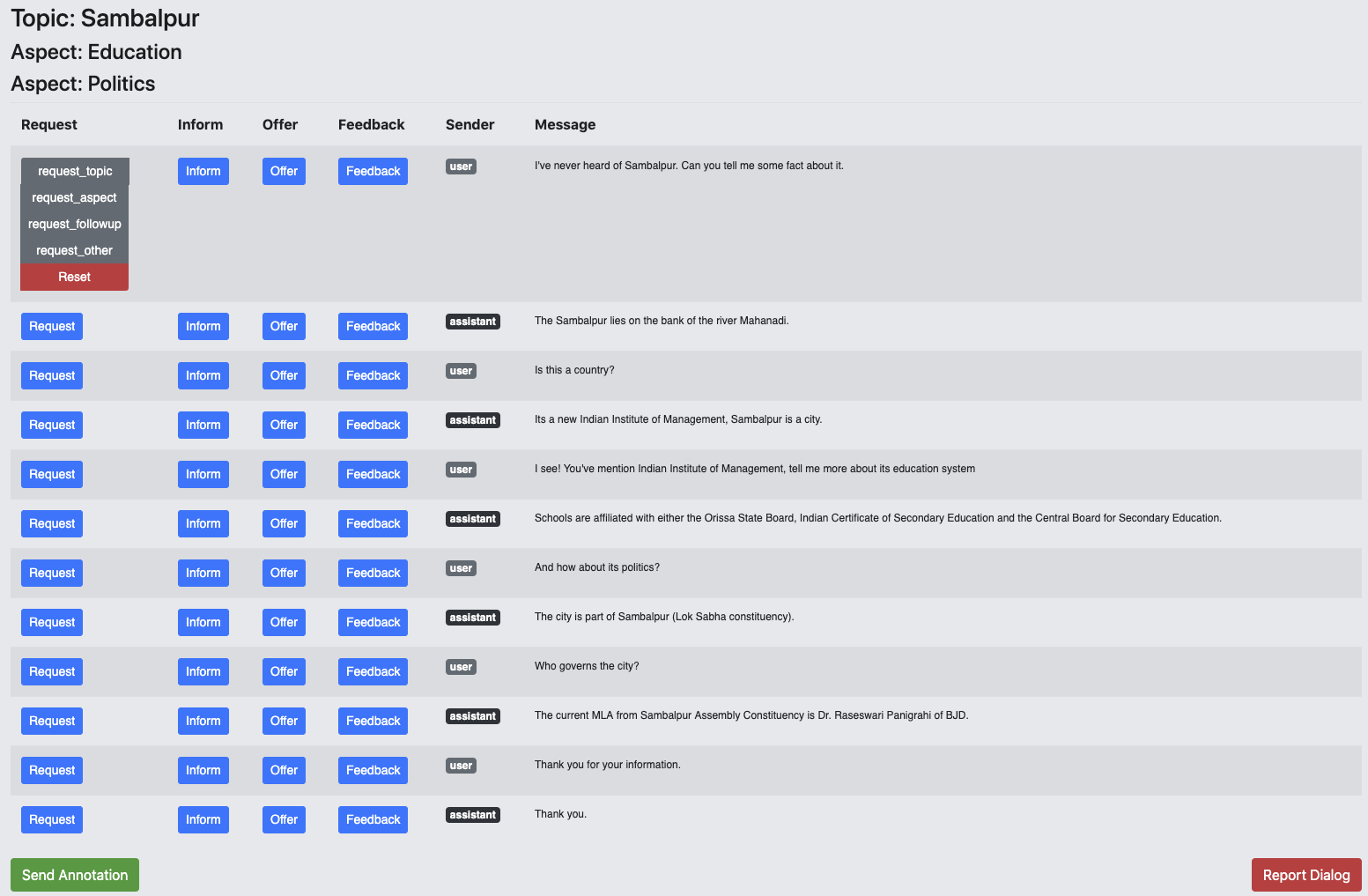

Information Seeking in the Spirit of Learning: A Dataset for Conversational Curiosity

Pedro Rodriguez, Paul Crook, Seungwhan Moon, Zhiguang Wang

DORB: Dynamically Optimizing Multiple Rewards with Bandits

Ramakanth Pasunuru, Han Guo, Mohit Bansal

Incremental Processing in the Age of Non-Incremental Encoders: An Empirical Assessment of Bidirectional Models for Incremental NLU

Brielen Madureira, David Schlangen