If beam search is the answer, what was the question?

Clara Meister, Ryan Cotterell, Tim Vieira

Language Generation Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

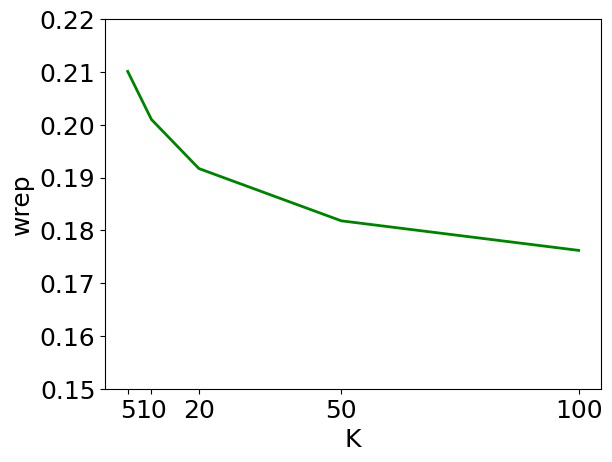

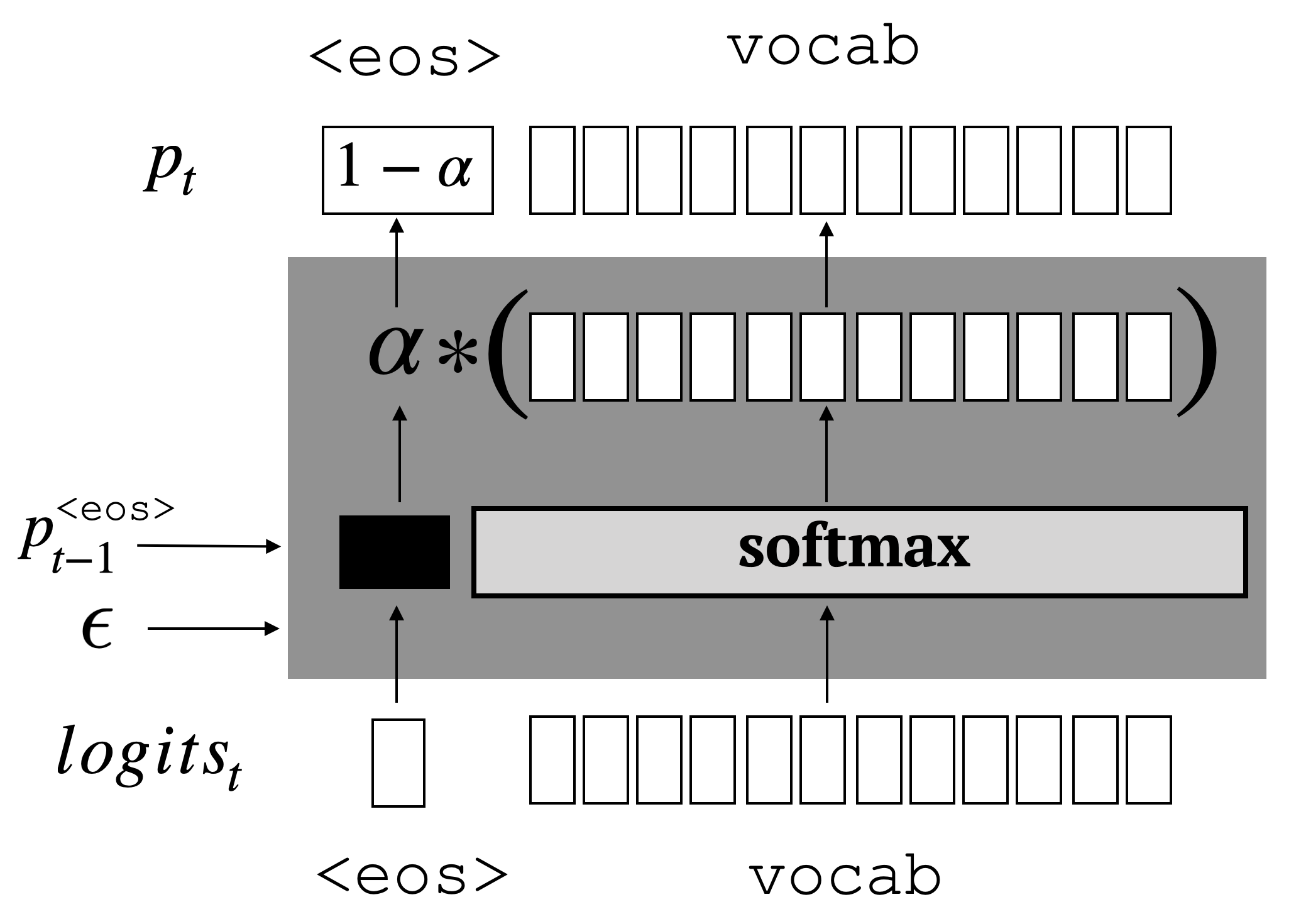

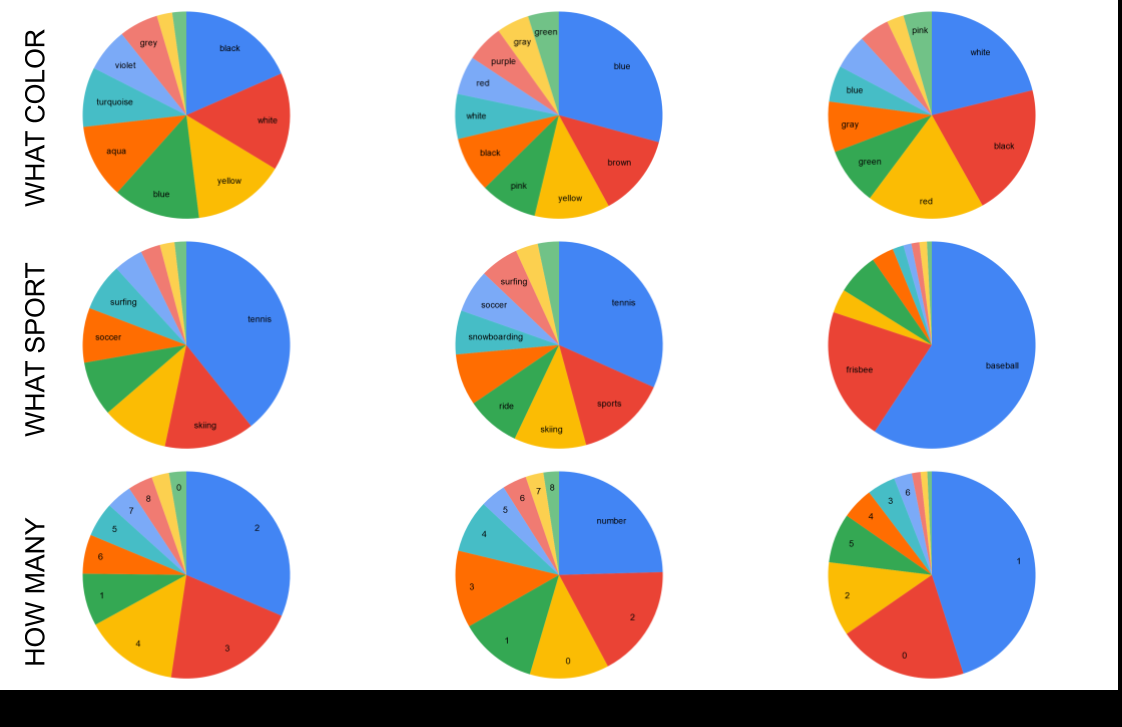

Quite surprisingly, exact maximum a posteriori (MAP) decoding of neural language generators frequently leads to low-quality results. Rather, most state-of-the-art results on language generation tasks are attained using beam search despite its overwhelmingly high search error rate. This implies that the MAP objective alone does not express the properties we desire in text, which merits the question: if beam search is the answer, what was the question? We frame beam search as the exact solution to a different decoding objective in order to gain insights into why high probability under a model alone may not indicate adequacy. We find that beam search enforces uniform information density in text, a property motivated by cognitive science. We suggest a set of decoding objectives that explicitly enforce this property and find that exact decoding with these objectives alleviates the problems encountered when decoding poorly calibrated language generation models. Additionally, we analyze the text produced using various decoding strategies and see that, in our neural machine translation experiments, the extent to which this property is adhered to strongly correlates with BLEU.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Consistency of a Recurrent Language Model With Respect to Incomplete Decoding

Sean Welleck, Ilia Kulikov, Jaedeok Kim, Richard Yuanzhe Pang, Kyunghyun Cho,

MUTANT: A Training Paradigm for Out-of-Distribution Generalization in Visual Question Answering

Tejas Gokhale, Pratyay Banerjee, Chitta Baral, Yezhou Yang,

An Information Bottleneck Approach for Controlling Conciseness in Rationale Extraction

Bhargavi Paranjape, Mandar Joshi, John Thickstun, Hannaneh Hajishirzi, Luke Zettlemoyer,