Zero-Shot Crosslingual Sentence Simplification

Jonathan Mallinson, Rico Sennrich, Mirella Lapata

Language Generation Long Paper

Abstract:

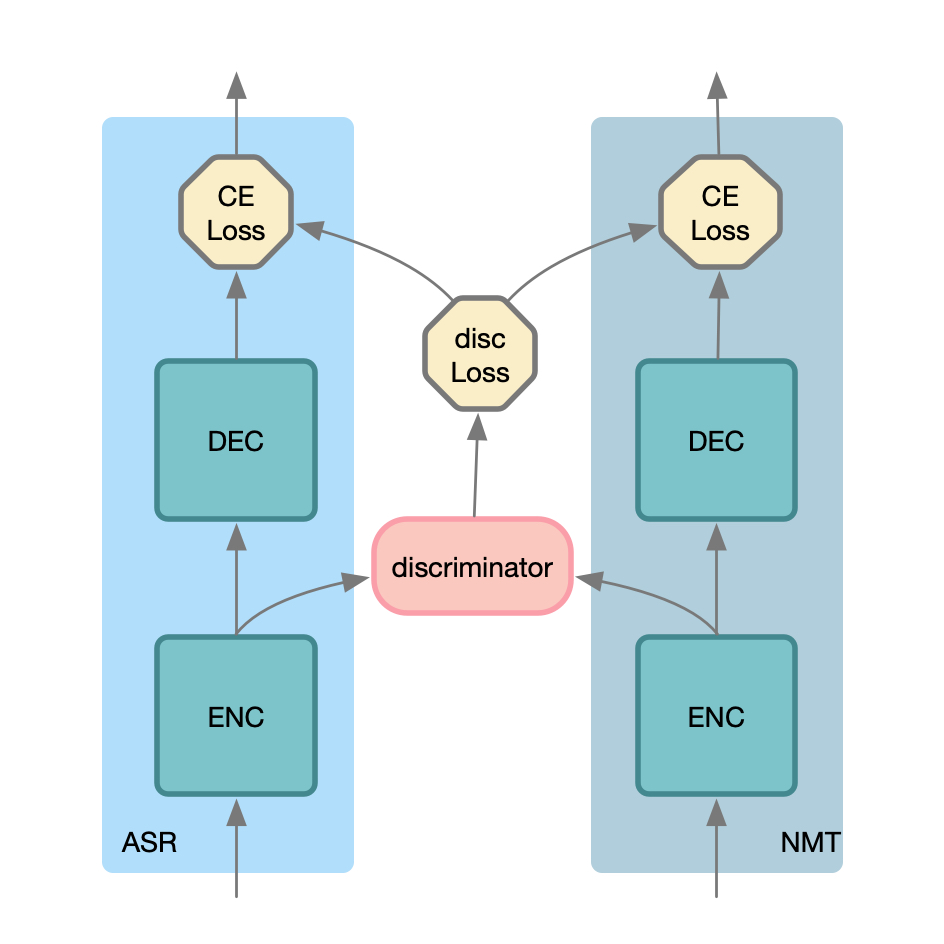

Sentence simplification aims to make sentences easier to read and understand. Recent approaches have shown promising results with encoder-decoder models trained on large amounts of parallel data, which often exists only in English. We propose a zero-shot modeling framework that transfers simplification knowledge from English to another language (for which no parallel simplification corpus exists) while generalizing across languages and tasks. A shared transformer encoder constructs language-agnostic representations, with a combination of task-specific encoder layers added on top (e.g., for translation and simplification). Empirical results using both human and automatic metrics show that our approach produces better simplifications than unsupervised and pivot-based methods.
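The layering described in the abstract (a shared encoder with task-specific layers on top) can be illustrated with a toy sketch. This is not the authors' implementation: the class name `SharedEncoderWithTaskLayers`, the helper `make_layer`, the task keys, and the choice to apply the selected task stacks one after another are all assumptions made for illustration, and elementwise affine maps stand in for real transformer layers.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def make_layer(scale: float, shift: float) -> Layer:
    """Hypothetical stand-in for a transformer layer: an elementwise affine map."""
    def layer(x: Vector) -> Vector:
        return [scale * v + shift for v in x]
    return layer


class SharedEncoderWithTaskLayers:
    """Toy model: shared layers run for every input, task layers run on demand."""

    def __init__(self, shared: Sequence[Layer],
                 task_layers: Dict[str, Sequence[Layer]]) -> None:
        self.shared = list(shared)
        self.task_layers = {name: list(layers) for name, layers in task_layers.items()}

    def encode(self, x: Vector, tasks: Sequence[str]) -> Vector:
        # Shared layers: applied regardless of language or task.
        for layer in self.shared:
            x = layer(x)
        # Task-specific layers: applied in the order the tasks are listed
        # (sequential stacking is an assumption of this sketch).
        for task in tasks:
            for layer in self.task_layers[task]:
                x = layer(x)
        return x


model = SharedEncoderWithTaskLayers(
    shared=[make_layer(2.0, 0.0)],
    task_layers={
        "translate": [make_layer(1.0, 1.0)],
        "simplify": [make_layer(0.5, 0.0)],
    },
)

simplified = model.encode([1.0, 2.0], ["simplify"])
translated_then_simplified = model.encode([1.0, 2.0], ["translate", "simplify"])
```

The point of the sketch is only the routing: the same shared layers serve every input, while the caller picks which task-specific stacks to place on top, so a stack trained on one task can in principle be reused with inputs it was never paired with during training.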

Connected Papers in EMNLP2020

Similar Papers

Monolingual Adapters for Zero-Shot Neural Machine Translation

Jerin Philip, Alexandre Berard, Matthias Gallé, Laurent Besacier

Uncertainty-Aware Semantic Augmentation for Neural Machine Translation

Xiangpeng Wei, Heng Yu, Yue Hu, Rongxiang Weng, Luxi Xing, Weihua Luo

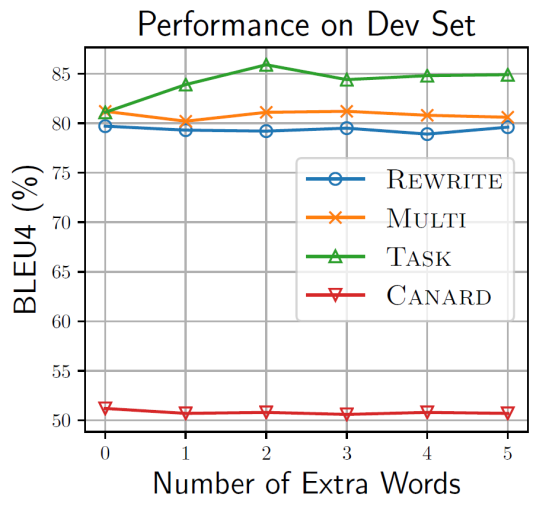

Incomplete Utterance Rewriting as Semantic Segmentation

Qian Liu, Bei Chen, Jian-Guang Lou, Bin Zhou, Dongmei Zhang

Effectively pretraining a speech translation decoder with Machine Translation data

Ashkan Alinejad, Anoop Sarkar