Train No Evil: Selective Masking for Task-Guided Pre-Training

Yuxian Gu, Zhengyan Zhang, Xiaozhi Wang, Zhiyuan Liu, Maosong Sun

Sentiment Analysis, Stylistic Analysis, and Argument Mining Short Paper


Abstract:

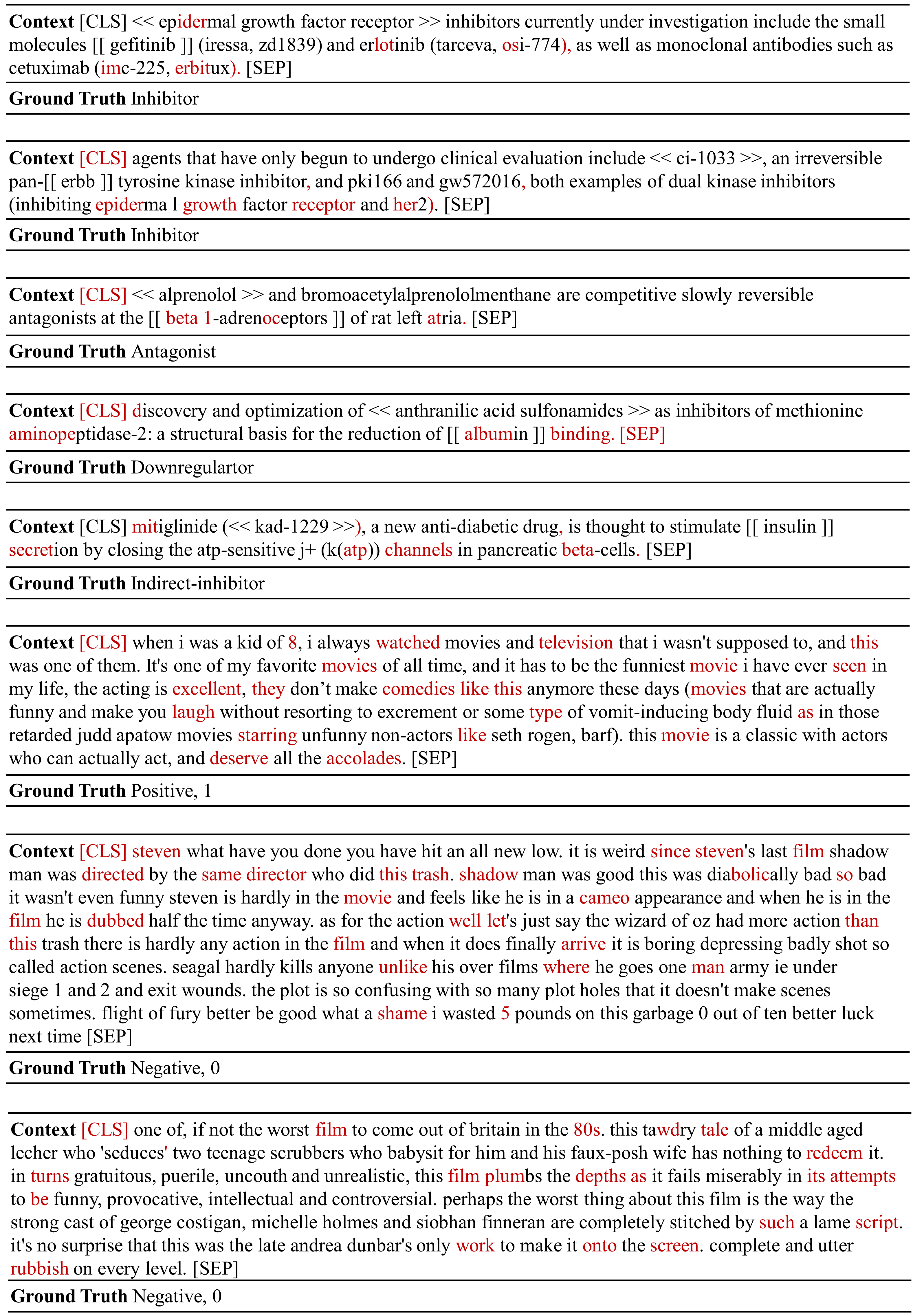

Recently, pre-trained language models mostly follow the pre-train-then-fine-tune paradigm and have achieved great performance on various downstream tasks. However, since the pre-training stage is typically task-agnostic and the fine-tuning stage usually suffers from insufficient supervised data, the models cannot always capture domain-specific and task-specific patterns well. In this paper, we propose a three-stage framework that adds a task-guided pre-training stage with selective masking between general pre-training and fine-tuning. In this stage, the model is trained by masked language modeling on in-domain unsupervised data to learn domain-specific patterns, and we propose a novel selective masking strategy to learn task-specific patterns. Specifically, we design a method to measure the importance of each token in a sequence and selectively mask the important tokens. Experimental results on two sentiment analysis tasks show that our method can achieve comparable or even better performance with less than 50% of the computation cost, which indicates that our method is both effective and efficient. The source code of this paper can be obtained from https://github.com/thunlp/SelectiveMasking.
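The masking step described in the abstract can be illustrated with a minimal sketch. The abstract does not say how token importance is computed, so the scores below are hand-written placeholders, and the function name `selective_mask`, the `threshold` parameter, and the `[MASK]` symbol are assumptions made for illustration only. This is not the code from the linked repository.

```python
from typing import List, Optional, Sequence, Tuple

MASK_TOKEN = "[MASK]"  # assumption: a BERT-style mask symbol


def selective_mask(
    tokens: Sequence[str],
    scores: Sequence[float],
    threshold: float,
    mask_token: str = MASK_TOKEN,
) -> Tuple[List[str], List[Optional[str]]]:
    """Mask every token whose importance score is at least `threshold`.

    Returns the masked sequence and a label list that holds the original
    token at masked positions and None everywhere else, which is the
    usual shape of a masked language modeling target.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")

    masked: List[str] = []
    labels: List[Optional[str]] = []
    for token, score in zip(tokens, scores):
        if score >= threshold:
            masked.append(mask_token)  # hide the important token
            labels.append(token)       # the model must predict it
        else:
            masked.append(token)       # leave unimportant tokens visible
            labels.append(None)        # no prediction target here
    return masked, labels


# Toy sentiment example; the scores are invented, not model outputs.
tokens = ["the", "movie", "was", "surprisingly", "good"]
scores = [0.01, 0.20, 0.02, 0.55, 0.90]
masked, labels = selective_mask(tokens, scores, threshold=0.5)
print(masked)
print(labels)
```

In contrast to uniform random masking, only the positions judged important for the task become prediction targets here; how those judgments are obtained is the part the paper itself defines.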


Connected Papers in EMNLP2020

Similar Papers

Neural Mask Generator: Learning to Generate Adaptive Word Maskings for Language Model Adaptation

Minki Kang, Moonsu Han, Sung Ju Hwang

Meta Fine-Tuning Neural Language Models for Multi-Domain Text Mining

Chengyu Wang, Minghui Qiu, Jun Huang, Xiaofeng He

Self-Supervised Meta-Learning for Few-Shot Natural Language Classification Tasks

Trapit Bansal, Rishikesh Jha, Tsendsuren Munkhdalai, Andrew McCallum