AnswerFact: Fact Checking in Product Question Answering

Wenxuan Zhang, Yang Deng, Jing Ma, Wai Lam

Question Answering Long Paper

Abstract:

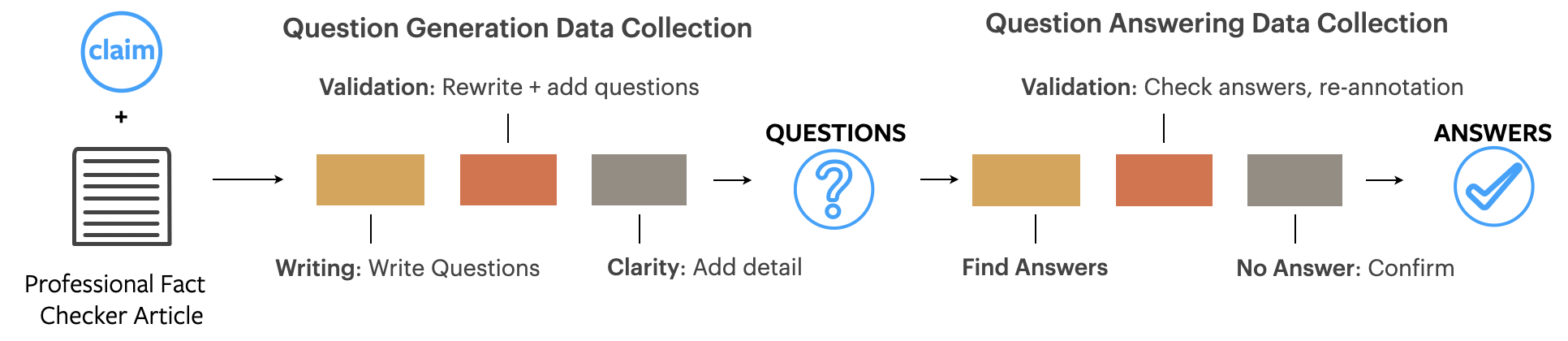

Product-related question answering platforms are now widely deployed on e-commerce sites, giving potential customers a convenient way to address their concerns while shopping online. However, misinformation in the answers on these platforms poses unprecedented challenges for users seeking reliable and truthful product information, and may even cause commercial losses for e-commerce businesses. To tackle this issue, we investigate predicting the veracity of answers and introduce AnswerFact, a large-scale fact checking dataset built from product question answering forums. Each answer is accompanied by its veracity label and associated evidence sentences, providing a valuable testbed for evidence-based fact checking in QA settings. We further propose a novel neural model with tailored evidence ranking components for the answer veracity prediction problem. Extensive experiments with our proposed model and various existing fact checking methods show that our method outperforms all baselines on this task.

Connected Papers in EMNLP2020

Similar Papers

F1 is Not Enough! Models and Evaluation Towards User-Centered Explainable Question Answering

Hendrik Schuff, Heike Adel, Ngoc Thang Vu

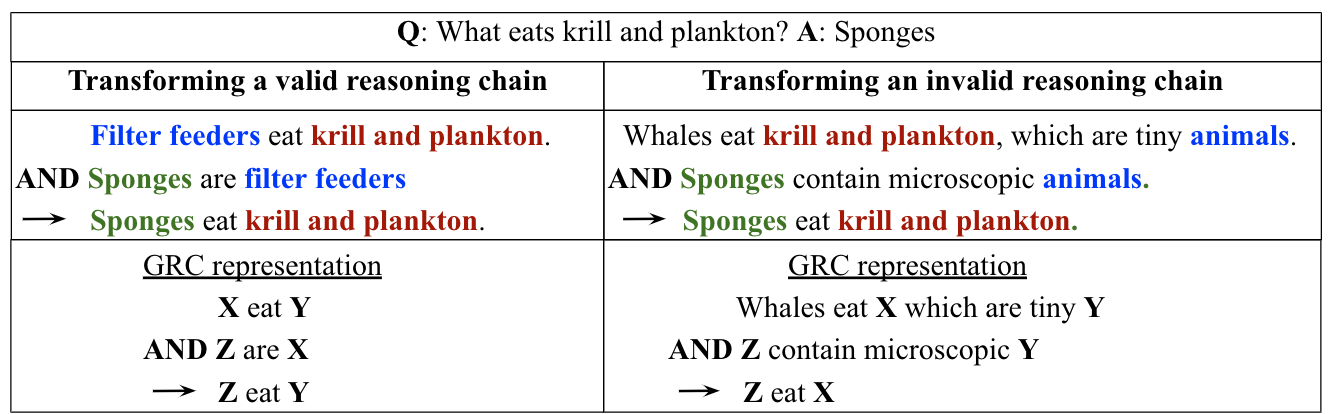

Learning to Explain: Datasets and Models for Identifying Valid Reasoning Chains in Multihop Question-Answering

Harsh Jhamtani, Peter Clark

ProtoQA: A Question Answering Dataset for Prototypical Common-Sense Reasoning

Michael Boratko, Xiang Li, Tim O'Gorman, Rajarshi Das, Dan Le, Andrew McCallum

Generating Fact Checking Briefs

Angela Fan, Aleksandra Piktus, Fabio Petroni, Guillaume Wenzek, Marzieh Saeidi, Andreas Vlachos, Antoine Bordes, Sebastian Riedel