Nurse is Closer to Woman than Surgeon? Mitigating Gender-Biased Proximities in Word Embeddings

Vaibhav Kumar, Tenzin Bhotia, Vaibhav Kumar, Tanmoy Chakraborty

Semantics: Lexical Semantics Tacl Paper

You can open the pre-recorded video in a separate window.

Abstract:

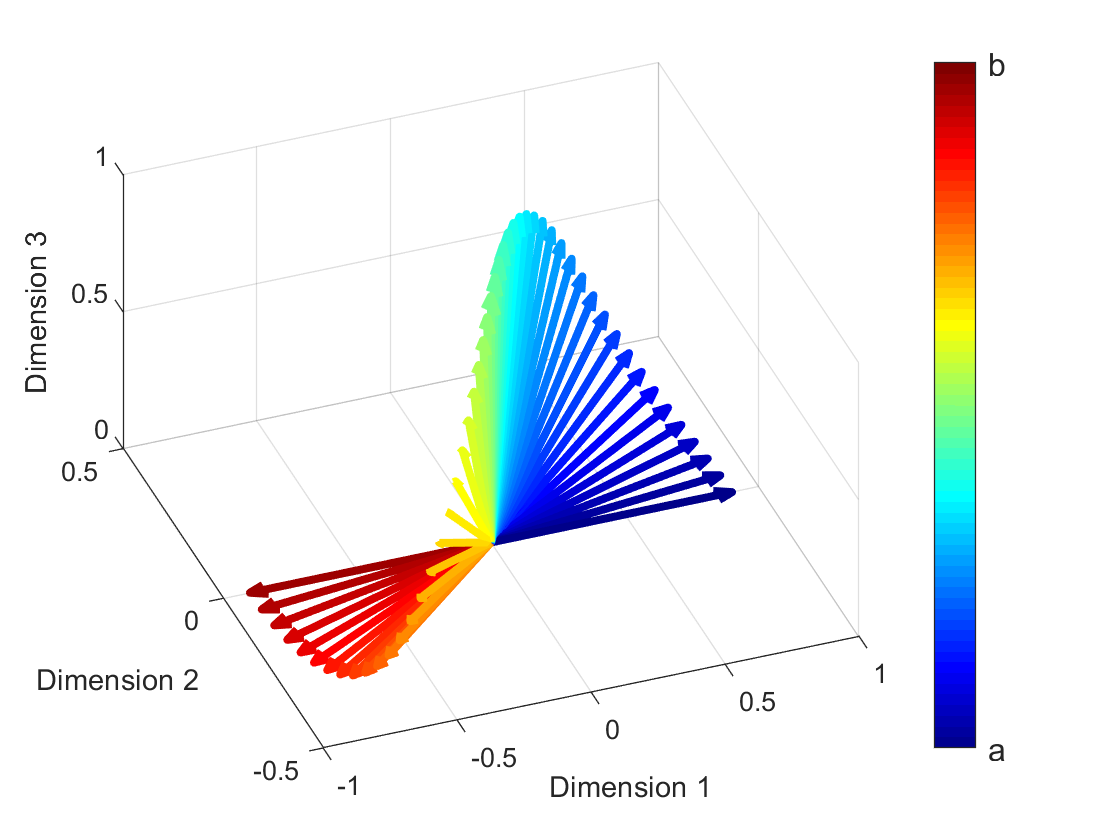

Word embeddings are the standard model for semantic and syntactic representations of words. Unfortunately, these models have been shown to exhibit undesirable word associations resulting from gender, racial, and religious biases. Existing post-processing methods for debiasing word embeddings are unable to mitigate gender bias hidden in the spatial arrangement of word vectors. In this paper, we propose RAN-Debias, a novel gender debiasing methodology which not only eliminates the bias present in a word vector but also alters the spatial distribution of its neighbouring vectors, achieving a bias-free setting while maintaining minimal semantic offset. We also propose a new bias evaluation metric - Gender-based Illicit Proximity Estimate (GIPE), which measures the extent of undue proximity in word vectors resulting from the presence of gender-based predilections. Experiments based on a suite of evaluation metrics show that RAN-Debias significantly outperforms the state-of-the-art in reducing proximity bias (GIPE) by at least 42.02%. It also reduces direct bias, adding minimal semantic disturbance, and achieves the best performance in a downstream application task (coreference resolution).

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Methods for Numeracy-Preserving Word Embeddings

Dhanasekar Sundararaman, Shijing Si, Vivek Subramanian, Guoyin Wang, Devamanyu Hazarika, Lawrence Carin,

Debiasing knowledge graph embeddings

Joseph Fisher, Arpit Mittal, Dave Palfrey, Christos Christodoulopoulos,

Compositional Demographic Word Embeddings

Charles Welch, Jonathan K. Kummerfeld, Verónica Pérez-Rosas, Rada Mihalcea,