Enhancing Aspect Term Extraction with Soft Prototypes

Zhuang Chen, Tieyun Qian

Information Extraction Long Paper

Abstract:

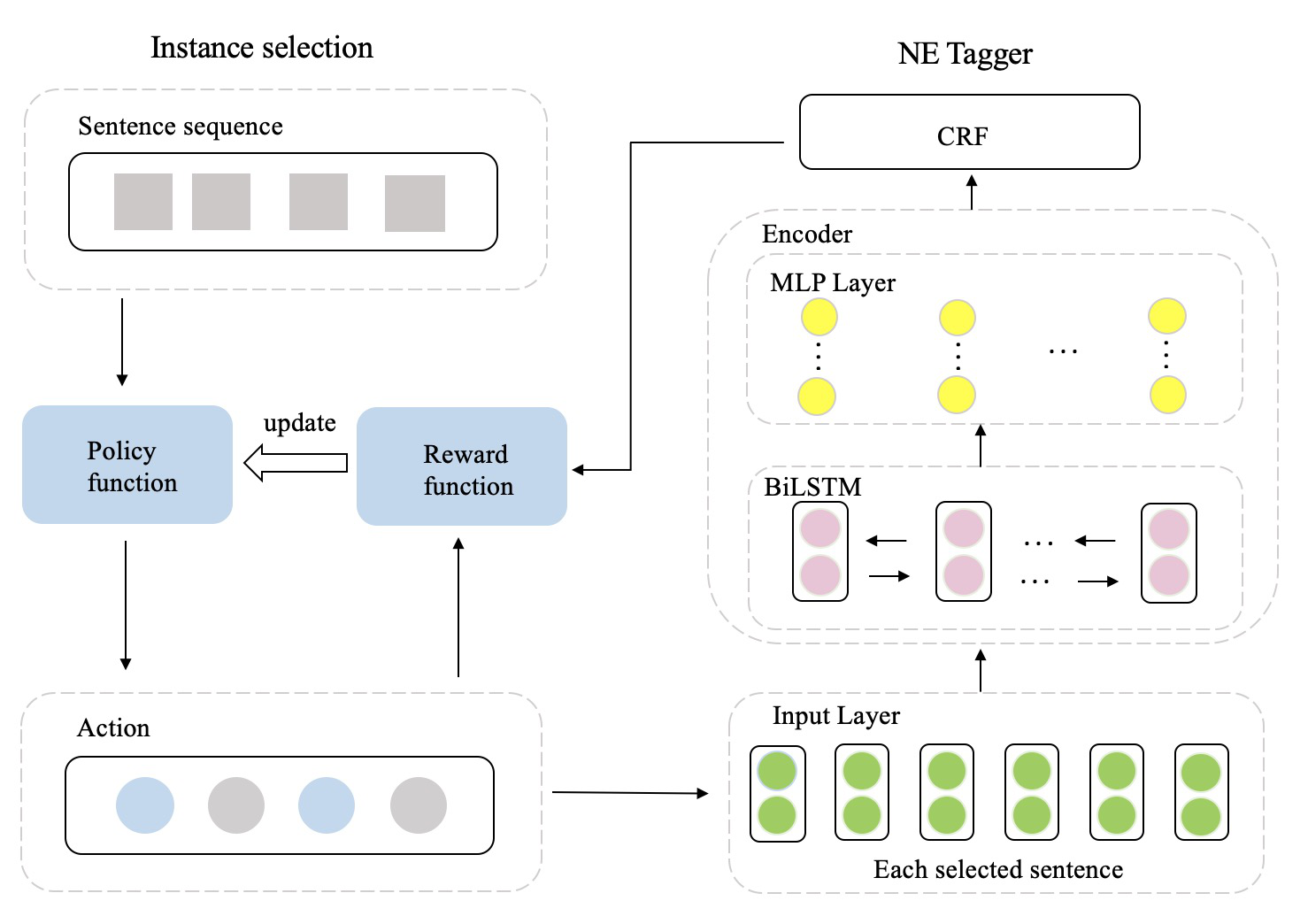

Aspect term extraction (ATE) aims to extract from a review sentence the aspect terms on which users have expressed opinions. Existing studies mostly focus on designing neural sequence taggers that extract linguistic features at the token level. However, because aspect terms and context words usually follow long-tail distributions, these taggers often converge to an inferior state for lack of sufficient sample exposure. In this paper, we propose to tackle this problem by correlating words with each other through soft prototypes. These prototypes, generated by a soft retrieval process, introduce global knowledge from internal or external data and serve as supporting evidence for discovering aspect terms. Our proposed model is a general framework that can be combined with almost all sequence taggers. Experiments on four SemEval datasets show that our model boosts the performance of three typical ATE methods by a large margin.
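The abstract does not spell out how a soft prototype is computed, so the following is only an illustrative sketch of one plausible reading of "soft retrieval": rather than picking a single nearest word, the prototype for a token is a softmax-weighted average of the embeddings of its top-k retrieved candidates. The function name `soft_prototype`, the parameter `k`, and the toy scores and embeddings are all assumptions made for illustration and are not taken from the paper.

```python
import math

def soft_prototype(scores, embeddings, k=2):
    """Softmax-weighted average of the k best-scoring candidate embeddings.

    Hypothetical helper: `scores` are retrieval scores for candidate words,
    `embeddings` are their vectors. Not the authors' implementation.
    """
    # Indices of the k candidates with the highest retrieval score.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores (max subtracted for numerical stability).
    m = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted average, computed dimension by dimension.
    dim = len(embeddings[0])
    return [sum(w * embeddings[i][d] for w, i in zip(weights, top))
            for d in range(dim)]

# Toy data (assumed): three candidate words with 2-d embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
scores = [2.0, 2.0, -1.0]
proto = soft_prototype(scores, embeddings, k=2)
```

In this toy case the two top candidates have equal scores, so the prototype lies halfway between their embeddings. A sequence tagger could then consume such a vector alongside the token's own embedding, which is roughly the sense in which the abstract describes prototypes as supporting evidence.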

Similar Papers

Learning from Context or Names? An Empirical Study on Neural Relation Extraction

Hao Peng, Tianyu Gao, Xu Han, Yankai Lin, Peng Li, Zhiyuan Liu, Maosong Sun, Jie Zhou

Summarizing Text on Any Aspects: A Knowledge-Informed Weakly-Supervised Approach

Bowen Tan, Lianhui Qin, Eric Xing, Zhiting Hu

Modeling Content Importance for Summarization with Pre-trained Language Models

Liqiang Xiao, Lu Wang, Hao He, Yaohui Jin