Word class flexibility: A deep contextualized approach

Bai Li, Guillaume Thomas, Yang Xu, Frank Rudzicz

Linguistic Theories, Cognitive Modeling and Psycholinguistics Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

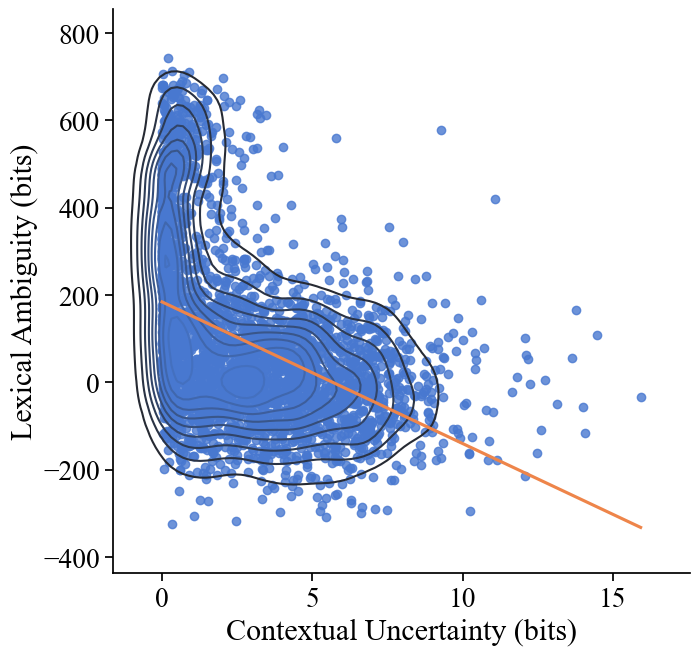

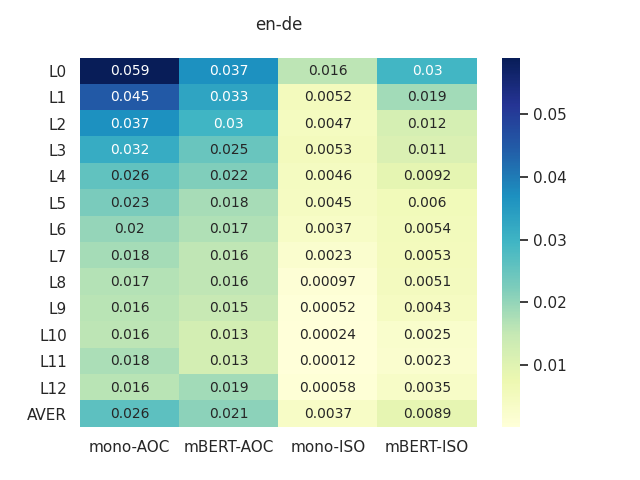

Word class flexibility refers to the phenomenon whereby a single word form is used across different grammatical categories. Extensive work in linguistic typology has sought to characterize word class flexibility across languages, but quantifying this phenomenon accurately and at scale has been fraught with difficulties. We propose a principled methodology to explore regularity in word class flexibility. Our method builds on recent work in contextualized word embeddings to quantify semantic shift between word classes (e.g., noun-to-verb, verb-to-noun), and we apply this method to 37 languages. We find that contextualized embeddings not only capture human judgment of class variation within words in English, but also uncover shared tendencies in class flexibility across languages. Specifically, we find greater semantic variation when flexible lemmas are used in their dominant word class, supporting the view that word class flexibility is a directional process. Our work highlights the utility of deep contextualized models in linguistic typology.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Probing Pretrained Language Models for Lexical Semantics

Ivan Vulić, Edoardo Maria Ponti, Robert Litschko, Goran Glavaš, Anna Korhonen,

Generationary or “How We Went beyond Word Sense Inventories and Learned to Gloss”

Michele Bevilacqua, Marco Maru, Roberto Navigli,

Speakers Fill Lexical Semantic Gaps with Context

Tiago Pimentel, Rowan Hall Maudslay, Damian Blasi, Ryan Cotterell,