Where Are You? Localization from Embodied Dialog

Meera Hahn, Jacob Krantz, Dhruv Batra, Devi Parikh, James Rehg, Stefan Lee, Peter Anderson

Language Grounding to Vision, Robotics and Beyond (Long Paper)

Abstract:

We present WHERE ARE YOU? (WAY), a dataset of ~6k dialogs in which two humans -- an Observer and a Locator -- complete a cooperative localization task. The Observer is spawned at random in a 3D environment and can navigate from first-person views while answering questions from the Locator. The Locator must localize the Observer in a detailed top-down map by asking questions and giving instructions. Based on this dataset, we define three challenging tasks: Localization from Embodied Dialog or LED (localizing the Observer from dialog history), Embodied Visual Dialog (modeling the Observer), and Cooperative Localization (modeling both agents). In this paper, we focus on the LED task -- providing a strong baseline model with detailed ablations characterizing both dataset biases and the importance of various modeling choices. Our best model achieves 32.7% success at identifying the Observer's location within 3m in unseen buildings, vs. 70.4% for human Locators.
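As a rough illustration of the "success within 3m" figure quoted above, the sketch below computes the fraction of episodes whose predicted location falls within a distance threshold of the Observer's true location. It assumes 2D map coordinates in meters, straight-line (Euclidean) distance, and an inclusive threshold; the abstract does not say how distance is measured, and the function name `success_rate` and its arguments are hypothetical, not taken from the paper or its code.

```python
import math


def success_rate(predicted, actual, threshold_m=3.0):
    """Fraction of episodes with a prediction within threshold_m of the truth.

    Assumptions (not from the paper): points are (x, y) tuples in meters,
    distance is Euclidean, and the threshold is inclusive.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not predicted:
        return 0.0
    hits = sum(
        1 for p, a in zip(predicted, actual) if math.dist(p, a) <= threshold_m
    )
    return hits / len(predicted)


# Three toy episodes: errors of 2 m, 10 m, and 0 m.
predicted = [(0.0, 0.0), (10.0, 0.0), (1.0, 1.0)]
actual = [(0.0, 2.0), (0.0, 0.0), (1.0, 1.0)]
rate = success_rate(predicted, actual)  # two of the three episodes succeed
```

If distance were instead measured along walkable paths in the building, only the distance function would change; the thresholding and averaging would stay the same.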

Connected Papers in EMNLP 2020

Similar Papers

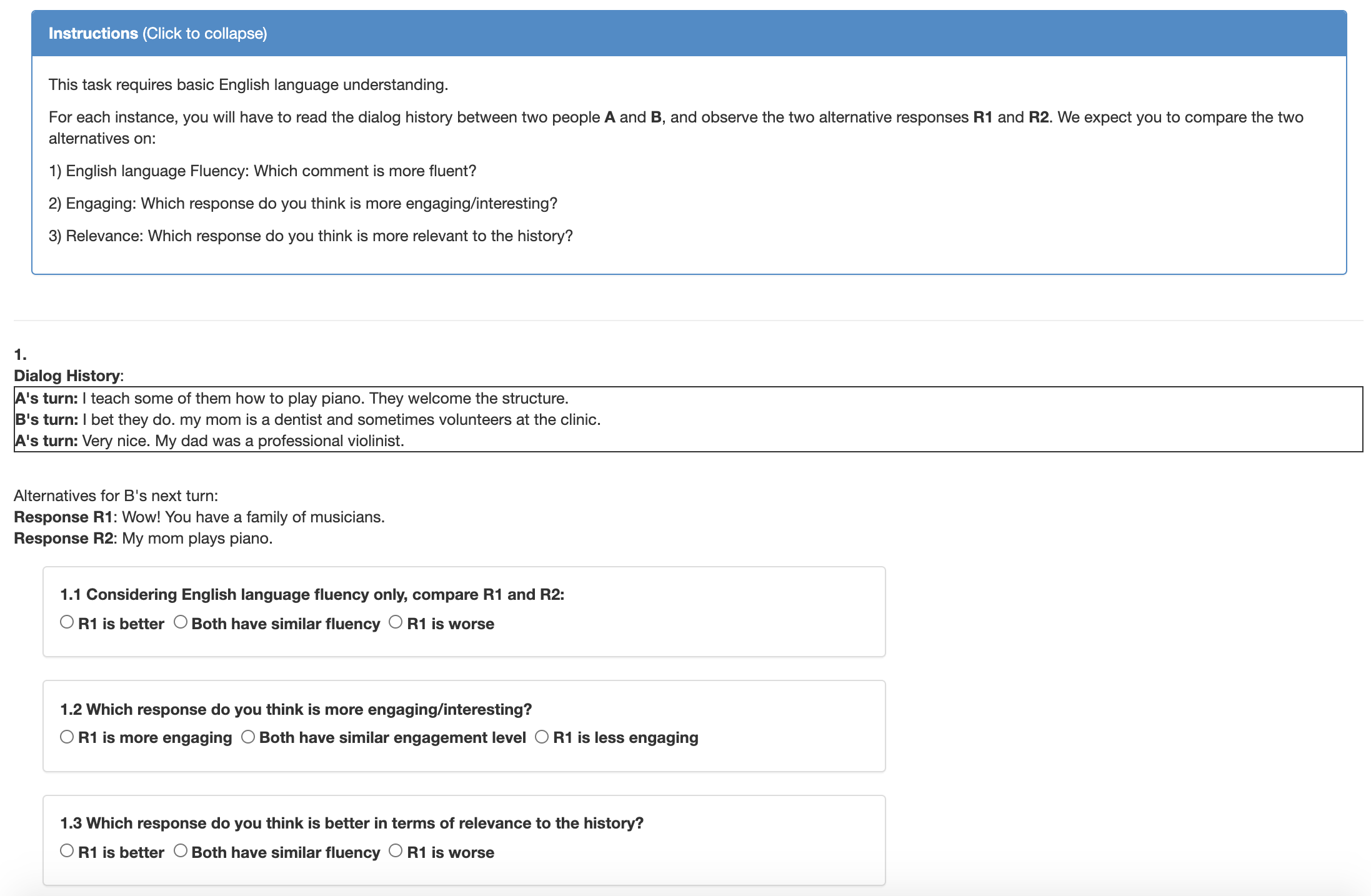

VD-BERT: A Unified Vision and Dialog Transformer with BERT

Yue Wang, Shafiq Joty, Michael Lyu, Irwin King, Caiming Xiong, Steven C.H. Hoi

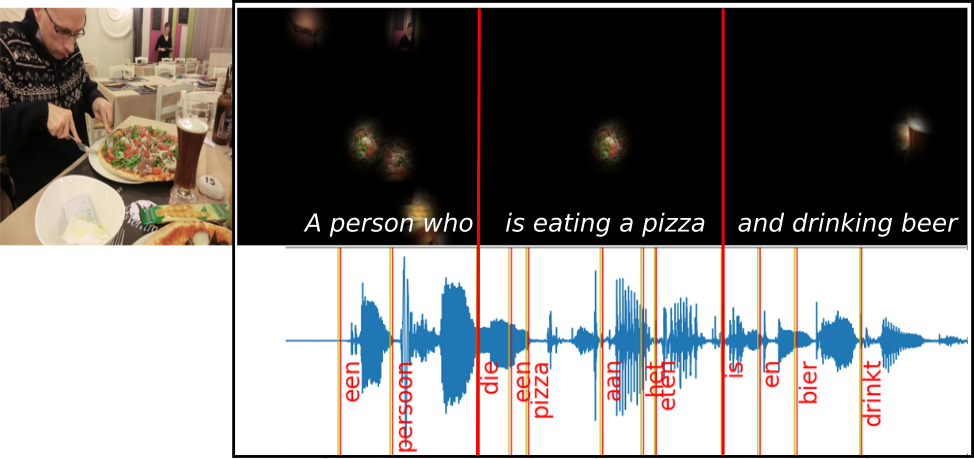

Generating Image Descriptions via Sequential Cross-Modal Alignment Guided by Human Gaze

Ece Takmaz, Sandro Pezzelle, Lisa Beinborn, Raquel Fernández

Like hiking? You probably enjoy nature: Persona-grounded Dialog with Commonsense Expansions

Bodhisattwa Prasad Majumder, Harsh Jhamtani, Taylor Berg-Kirkpatrick, Julian McAuley

Sub-Instruction Aware Vision-and-Language Navigation

Yicong Hong, Cristian Rodriguez, Qi Wu, Stephen Gould