Cross-lingual Spoken Language Understanding with Regularized Representation Alignment

Zihan Liu, Genta Indra Winata, Peng Xu, Zhaojiang Lin, Pascale Fung

Dialog and Interactive Systems Long Paper

Abstract:

Despite the promising results of current cross-lingual models for spoken language understanding, these models still suffer from imperfect cross-lingual representation alignment between the source and target languages, which makes their performance sub-optimal. To address this issue, we propose a regularization approach that further aligns word-level and sentence-level representations across languages without any external resources. First, we regularize the representation of user utterances based on their corresponding labels. Second, we regularize the latent variable model (Liu et al., 2019) by leveraging adversarial training to disentangle the latent variables. Experiments on the cross-lingual spoken language understanding task show that our model outperforms current state-of-the-art methods in both few-shot and zero-shot scenarios. Moreover, our model, trained in a few-shot setting with only 3% of the target-language training data, achieves performance comparable to supervised training with all of the training data.
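
The abstract does not spell out the form of the label-based regularizer. As a purely illustrative sketch, and not the authors' formulation, one way to regularize utterance representations by their labels is to penalize the gap between how similar two utterance representations are and how similar their label representations are. The function names, the use of cosine similarity, and the squared-error penalty below are all assumptions made for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def label_regularization_loss(utterance_reprs, label_reprs):
    """Hypothetical regularizer: mean squared gap between the cosine
    similarity of each utterance pair and that of its label pair.

    utterance_reprs[i] and label_reprs[i] belong to the same example.
    Returns 0.0 when there are fewer than two examples.
    """
    n = len(utterance_reprs)
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            sim_utt = cosine(utterance_reprs[i], utterance_reprs[j])
            sim_lab = cosine(label_reprs[i], label_reprs[j])
            total += (sim_utt - sim_lab) ** 2
            pairs += 1
    return total / pairs if pairs else 0.0


# Two utterances with identical labels but orthogonal representations
# incur the maximum penalty for this pair.
loss = label_regularization_loss(
    [[1.0, 0.0], [0.0, 1.0]],  # utterance representations
    [[1.0, 0.0], [1.0, 0.0]],  # label representations
)
```

In a sketch like this, the penalty would be added to the task loss so that utterances sharing labels are drawn together regardless of language; how the paper actually weights or computes its regularizer is not stated in the abstract.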

Connected Papers in EMNLP2020

Similar Papers

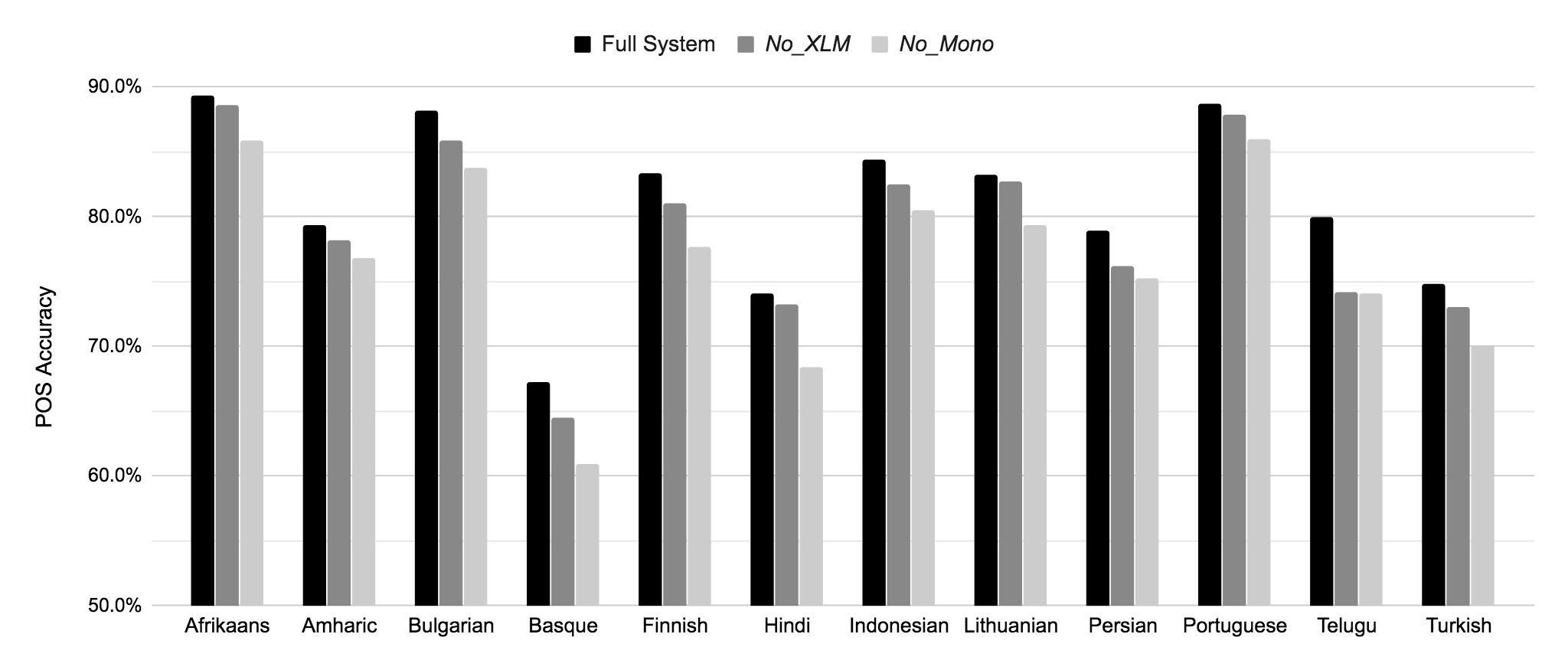

Unsupervised Cross-Lingual Part-of-Speech Tagging for Truly Low-Resource Scenarios

Ramy Eskander, Smaranda Muresan, Michael Collins

SLM: Learning a Discourse Language Representation with Sentence Unshuffling

Haejun Lee, Drew A. Hudson, Kangwook Lee, Christopher D. Manning

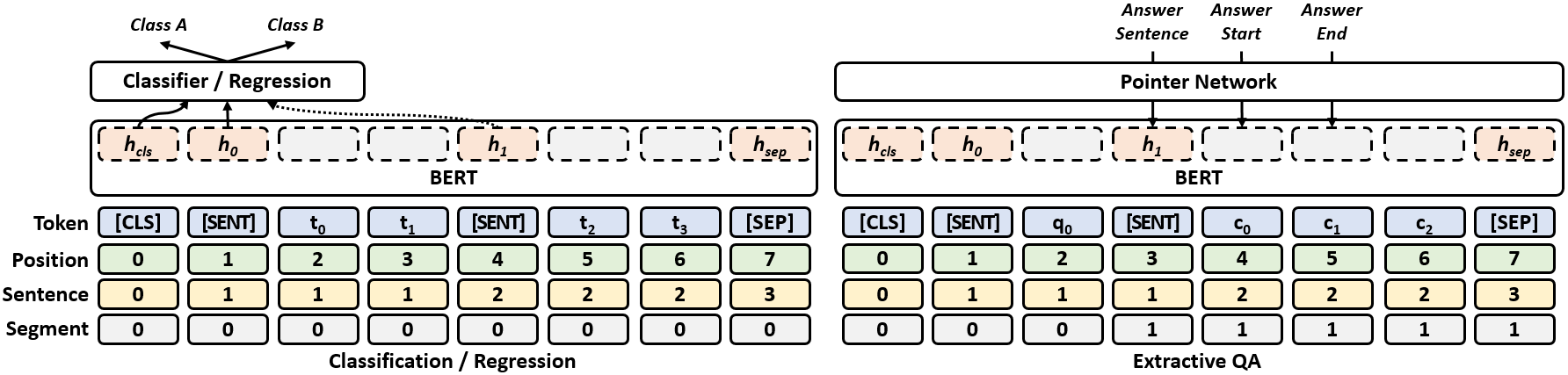

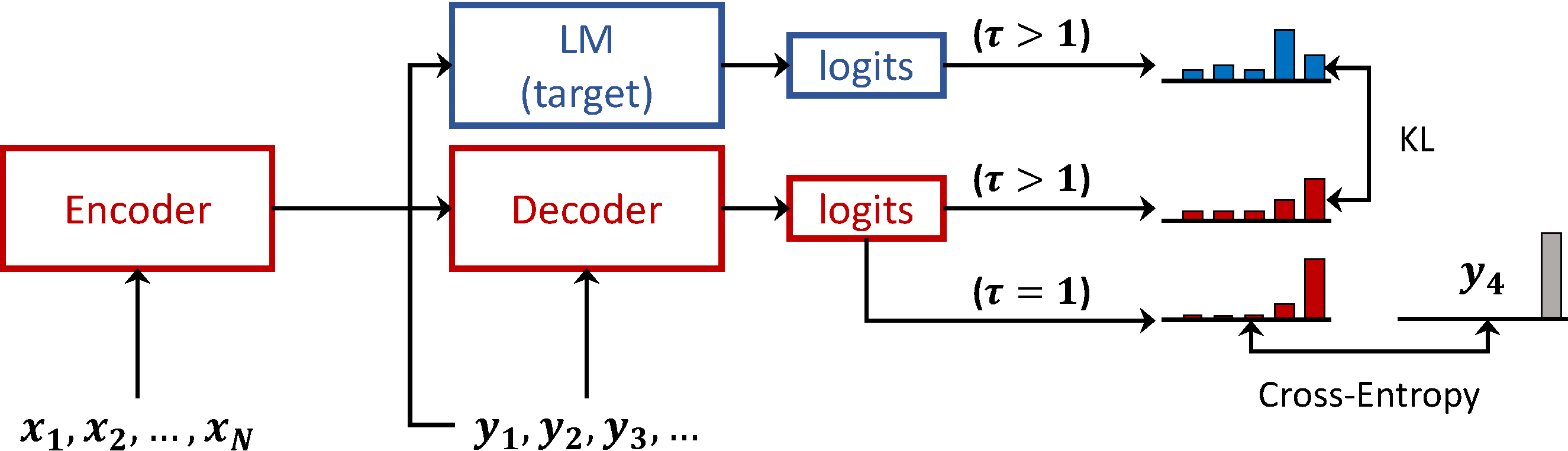

Language Model Prior for Low-Resource Neural Machine Translation

Christos Baziotis, Barry Haddow, Alexandra Birch

Reusing a Pretrained Language Model on Languages with Limited Corpora for Unsupervised NMT

Alexandra Chronopoulou, Dario Stojanovski, Alexander Fraser