Contrastive Distillation on Intermediate Representations for Language Model Compression

Siqi Sun, Zhe Gan, Yuwei Fang, Yu Cheng, Shuohang Wang, Jingjing Liu

Machine Learning for NLP Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

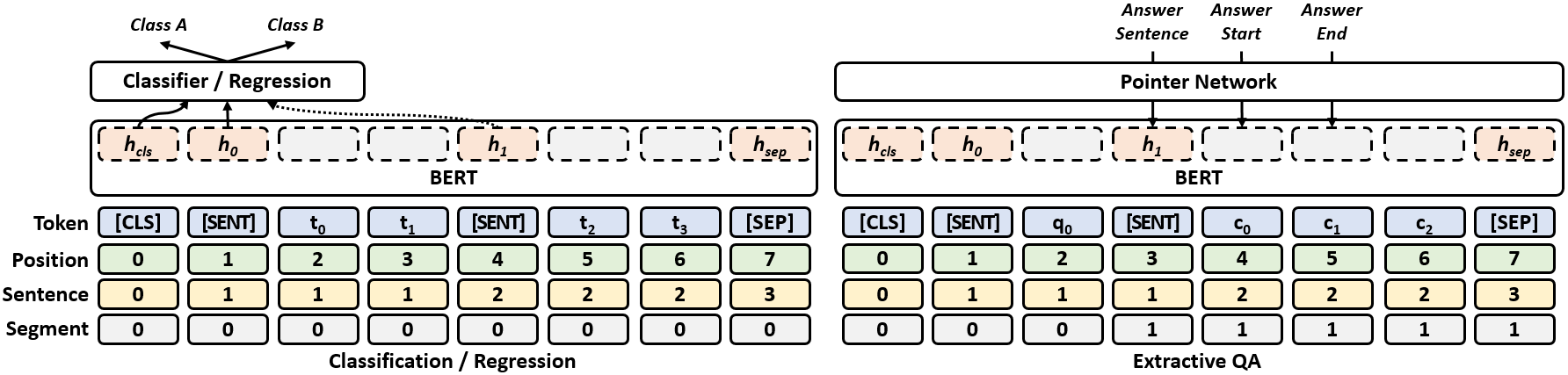

Existing language model compression methods mostly use a simple L_2 loss to distill knowledge in the intermediate representations of a large BERT model to a smaller one. Although widely used, this objective by design assumes that all the dimensions of hidden representations are independent, failing to capture important structural knowledge in the intermediate layers of the teacher network. To achieve better distillation efficacy, we propose Contrastive Distillation on Intermediate Representations (CoDIR), a principled knowledge distillation framework where the student is trained to distill knowledge through intermediate layers of the teacher via a contrastive objective. By learning to distinguish positive sample from a large set of negative samples, CoDIR facilitates the student's exploitation of rich information in teacher's hidden layers. CoDIR can be readily applied to compress large-scale language models in both pre-training and finetuning stages, and achieves superb performance on the GLUE benchmark, outperforming state-of-the-art compression methods.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Syntactic Structure Distillation Pretraining for Bidirectional Encoders

Adhiguna Kuncoro, Lingpeng Kong, Daniel Fried, Dani Yogatama, Laura Rimell, Chris Dyer, Phil Blunsom,

SLM: Learning a Discourse Language Representation with Sentence Unshuffling

Haejun Lee, Drew A. Hudson, Kangwook Lee, Christopher D. Manning,

oLMpics - On what Language Model Pre-training Captures

Alon Talmor, Yanai Elazar, Yoav Goldberg, Jonathan Berant,