Learning Music Helps You Read: Using Transfer to Study Linguistic Structure in Language Models

Isabel Papadimitriou, Dan Jurafsky

Interpretability and Analysis of Models for NLP (Long Paper)


Abstract:

We propose transfer learning as a method for analyzing the encoding of grammatical structure in neural language models. We train LSTMs on non-linguistic data and evaluate their performance on natural language to assess which kinds of data induce generalizable structural features that LSTMs can use for natural language. We find that training on non-linguistic data with latent structure (MIDI music or Java code) improves test performance on natural language, despite no overlap in surface form or vocabulary. To pinpoint the kinds of abstract structure that models may be encoding to lead to this improvement, we run similar experiments with two artificial parentheses languages: one which has a hierarchical recursive structure, and a control which has paired tokens but no recursion. Surprisingly, training a model on either of these artificial languages leads to the same substantial gains when testing on natural language. Further experiments on transfer between natural languages controlling for vocabulary overlap show that zero-shot performance on a test language is highly correlated with typological syntactic similarity to the training language, suggesting that representations induced by pre-training correspond to the cross-linguistic syntactic properties. Our results provide insights into the ways that neural models represent abstract syntactic structure, and also about the kind of structural inductive biases which allow for natural language acquisition.
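To make the contrast between the two artificial parentheses languages concrete, the following is a minimal illustrative sketch, not the authors' data generator. The function names, the `("open", t)` / `("close", t)` token encoding, the uniform sampling of token types, and the 0.5 open/close probability are all assumptions chosen for illustration. The first generator closes only the most recently opened token (hierarchical, recursive nesting); the second closes any pending token, so pairs may cross and no recursion is required.

```python
import random
from collections import Counter


def nested_parens(n_pairs, vocab_size, rng):
    """Hierarchical language: a close always matches the most recent open."""
    seq, stack, opened = [], [], 0
    while opened < n_pairs or stack:
        if opened < n_pairs and (not stack or rng.random() < 0.5):
            t = rng.randrange(vocab_size)  # hypothetical uniform choice of type
            stack.append(t)
            seq.append(("open", t))
            opened += 1
        else:
            seq.append(("close", stack.pop()))  # last opened, first closed
    return seq


def flat_parens(n_pairs, vocab_size, rng):
    """Control language: every open is closed later, but pairs may cross."""
    seq, pending, opened = [], [], 0
    while opened < n_pairs or pending:
        if opened < n_pairs and (not pending or rng.random() < 0.5):
            t = rng.randrange(vocab_size)
            pending.append(t)
            seq.append(("open", t))
            opened += 1
        else:
            # Close an arbitrary pending token, ignoring nesting order.
            seq.append(("close", pending.pop(rng.randrange(len(pending)))))
    return seq


def is_well_nested(seq):
    """True if the sequence obeys stack (last-in, first-out) discipline."""
    stack = []
    for kind, t in seq:
        if kind == "open":
            stack.append(t)
        elif not stack or stack.pop() != t:
            return False
    return not stack


def is_paired(seq):
    """True if each close has an earlier unmatched open of the same type."""
    counts = Counter()
    for kind, t in seq:
        if kind == "open":
            counts[t] += 1
        elif counts[t] == 0:
            return False
        else:
            counts[t] -= 1
    return all(v == 0 for v in counts.values())
```

Under these assumptions, every sequence from `nested_parens` passes `is_well_nested`, while a sequence from `flat_parens` is only guaranteed to pass the weaker `is_paired` check. That difference is the property the control language is meant to isolate: paired dependencies without hierarchical structure.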


Connected Papers in EMNLP2020

Similar Papers

Investigating representations of verb bias in neural language models

Robert Hawkins, Takateru Yamakoshi, Thomas Griffiths, Adele Goldberg

SLM: Learning a Discourse Language Representation with Sentence Unshuffling

Haejun Lee, Drew A. Hudson, Kangwook Lee, Christopher D. Manning

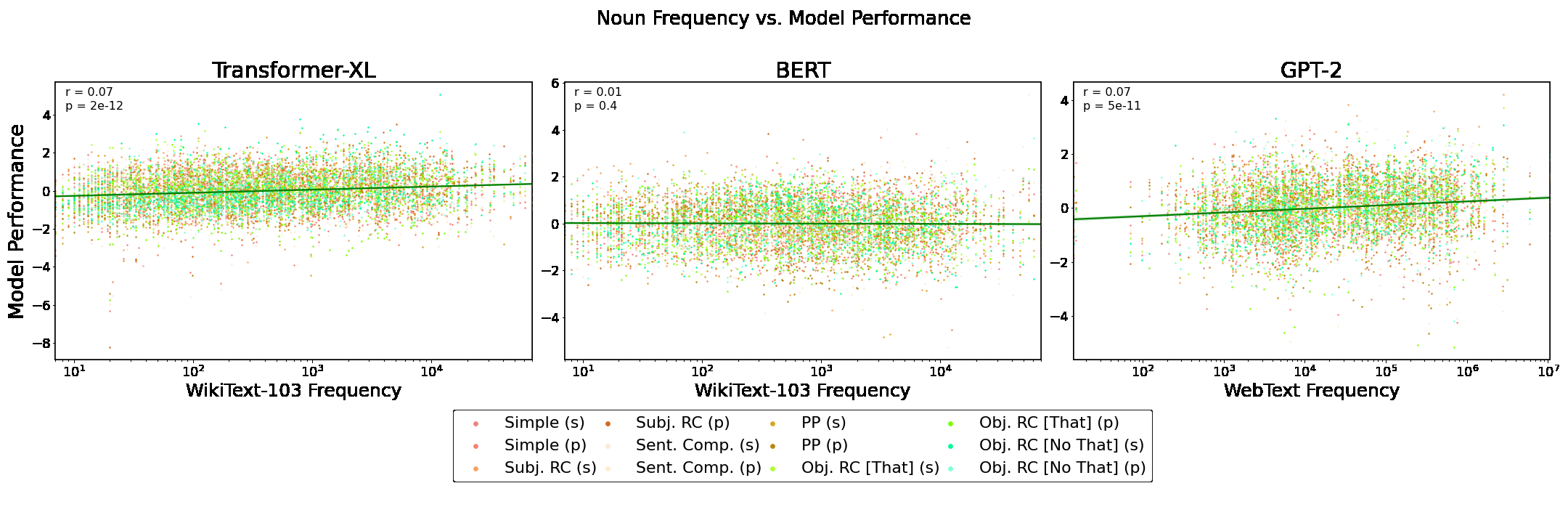

Word Frequency Does Not Predict Grammatical Knowledge in Language Models

Charles Yu, Ryan Sie, Nicolas Tedeschi, Leon Bergen

Reusing a Pretrained Language Model on Languages with Limited Corpora for Unsupervised NMT

Alexandra Chronopoulou, Dario Stojanovski, Alexander Fraser