LOGAN: Local Group Bias Detection by Clustering

Jieyu Zhao, Kai-Wei Chang

Interpretability and Analysis of Models for NLP (Short Paper)

Abstract:

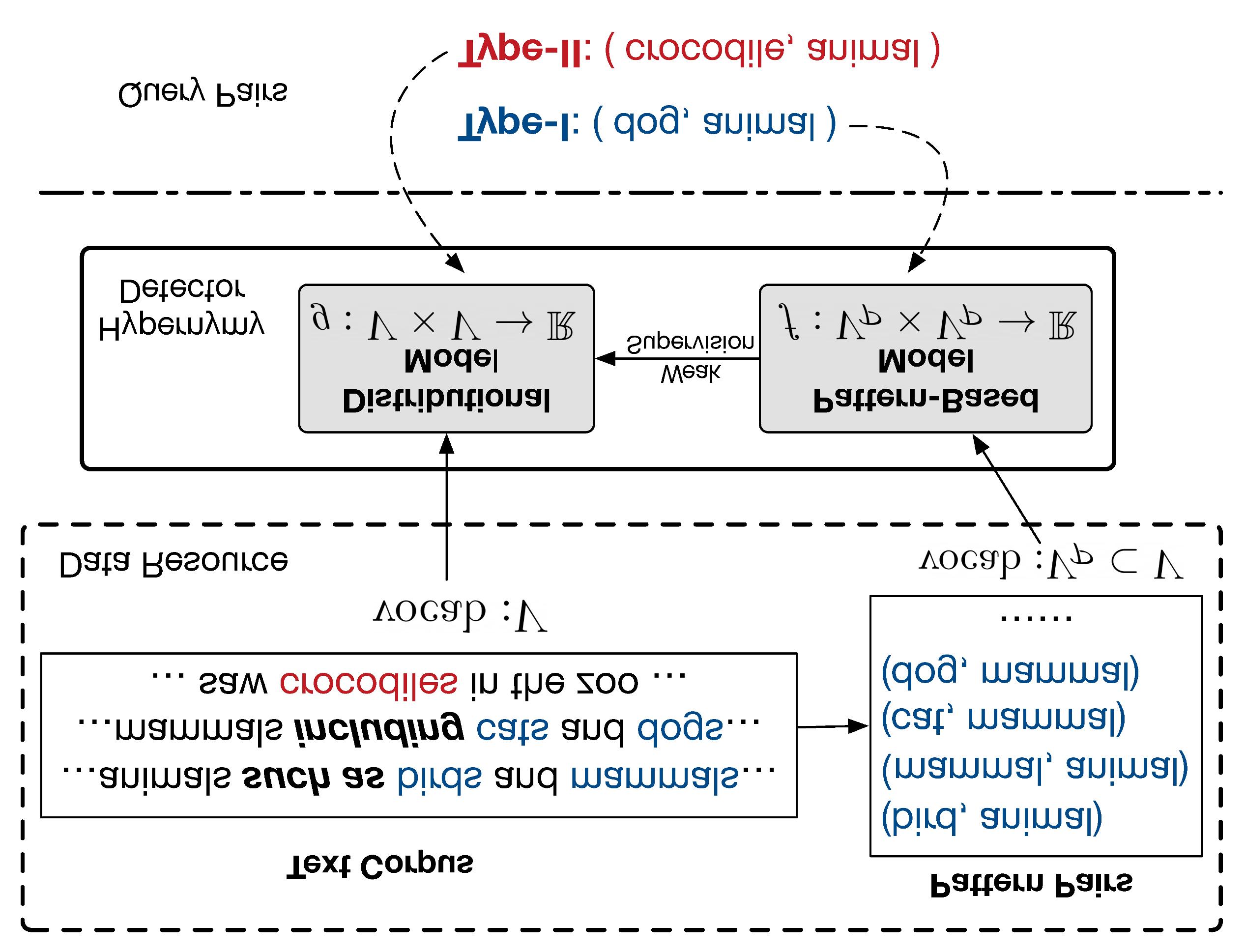

Machine learning techniques have been widely used in natural language processing (NLP). However, as revealed by many recent studies, machine learning models often inherit and amplify the societal biases in data. Various metrics have been proposed to quantify biases in model predictions. In particular, several of them evaluate disparity in model performance between protected groups and advantaged groups in the test corpus. However, we argue that evaluating bias at the corpus level is not enough for understanding how biases are embedded in a model. In fact, a model with similar aggregated performance between different groups on the entire data may behave differently on instances in a local region. To analyze and detect such local bias, we propose LOGAN, a new bias detection technique based on clustering. Experiments on toxicity classification and object classification tasks show that LOGAN identifies bias in a local region and allows us to better analyze the biases in model predictions.
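The idea that similar corpus-level performance can hide local disparities can be illustrated with a small sketch. The abstract does not specify the clustering algorithm or the bias measure, so this example makes assumptions: it uses plain k-means as a stand-in for the clustering step and an accuracy gap between two groups as the disparity measure. The function names (`kmeans`, `accuracy_gap`, `local_gaps`) and the toy data are hypothetical and invented purely for illustration; this is not the authors' implementation.

```python
from collections import defaultdict


def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means (an assumed stand-in for the clustering step).

    Centers are initialised to the first k points so the run is deterministic.
    """
    centers = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest center (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c])))
            for p in points
        ]
        # Move each center to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def accuracy_gap(correct, groups):
    """Accuracy of group 0 minus accuracy of group 1; None if a group is absent."""
    by_group = defaultdict(list)
    for ok, g in zip(correct, groups):
        by_group[g].append(ok)
    if not by_group[0] or not by_group[1]:
        return None
    return sum(by_group[0]) / len(by_group[0]) - sum(by_group[1]) / len(by_group[1])


def local_gaps(points, correct, groups, k):
    """Cluster the instances, then compute the accuracy gap inside each cluster."""
    labels = kmeans(points, k)
    gaps = {}
    for c in range(k):
        idx = [i for i, lab in enumerate(labels) if lab == c]
        gaps[c] = accuracy_gap([correct[i] for i in idx], [groups[i] for i in idx])
    return gaps


# Toy data (invented): two well-separated regions of a 2-D representation space.
points = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [1.0, 0.0],
          [10.0, 11.0], [11.0, 10.0], [1.0, 1.0], [11.0, 11.0]]
groups = [0, 0, 0, 1, 0, 1, 1, 1]   # group membership of each instance
correct = [1, 0, 1, 0, 0, 1, 0, 1]  # 1 if the model's prediction was right

global_gap = accuracy_gap(correct, groups)
gaps = local_gaps(points, correct, groups, k=2)
print(global_gap)  # 0.0: both groups have 50% accuracy on the whole set
print(gaps)        # {0: 1.0, 1: -1.0}: opposite, large gaps in each region
```

In this toy setting the corpus-level gap is zero, yet each cluster shows a maximal gap in opposite directions, which is the kind of local disparity an aggregate metric would miss.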


Connected Papers in EMNLP 2020

Similar Papers

When Hearst Is not Enough: Improving Hypernymy Detection from Corpus with Distributional Models

Changlong Yu, Jialong Han, Peifeng Wang, Yangqiu Song, Hongming Zhang, Wilfred Ng, Shuming Shi

CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked Language Models

Nikita Nangia, Clara Vania, Rasika Bhalerao, Samuel R. Bowman

Intrinsic Probing through Dimension Selection

Lucas Torroba Hennigen, Adina Williams, Ryan Cotterell

Cold-start Active Learning through Self-supervised Language Modeling

Michelle Yuan, Hsuan-Tien Lin, Jordan Boyd-Graber