Which *BERT? A Survey Organizing Contextualized Encoders

Patrick Xia, Shijie Wu, Benjamin Van Durme

NLP Applications Long Paper

Abstract:

Pretrained contextualized text encoders are now a staple of the NLP community. We present a survey on language representation learning with the aim of consolidating a series of shared lessons learned across a variety of recent efforts. While significant advancements continue at a rapid pace, we find that enough has now been discovered, in different directions, that we can begin to organize advances according to common themes. Through this organization, we highlight important considerations when interpreting recent contributions and choosing which model to use.


Connected Papers in EMNLP2020

Similar Papers

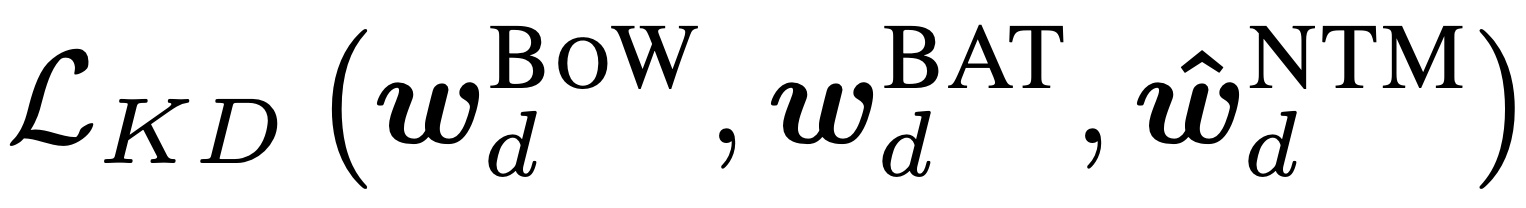

Improving Neural Topic Models using Knowledge Distillation

Alexander Miserlis Hoyle, Pranav Goel, Philip Resnik

Incremental Neural Coreference Resolution in Constant Memory

Patrick Xia, João Sedoc, Benjamin Van Durme

Intrinsic Probing through Dimension Selection

Lucas Torroba Hennigen, Adina Williams, Ryan Cotterell

Multi-Stage Pre-training for Low-Resource Domain Adaptation

Rong Zhang, Revanth Gangi Reddy, Md Arafat Sultan, Vittorio Castelli, Anthony Ferritto, Radu Florian, Efsun Sarioglu Kayi, Salim Roukos, Avi Sil, Todd Ward