VolTAGE: Volatility Forecasting via Text Audio Fusion with Graph Convolution Networks for Earnings Calls

Ramit Sawhney, Piyush Khanna, Arshiya Aggarwal, Taru Jain, Puneet Mathur, Rajiv Ratn Shah

Speech and Multimodality Long Paper

Abstract:

Recent advances in natural language processing have improved stock movement and volatility forecasting. Transcripts of companies' earnings calls are well studied for risk modeling, offering unique investment insight into stock performance. However, vocal cues in the speech of company executives remain a rich but underexplored source of natural language data for estimating financial risk. Additionally, most existing approaches ignore the correlations between stocks. Building on existing work, we introduce a neural model for stock volatility prediction that accounts for stock interdependence via graph convolutions while fusing verbal, vocal, and financial features in a semi-supervised multi-task risk forecasting formulation. Our proposed model, VolTAGE, outperforms existing methods, demonstrating the effectiveness of multimodal learning for volatility prediction.
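The abstract names the model's ingredients only at a high level. As a rough illustration, here is a minimal stdlib-only Python sketch of three of them: a volatility target, per-stock fusion of text, audio, and financial features, and one graph-convolution step over a stock graph. Everything here is an assumption, not the authors' implementation. The target is taken to be the log of the standard deviation of daily returns over a window (a formulation common in earnings-call risk studies, not confirmed here for VolTAGE). The modalities are fused by plain concatenation. The graph step uses the symmetric-normalized propagation rule of Kipf and Welling's GCN. The example adjacency matrix and all function names (`daily_returns`, `log_volatility`, `fuse_features`, `normalized_adjacency`, `gcn_layer`) are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def daily_returns(prices: List[float]) -> List[float]:
    """Simple daily returns r_t = p_t / p_{t-1} - 1."""
    return [p / prev - 1.0 for prev, p in zip(prices, prices[1:])]


def log_volatility(returns: List[float]) -> float:
    """Assumed target: log of the population standard deviation of returns.

    Undefined (math domain error) if all returns are identical.
    """
    mean = sum(returns) / len(returns)
    variance = sum((r - mean) ** 2 for r in returns) / len(returns)
    return math.log(math.sqrt(variance))


def fuse_features(text: Matrix, audio: Matrix, financial: Matrix) -> Matrix:
    """Per-stock early fusion by concatenation (a stand-in, not the paper's fusion)."""
    return [t + a + f for t, a, f in zip(text, audio, financial)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain list-of-lists matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """D^{-1/2} (A + I) D^{-1/2}: add self-loops, then normalize by degree.

    Assumes non-negative edge weights, so every degree is at least 1.
    """
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution, ReLU(A_norm @ H @ W): each stock mixes in its neighbours' features."""
    out = matmul(matmul(normalized_adjacency(adj), h), w)
    return [[max(0.0, x) for x in row] for row in out]


if __name__ == "__main__":
    # Hypothetical 3-stock graph: stocks 0 and 1 are linked (e.g. same sector), stock 2 is isolated.
    adj = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    x = fuse_features([[0.2], [0.4], [0.1]], [[0.5], [0.1], [0.3]], [[1.0], [0.0], [0.5]])
    w = [[1.0], [1.0], [1.0]]  # toy 3 -> 1 projection
    print(gcn_layer(adj, x, w))
    print(log_volatility(daily_returns([100.0, 102.0, 99.0, 101.0])))
```

Stacking several such layers would let information spread beyond direct neighbours. A regression head on the final per-stock embeddings would then predict each stock's volatility. A real model would use a tensor library and learned weights rather than list-based matrices.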

Connected Papers in EMNLP2020

Similar Papers

Public Sentiment Drift Analysis Based on Hierarchical Variational Auto-encoder

Wenyue Zhang, Xiaoli Li, Yang Li, Suge Wang, Deyu Li, Jian Liao, Jianxing Zheng

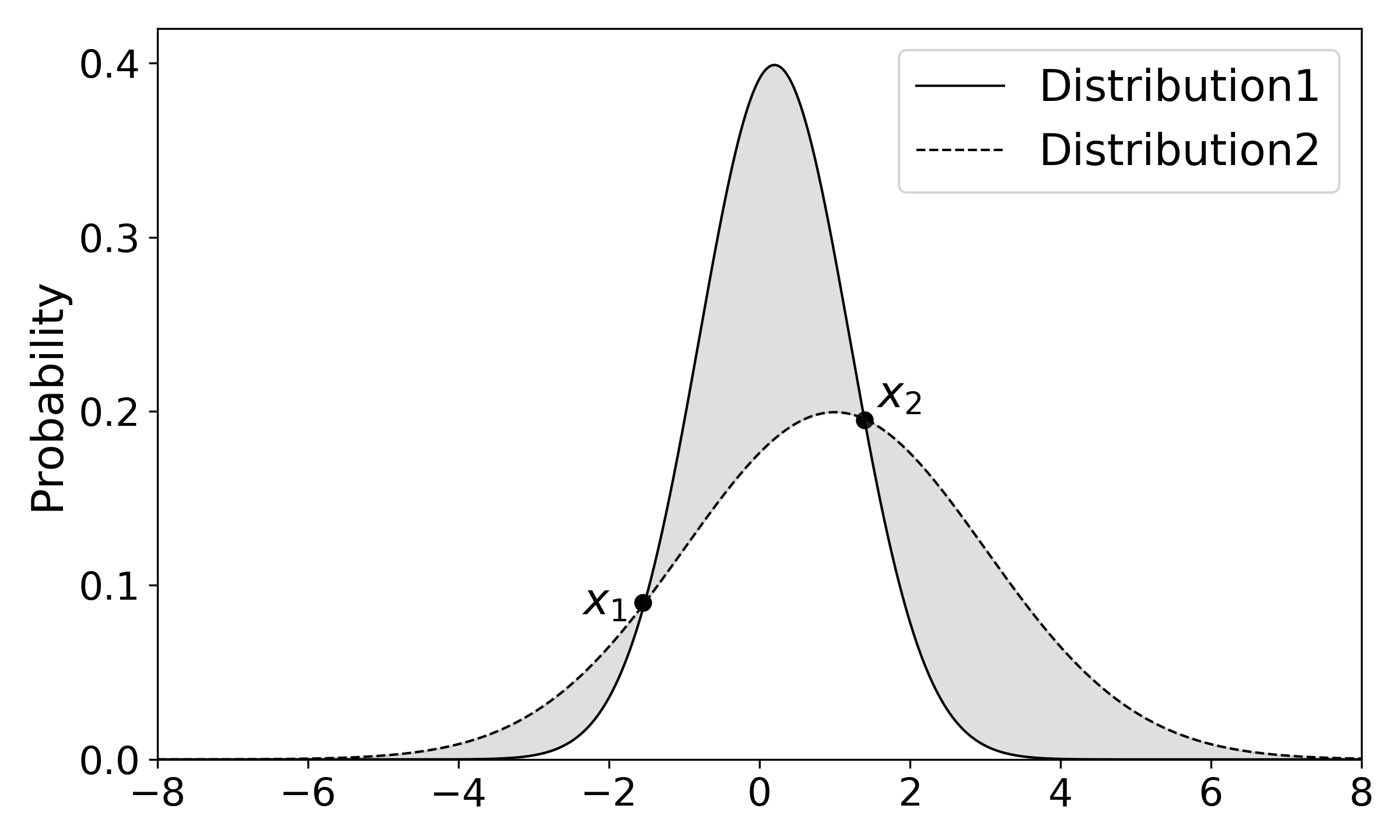

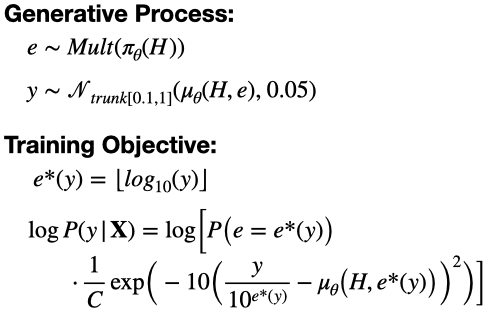

An Empirical Investigation of Contextualized Number Prediction

Taylor Berg-Kirkpatrick, Daniel Spokoyny

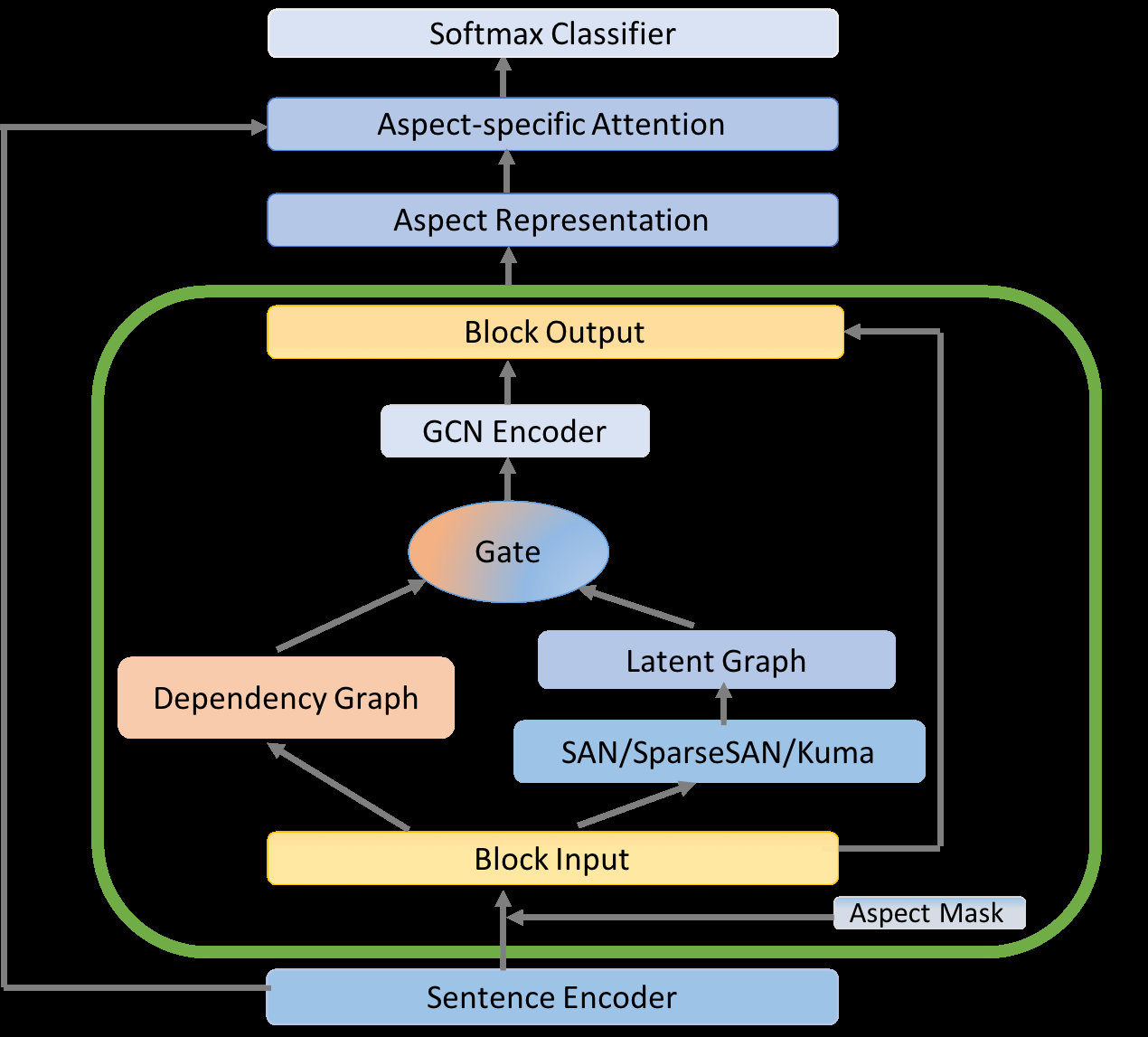

Inducing Target-Specific Latent Structures for Aspect Sentiment Classification

Chenhua Chen, Zhiyang Teng, Yue Zhang