Widget Captioning: Generating Natural Language Description for Mobile User Interface Elements

Yang Li, Gang Li, Luheng He, Jingjie Zheng, Hong Li, Zhiwei Guan

Speech and Multimodality Long Paper

Abstract:

Natural language descriptions of user interface (UI) elements, such as alternative text, are crucial for accessibility and for language-based interaction in general. Yet these descriptions are frequently missing in mobile UIs. We propose widget captioning, a novel task for automatically generating language descriptions for UI elements from multimodal input, including both the image and the structural representations of user interfaces. We collected a large-scale dataset for widget captioning with crowdsourcing. Our dataset contains 162,860 language phrases created by human workers for annotating 61,285 UI elements across 21,750 unique UI screens. We thoroughly analyze the dataset, and train and evaluate a set of deep model configurations to investigate how each feature modality, as well as the choice of learning strategies, impacts the quality of predicted captions. The task formulation, the dataset, and our benchmark models contribute a solid basis for this novel multimodal captioning task that connects language and user interfaces.

Connected Papers in EMNLP2020

Similar Papers

STORIUM: A Dataset and Evaluation Platform for Machine-in-the-Loop Story Generation

Nader Akoury, Shufan Wang, Josh Whiting, Stephen Hood, Nanyun Peng, Mohit Iyyer

Sound Natural: Content Rephrasing in Dialog Systems

Arash Einolghozati, Anchit Gupta, Keith Diedrick, Sonal Gupta

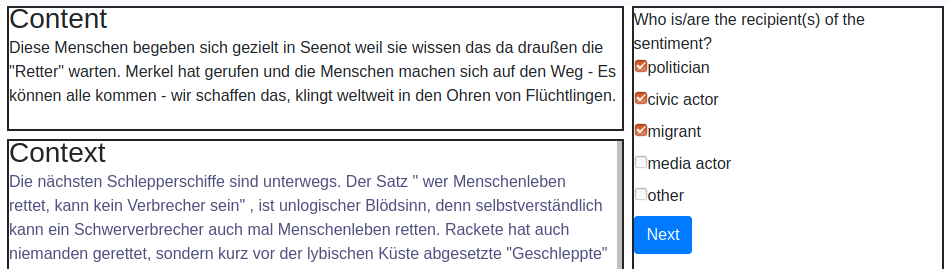

HUMAN: Hierarchical Universal Modular ANnotator

Moritz Wolf, Dana Ruiter, Ashwin Geet D'Sa, Liane Reiners, Jan Alexandersson, Dietrich Klakow