Word Rotator's Distance

Sho Yokoi, Ryo Takahashi, Reina Akama, Jun Suzuki, Kentaro Inui

Machine Learning for NLP Long Paper

Abstract:

One key principle for assessing textual similarity is measuring the degree of semantic overlap between texts by considering the word alignment. Such alignment-based approaches are both intuitive and interpretable; however, they are empirically inferior to the simple cosine similarity between general-purpose sentence vectors. We focus on the fact that the norm of a word vector is a good proxy for word importance, and the angle between word vectors is a good proxy for word similarity. However, alignment-based approaches do not distinguish the norm from the direction, whereas sentence-vector approaches automatically use the norm as the word importance. Accordingly, we propose decoupling word vectors into their norm and direction and then computing the alignment-based similarity with the help of earth mover's distance (optimal transport), which we refer to as word rotator's distance. Furthermore, we demonstrate how to grow the norm and direction of word vectors (vector converter); this is a new systematic approach derived from the sentence-vector estimation methods, which can significantly improve the performance of the proposed method. On several STS benchmarks, the proposed methods outperform not only alignment-based approaches but also strong baselines. The source code is available at https://github.com/eumesy/wrd
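The decoupling idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation (see the repository linked above for that), and it rests on several assumptions: each word's transport mass is its vector norm divided by the sum of norms in the sentence, the transport cost between two words is the cosine distance between their directions, and all word vectors are nonzero. The function name `word_rotators_distance` and the use of SciPy's general-purpose `linprog` solver for the optimal transport problem are choices made here for illustration only.

```python
import numpy as np
from scipy.optimize import linprog


def word_rotators_distance(X, Y):
    """Illustrative sketch: earth mover's distance between two sentences.

    X: (n, d) array of word vectors for sentence 1 (assumed nonzero).
    Y: (m, d) array of word vectors for sentence 2 (assumed nonzero).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)

    # Decouple each word vector into its norm and its direction.
    x_norm = np.linalg.norm(X, axis=1)
    y_norm = np.linalg.norm(Y, axis=1)
    x_dir = X / x_norm[:, None]
    y_dir = Y / y_norm[:, None]

    # Assumption: norm acts as word importance, so it becomes the mass.
    a = x_norm / x_norm.sum()
    b = y_norm / y_norm.sum()

    # Assumption: angle acts as word dissimilarity, via cosine distance.
    C = 1.0 - x_dir @ y_dir.T
    n, m = C.shape

    # Optimal transport as a linear program over the flattened plan T:
    # row sums of T equal a, column sums of T equal b, T >= 0.
    A_eq = np.zeros((n + m, n * m))
    for i in range(n):
        A_eq[i, i * m:(i + 1) * m] = 1.0
    for j in range(m):
        A_eq[n + j, j::m] = 1.0
    b_eq = np.concatenate([a, b])

    res = linprog(C.ravel(), A_eq=A_eq, b_eq=b_eq,
                  bounds=(0, None), method="highs")
    return res.fun


# Toy 2-d "word vectors"; real use would look them up in an embedding table.
s1 = [[1.0, 0.0], [0.0, 1.0]]
s2 = [[1.0, 0.0]]
print(word_rotators_distance(s1, s2))
```

In this toy case the second word of `s1` is orthogonal to the only word of `s2` and carries half of the mass, so half of the mass must be moved at cost 1. A dense linear program scales poorly with sentence length; a dedicated optimal transport solver would be the more practical choice beyond small examples.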

Similar Papers

Don't Neglect the Obvious: On the Role of Unambiguous Words in Word Sense Disambiguation

Daniel Loureiro, Jose Camacho-Collados

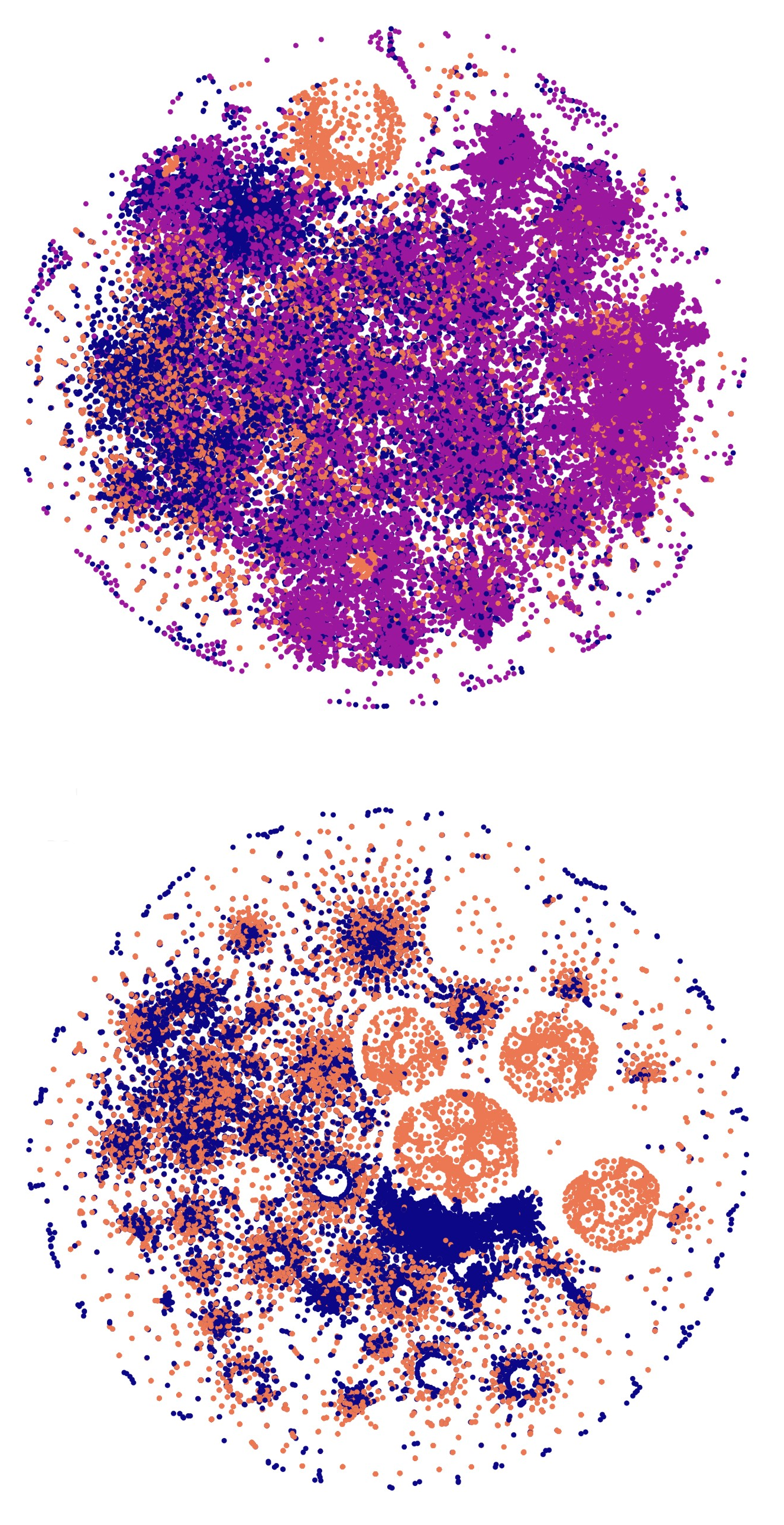

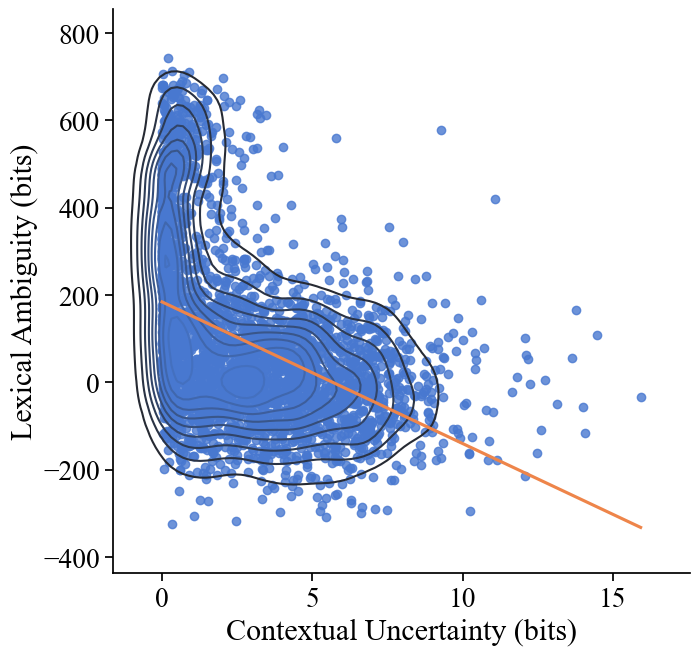

Speakers Fill Lexical Semantic Gaps with Context

Tiago Pimentel, Rowan Hall Maudslay, Damian Blasi, Ryan Cotterell

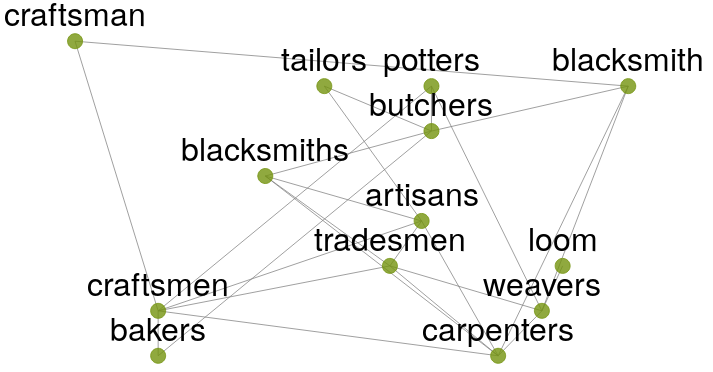

Embedding Words in Non-Vector Space with Unsupervised Graph Learning

Max Ryabinin, Sergei Popov, Liudmila Prokhorenkova, Elena Voita