Are All Good Word Vector Spaces Isomorphic?

Ivan Vulić, Sebastian Ruder, Anders Søgaard

Interpretability and Analysis of Models for NLP (Long Paper)

Abstract:

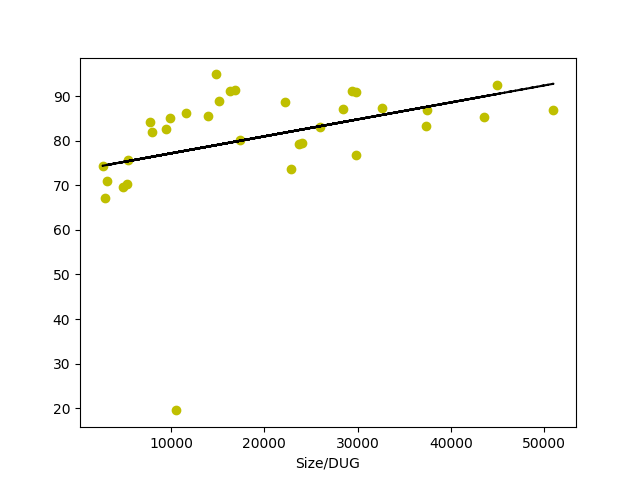

Existing algorithms for aligning cross-lingual word vector spaces assume that vector spaces are approximately isomorphic. As a result, they perform poorly or fail completely on non-isomorphic spaces. Such non-isomorphism has been hypothesised to result from typological differences between languages. In this work, we ask whether non-isomorphism is also crucially a sign of degenerate word vector spaces. We present a series of experiments across diverse languages which show that variance in performance across language pairs is not only due to typological differences, but can mostly be attributed to the size of the monolingual resources available, and to the properties and duration of monolingual training (e.g. "under-training").

Connected Papers in EMNLP2020

Similar Papers

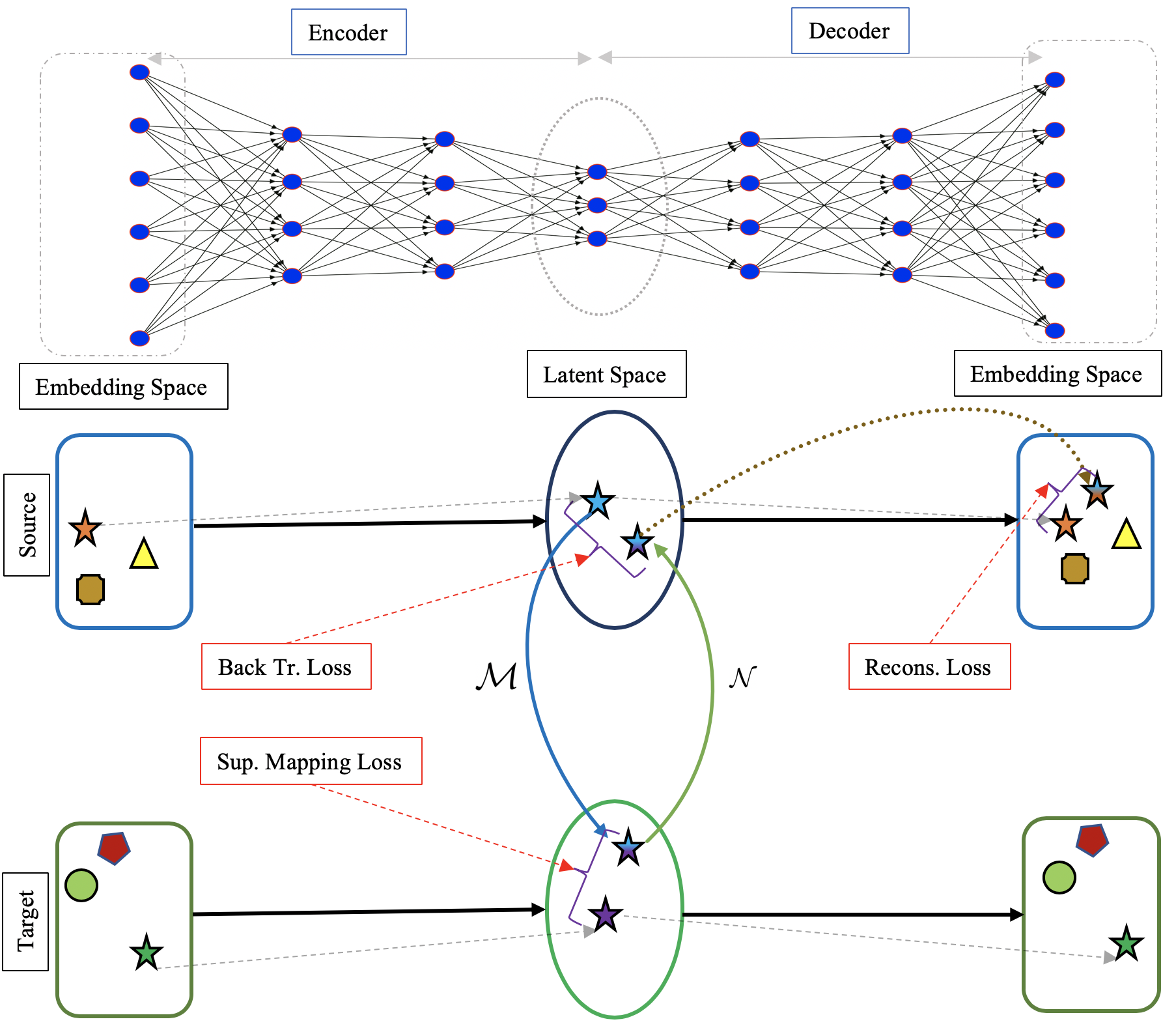

LNMap: Departures from Isomorphic Assumption in Bilingual Lexicon Induction Through Non-Linear Mapping in Latent Space

Tasnim Mohiuddin, M Saiful Bari, Shafiq Joty

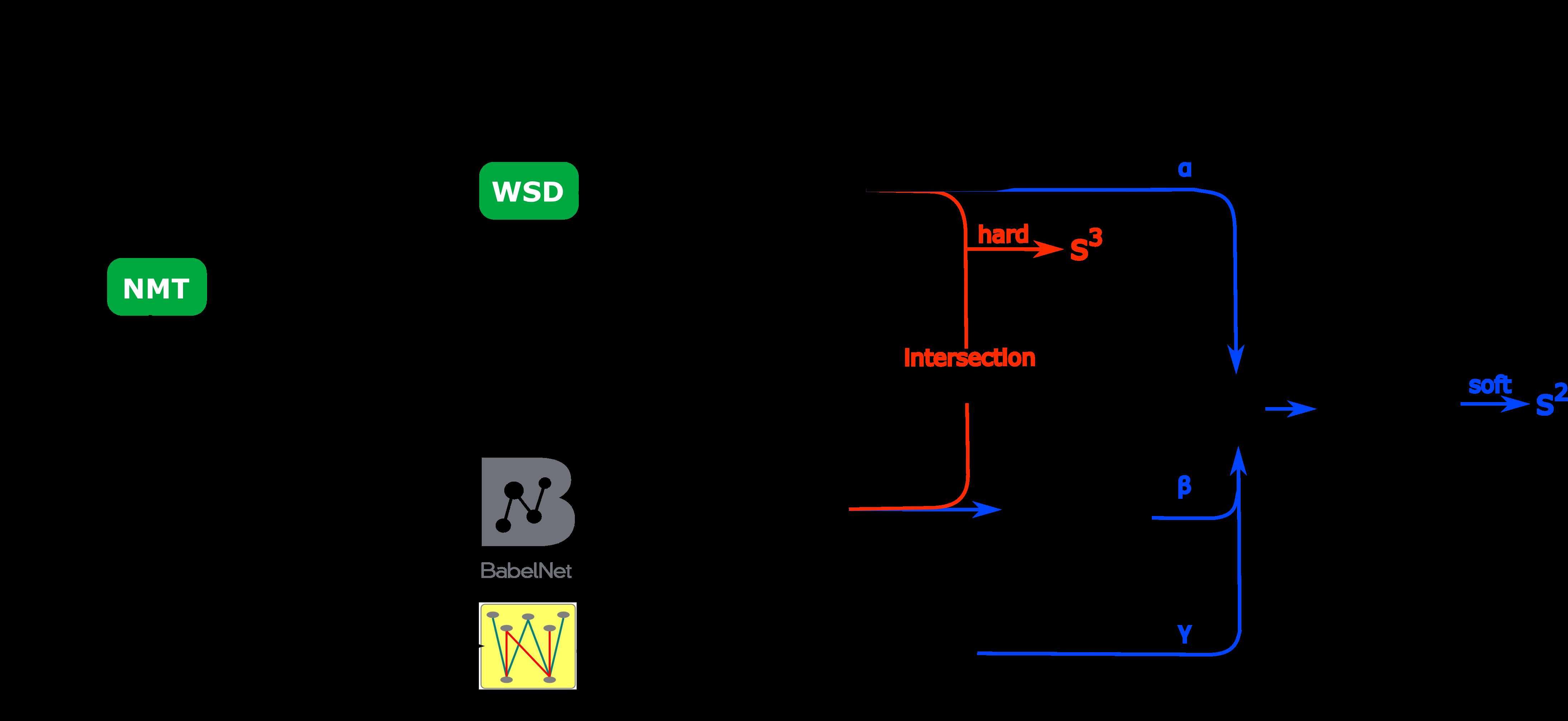

Improving Word Sense Disambiguation with Translations

Yixing Luan, Bradley Hauer, Lili Mou, Grzegorz Kondrak

A Simple Approach to Learning Unsupervised Multilingual Embeddings

Pratik Jawanpuria, Mayank Meghwanshi, Bamdev Mishra