Improving Grammatical Error Correction Models with Purpose-Built Adversarial Examples

Lihao Wang, Xiaoqing Zheng

Machine Learning for NLP Long Paper


Abstract:

Sequence-to-sequence (seq2seq) learning with neural networks has been shown empirically to be an effective framework for grammatical error correction (GEC), which takes a sentence with errors as input and outputs the corrected one. However, the performance of seq2seq GEC models relies heavily on the size and quality of the available corpus. We propose a method, inspired by adversarial training, that generates more meaningful and valuable training examples by continually identifying a model's weak spots, and that enhances the model by gradually adding the generated adversarial examples to the training set. Extensive experimental results show that such adversarial training can improve both the generalization and robustness of GEC models.
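The loop described in the abstract (find inputs the current model fails on, add them to the training set, retrain) can be illustrated with a toy sketch. This is not the authors' implementation: the token-lookup "model", the `CONFUSIONS` table, and the functions `train`, `correct`, `perturb`, and `adversarial_training` are all hypothetical stand-ins chosen so the example runs without any dependencies. A real system would use a seq2seq model and a far more careful way of generating adversarial examples.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (erroneous sentence, corrected sentence)

# Hypothetical confusion sets (an assumption for illustration only):
# correct token -> plausible erroneous substitutes.
CONFUSIONS: Dict[str, List[str]] = {
    "their": ["there", "thier"],
    "has": ["have"],
    "an": ["a"],
}


def train(pairs: List[Pair]) -> Dict[str, str]:
    """Toy stand-in for model training: memorise token substitutions."""
    table: Dict[str, str] = {}
    for src, tgt in pairs:
        # Pairs are assumed to be token-aligned (same length).
        for s, t in zip(src.split(), tgt.split()):
            if s != t:
                table[s] = t
    return table


def correct(model: Dict[str, str], sentence: str) -> str:
    """Apply the memorised substitutions token by token."""
    return " ".join(model.get(tok, tok) for tok in sentence.split())


def perturb(clean: str) -> List[str]:
    """Create candidates by swapping one token for a confusable one."""
    toks = clean.split()
    candidates = []
    for i, tok in enumerate(toks):
        for wrong in CONFUSIONS.get(tok, []):
            candidates.append(" ".join(toks[:i] + [wrong] + toks[i + 1:]))
    return candidates


def adversarial_training(
    seed_pairs: List[Pair], clean_sentences: List[str], rounds: int = 3
) -> Tuple[Dict[str, str], List[Pair]]:
    """Repeatedly add examples the current model gets wrong, then retrain."""
    train_set = list(seed_pairs)
    model = train(train_set)
    for _ in range(rounds):
        # Weak spots: perturbed inputs the current model fails to restore.
        adversarial = [
            (adv, clean)
            for clean in clean_sentences
            for adv in perturb(clean)
            if correct(model, adv) != clean
        ]
        if not adversarial:
            break  # no remaining failures under this perturbation scheme
        train_set.extend(adversarial)
        model = train(train_set)
    return model, train_set


seed = [("he have two cats", "he has two cats")]
clean = ["they lost their keys", "she has an idea"]
model, train_set = adversarial_training(seed, clean)
print(len(train_set), correct(model, "they lost thier keys"))
```

Only candidates the current model fails on are added in each round, so the training set grows where the model is weakest rather than uniformly. The lookup model overcorrects any legitimate use of a memorised token such as "there" or "a", which is one reason a real GEC system needs a context-aware model.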


Connected Papers in EMNLP2020

Similar Papers

CAT-Gen: Improving Robustness in NLP Models via Controlled Adversarial Text Generation

Tianlu Wang, Xuezhi Wang, Yao Qin, Ben Packer, Kang Li, Jilin Chen, Alex Beutel, Ed Chi

Pronoun-Targeted Fine-tuning for NMT with Hybrid Losses

Prathyusha Jwalapuram, Shafiq Joty, Youlin Shen

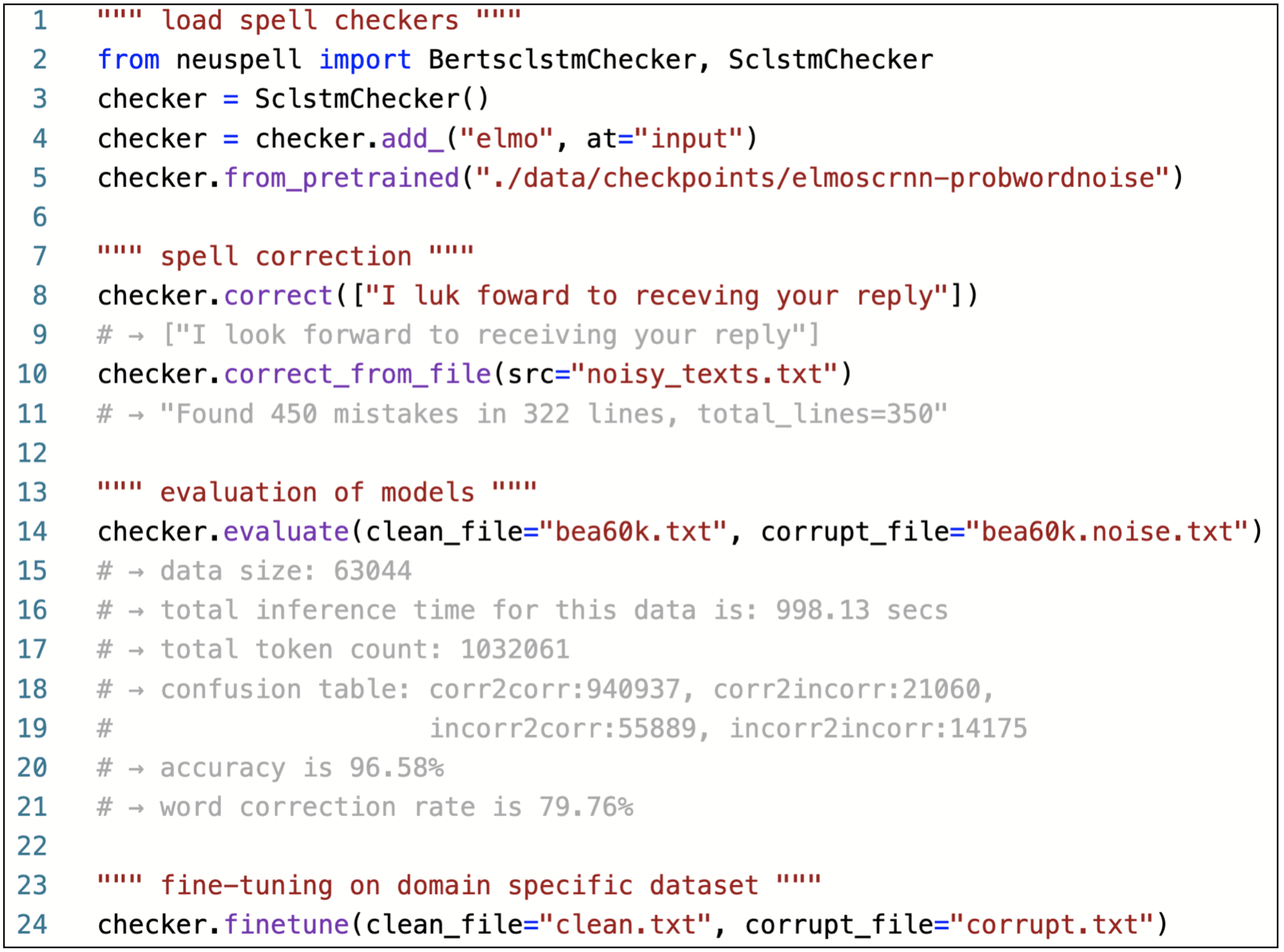

NeuSpell: A Neural Spelling Correction Toolkit

Sai Muralidhar Jayanthi, Danish Pruthi, Graham Neubig

Improving Text Generation with Student-Forcing Optimal Transport

Jianqiao Li, Chunyuan Li, Guoyin Wang, Hao Fu, Yuhchen Lin, Liqun Chen, Yizhe Zhang, Chenyang Tao, Ruiyi Zhang, Wenlin Wang, Dinghan Shen, Qian Yang, Lawrence Carin