Variational Hierarchical Dialog Autoencoder for Dialog State Tracking Data Augmentation

Kang Min Yoo, Hanbit Lee, Franck Dernoncourt, Trung Bui, Walter Chang, Sang-goo Lee

Machine Learning for NLP Long Paper


Abstract:

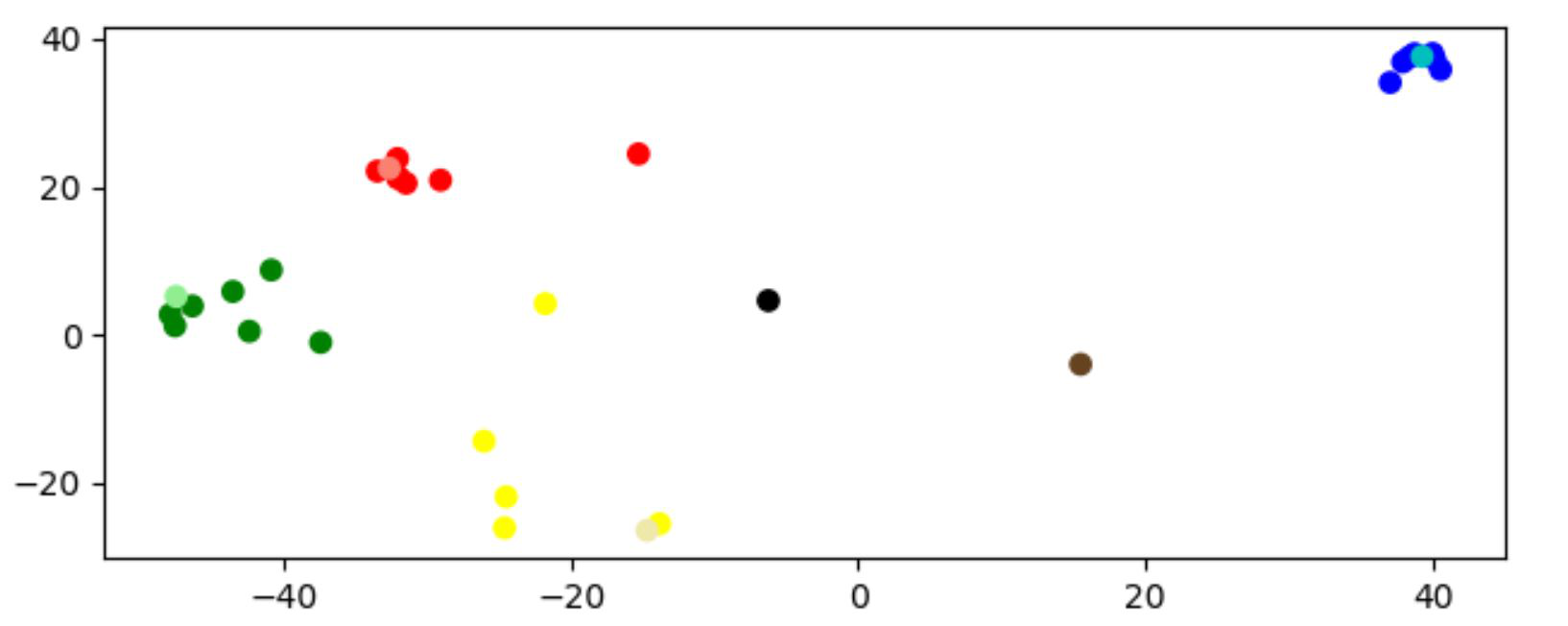

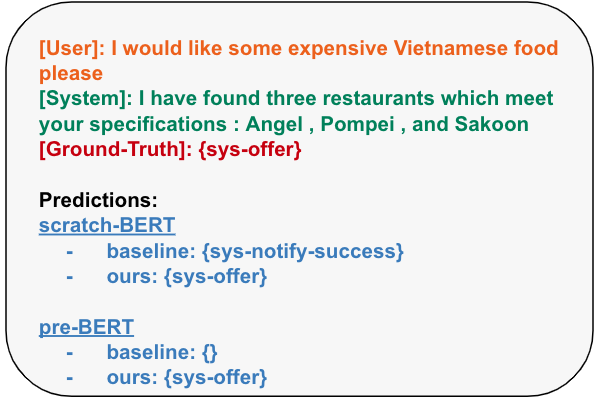

Recent works have shown that generative data augmentation, where synthetic samples generated from deep generative models complement the training dataset, benefits NLP tasks. In this work, we extend this approach to the task of dialog state tracking for goal-oriented dialogs. Due to the inherent hierarchical structure of goal-oriented dialogs over utterances and related annotations, the deep generative model must be capable of capturing the coherence among different hierarchies and types of dialog features. We propose the Variational Hierarchical Dialog Autoencoder (VHDA) for modeling the complete aspects of goal-oriented dialogs, including linguistic features and underlying structured annotations, namely speaker information, dialog acts, and goals. The proposed architecture is designed to model each aspect of goal-oriented dialogs using inter-connected latent variables and learns to generate coherent goal-oriented dialogs from the latent spaces. To overcome training issues that arise from training complex variational models, we propose appropriate training strategies. Experiments on various dialog datasets show that our model improves the downstream dialog trackers’ robustness via generative data augmentation. We also discover additional benefits of our unified approach to modeling goal-oriented dialogs: dialog response generation and user simulation, where our model outperforms previous strong baselines.
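
The general idea of generative data augmentation described in the abstract, where synthetic samples complement the training dataset, can be illustrated with a minimal sketch. This is not the authors' implementation: `augment_with_synthetic`, `sample_synthetic`, and `ratio` are hypothetical names introduced here, and the generator is assumed to be any callable that returns one synthetic example (in the paper's setting, this role would be played by a trained generative model such as VHDA).

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def augment_with_synthetic(
    real: Sequence[T],
    sample_synthetic: Callable[[random.Random], T],
    ratio: float = 1.0,
    seed: int = 0,
) -> List[T]:
    """Return the real examples plus round(ratio * len(real)) synthetic ones, shuffled.

    `sample_synthetic` is a stand-in (assumption) for sampling from a trained
    generative model; it receives a seeded RNG so the result is reproducible.
    """
    rng = random.Random(seed)
    n_synthetic = round(ratio * len(real))
    synthetic = [sample_synthetic(rng) for _ in range(n_synthetic)]
    combined = list(real) + synthetic
    rng.shuffle(combined)  # mix real and synthetic examples before training
    return combined
```

In this sketch, a downstream model (for example, a dialog state tracker) would then be trained on the returned list instead of on the real examples alone; the mixing ratio is a tunable assumption, not a value taken from the paper.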


Connected Papers in EMNLP2020

Similar Papers

Simple Data Augmentation with the Mask Token Improves Domain Adaptation for Dialog Act Tagging

Semih Yavuz, Kazuma Hashimoto, Wenhao Liu, Nitish Shirish Keskar, Richard Socher, Caiming Xiong

VD-BERT: A Unified Vision and Dialog Transformer with BERT

Yue Wang, Shafiq Joty, Michael Lyu, Irwin King, Caiming Xiong, Steven C.H. Hoi

A Probabilistic End-To-End Task-Oriented Dialog Model with Latent Belief States towards Semi-Supervised Learning

Yichi Zhang, Zhijian Ou, Min Hu, Junlan Feng

Generating Dialogue Responses from a Semantic Latent Space

Wei-Jen Ko, Avik Ray, Yilin Shen, Hongxia Jin