Unsupervised Text Style Transfer with Padded Masked Language Models

Eric Malmi, Aliaksei Severyn, Sascha Rothe

Language Generation Short Paper


Abstract:

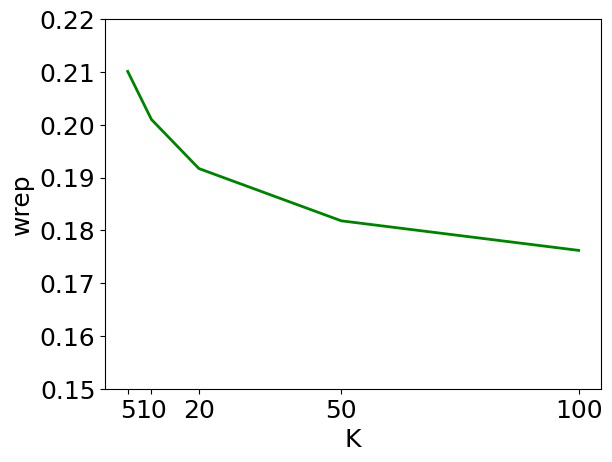

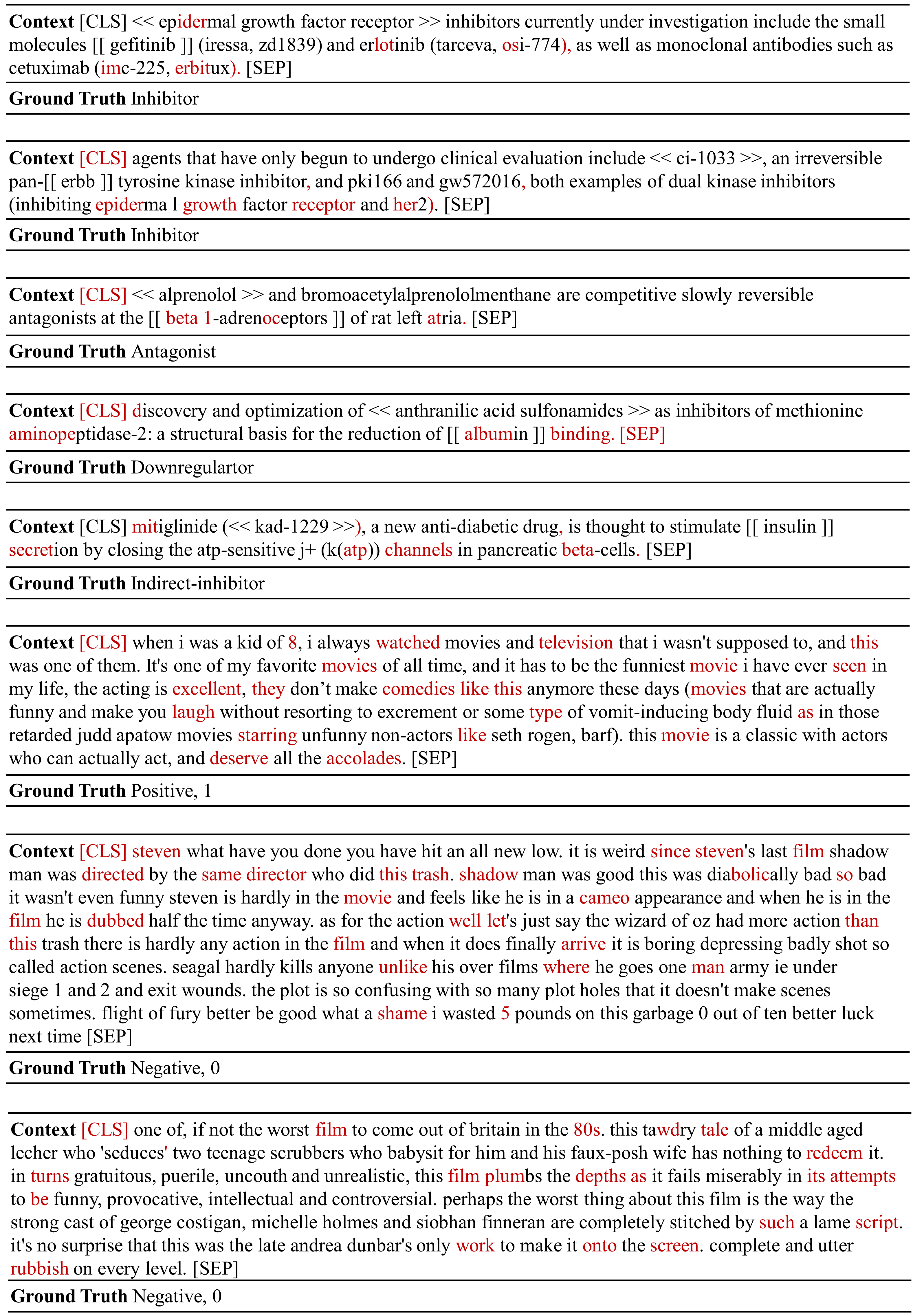

We propose Masker, an unsupervised text-editing method for style transfer. To handle cases where no parallel source-target pairs are available, we train masked language models (MLMs) for both the source and the target domain. We then find the text spans where the two models disagree the most in terms of likelihood. This allows us to identify the source tokens to delete in order to transform the source text to match the style of the target domain. The deleted tokens are replaced with tokens predicted by the target MLM, and by using a padded MLM variant, we avoid having to predetermine the number of inserted tokens. Our experiments on sentence fusion and sentiment transfer demonstrate that Masker performs competitively in a fully unsupervised setting. Moreover, in low-resource settings, pre-training supervised methods on silver training data generated by Masker improves their accuracy by over 10 percentage points.
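The span-selection and padded-infilling idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation or their exact scoring function: it assumes per-token log-likelihoods from the two MLMs are already available as plain lists, it reads "disagree the most" as the largest summed gap between source and target log-likelihood, and the names `find_disagreement_span`, `replace_span`, `predict_slots`, and `toy_predict_slots` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

PAD = "[PAD]"  # assumed padding symbol; a padded MLM may predict it for unused slots


def find_disagreement_span(
    source_logp: Sequence[float],
    target_logp: Sequence[float],
    max_span_len: int = 4,
) -> Tuple[int, int, float]:
    """Return (start, end, score) for the span [start, end) whose tokens the
    source model finds likely but the target model finds unlikely.

    The score is the summed gap source_logp - target_logp over the span,
    which is an assumed simplification of the disagreement measure.
    """
    if len(source_logp) != len(target_logp):
        raise ValueError("both models must score the same tokens")
    best_start, best_end, best_score = 0, 0, float("-inf")
    n = len(source_logp)
    for start in range(n):
        score = 0.0
        for end in range(start + 1, min(n, start + max_span_len) + 1):
            score += source_logp[end - 1] - target_logp[end - 1]
            shorter = (end - start) < (best_end - best_start)
            # Prefer higher scores; on exact ties prefer the shorter span.
            if score > best_score or (score == best_score and shorter):
                best_start, best_end, best_score = start, end, score
    return best_start, best_end, best_score


def replace_span(
    tokens: List[str],
    start: int,
    end: int,
    predict_slots: Callable[[List[str], List[str], int], List[str]],
    n_slots: int = 4,
) -> List[str]:
    """Delete tokens[start:end] and fill a fixed number of slots.

    predict_slots is a hypothetical stand-in for a padded target MLM: it
    always returns n_slots tokens, and any slot predicted as PAD is dropped,
    so the number of inserted tokens does not have to be chosen in advance.
    """
    left, right = tokens[:start], tokens[end:]
    predicted = predict_slots(left, right, n_slots)
    return left + [tok for tok in predicted if tok != PAD] + right


def toy_predict_slots(left: List[str], right: List[str], n_slots: int) -> List[str]:
    """Toy replacement for a target MLM: two real tokens, the rest padding."""
    return (["really", "great"] + [PAD] * n_slots)[:n_slots]


# Made-up scores for a negative-to-positive sentiment example.
tokens = ["the", "food", "was", "terrible", "."]
source_logp = [-1.0, -2.0, -1.0, -1.5, -0.5]  # source (negative) domain MLM
target_logp = [-0.9, -1.8, -0.9, -9.0, -0.4]  # target (positive) domain MLM

start, end, score = find_disagreement_span(source_logp, target_logp)
rewritten = replace_span(tokens, start, end, toy_predict_slots)
```

With these made-up scores the selected span covers only "terrible", and the four padded slots collapse to two inserted tokens. A real system would obtain the scores and slot predictions from trained MLMs rather than from hard-coded lists.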


Connected Papers in EMNLP 2020

Similar Papers

Improving Text Generation with Student-Forcing Optimal Transport

Jianqiao Li, Chunyuan Li, Guoyin Wang, Hao Fu, Yuhchen Lin, Liqun Chen, Yizhe Zhang, Chenyang Tao, Ruiyi Zhang, Wenlin Wang, Dinghan Shen, Qian Yang, Lawrence Carin

Neural Mask Generator: Learning to Generate Adaptive Word Maskings for Language Model Adaptation

Minki Kang, Moonsu Han, Sung Ju Hwang

Substance over Style: Document-Level Targeted Content Transfer

Allison Hegel, Sudha Rao, Asli Celikyilmaz, Bill Dolan