XL-AMR: Enabling Cross-Lingual AMR Parsing with Transfer Learning Techniques

Rexhina Blloshmi, Rocco Tripodi, Roberto Navigli

Semantics: Sentence-level Semantics, Textual Inference and Other Areas (Long Paper)

Abstract:

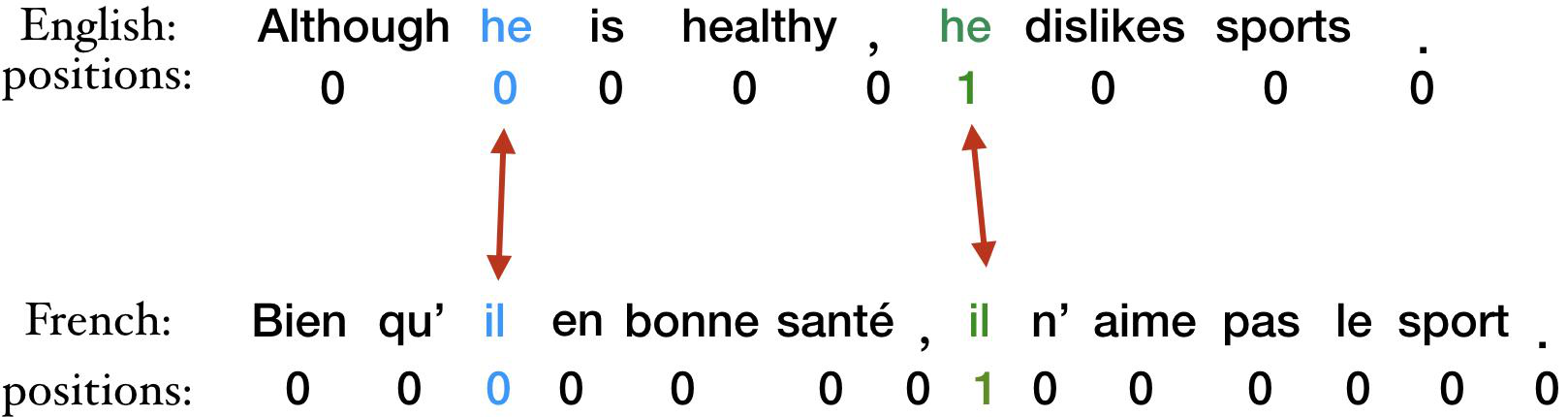

Abstract Meaning Representation (AMR) is a popular formalism of natural language that represents the meaning of a sentence as a semantic graph. It is agnostic about how to derive meanings from strings, and for this reason it lends itself well to the encoding of semantics across languages. However, cross-lingual AMR parsing is a hard task, because training data are scarce in languages other than English and the existing English AMR parsers are not directly suited to a cross-lingual setting. In this work, we tackle these two problems so as to enable cross-lingual AMR parsing: we explore different transfer learning techniques for producing automatic AMR annotations across languages and develop a cross-lingual AMR parser, XL-AMR. The parser can be trained on the produced data and does not rely on AMR aligners or source-copy mechanisms, as is commonly the case in English AMR parsing. The results of XL-AMR significantly surpass those previously reported in Chinese, German, Italian and Spanish. Finally, we provide a qualitative analysis which sheds light on the suitability of AMR across languages. We release XL-AMR at github.com/SapienzaNLP/xl-amr.

Connected Papers in EMNLP2020

Similar Papers

Improving AMR Parsing with Sequence-to-Sequence Pre-training

Dongqin Xu, Junhui Li, Muhua Zhu, Min Zhang, Guodong Zhou

Reusing a Pretrained Language Model on Languages with Limited Corpora for Unsupervised NMT

Alexandra Chronopoulou, Dario Stojanovski, Alexander Fraser