Surprisal Predicts Code-Switching in Chinese-English Bilingual Text

Jesús Calvillo, Le Fang, Jeremy Cole, David Reitter

Linguistic Theories, Cognitive Modeling and Psycholinguistics (Long Paper)


Abstract:

Why do bilinguals switch languages within a sentence? This observational study asks whether word surprisal and word entropy predict code-switching in bilingual written conversation. We describe and model a new dataset of Chinese-English text containing 1476 clean code-switched sentences, each translated back into Chinese. The model includes well-known control variables together with word surprisal and word entropy. We found that word surprisal, but not entropy, is a significant predictor of code-switching above and beyond the other well-known predictors. Sentence length, which has been linked to sentence complexity, was also a significant predictor. We propose high cognitive effort as a reason for code-switching, since it leaves fewer resources for inhibiting the alternative language. These results corroborate previous findings, this time using a computational model of surprisal, a new language pair, and written rather than spoken language.
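The two predictors named above have standard information-theoretic definitions: the surprisal of word w_t is -log2 P(w_t | context), and the entropy at position t is -Σ_w P(w | context) log2 P(w | context), i.e. the uncertainty about the next word before it is seen. The sketch below computes both, assuming a toy add-one-smoothed bigram model as a stand-in; the abstract does not say which language model the authors used, and every function name and the tiny corpus here are hypothetical illustrations, not the paper's pipeline.

```python
import math
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count bigrams over tokenized sentences, using "<s>" as the start symbol.

    Hypothetical toy model: a stand-in for whatever language model the paper used.
    """
    context_counts = Counter()          # how often each word appears as a context
    bigrams = defaultdict(Counter)      # bigrams[prev][word] = count
    vocab = set()
    for sent in sentences:
        prev = "<s>"
        for word in sent:
            bigrams[prev][word] += 1
            context_counts[prev] += 1
            vocab.add(word)
            prev = word
    return bigrams, context_counts, vocab


def next_word_dist(bigrams, context_counts, vocab, prev):
    """Add-one smoothed P(w | prev) over the vocabulary; the values sum to 1."""
    total = context_counts[prev] + len(vocab)
    return {w: (bigrams[prev][w] + 1) / total for w in vocab}


def surprisal(dist, word):
    """Surprisal in bits: -log2 P(word | context)."""
    return -math.log2(dist[word])


def entropy(dist):
    """Entropy in bits of the next-word distribution given the context."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


if __name__ == "__main__":
    # Hypothetical pre-tokenized Chinese sentences.
    corpus = [["我", "喜欢", "猫"], ["我", "喜欢", "狗"], ["你", "喜欢", "猫"]]
    bigrams, context_counts, vocab = train_bigram(corpus)
    dist = next_word_dist(bigrams, context_counts, vocab, "喜欢")
    print(surprisal(dist, "狗"))    # 2.0
    print(round(entropy(dist), 3))  # 2.156
```

Surprisal is a property of the word actually produced, while entropy depends only on the context, which is why the two can behave differently as predictors. In a real study these values would come from a stronger language model and enter a regression alongside the control variables.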


Connected Papers in EMNLP2020

Similar Papers

CSP:Code-Switching Pre-training for Neural Machine Translation

Zhen Yang, Bojie Hu, Ambyera Han, Shen Huang, Qi Ju

Learning Music Helps You Read: Using Transfer to Study Linguistic Structure in Language Models

Isabel Papadimitriou, Dan Jurafsky

Grammatical Error Correction in Low Error Density Domains: A New Benchmark and Analyses

Simon Flachs, Ophélie Lacroix, Helen Yannakoudakis, Marek Rei, Anders Søgaard

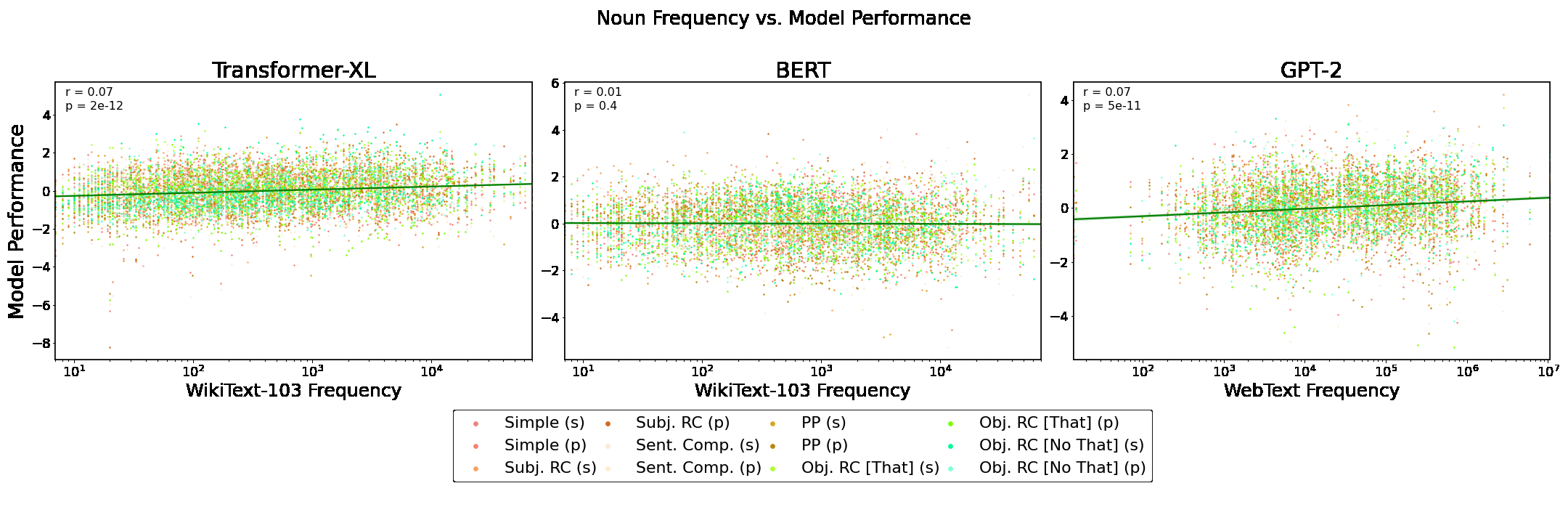

Word Frequency Does Not Predict Grammatical Knowledge in Language Models

Charles Yu, Ryan Sie, Nicolas Tedeschi, Leon Bergen