Exploring the Linear Subspace Hypothesis in Gender Bias Mitigation

Francisco Vargas, Ryan Cotterell

Machine Learning for NLP, Long Paper

Abstract:

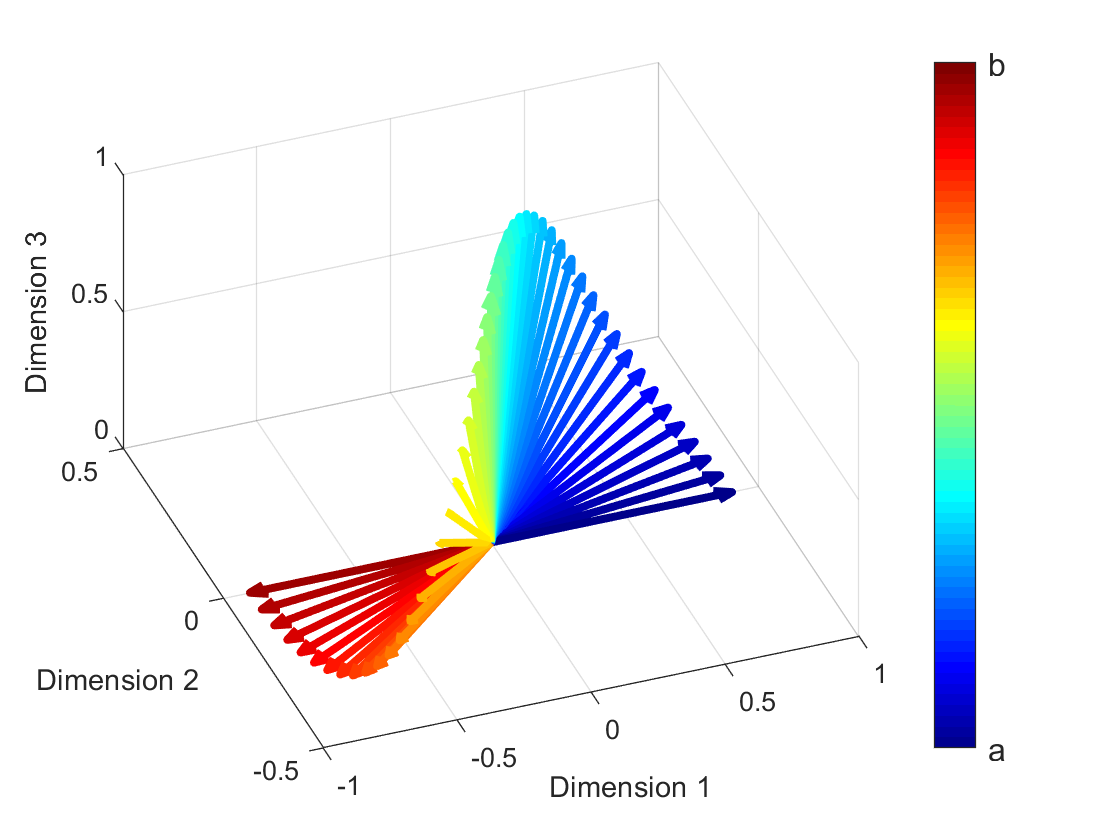

Bolukbasi et al. (2016) present one of the first gender bias mitigation techniques for word embeddings. Their method takes pre-trained word embeddings as input and attempts to isolate a linear subspace that captures most of the gender bias in the embeddings. As judged by an analogical evaluation task, their method virtually eliminates gender bias in the embeddings. However, an implicit and untested assumption of their method is that the bias subspace is actually linear. In this work, we generalize their method to a kernelized, non-linear version. We take inspiration from kernel principal component analysis and derive a non-linear bias isolation technique. We discuss and overcome some of the practical drawbacks of our method for non-linear gender bias mitigation in word embeddings and analyze empirically whether the bias subspace is actually linear. Our analysis shows that gender bias is in fact well captured by a linear subspace, justifying the assumption of Bolukbasi et al. (2016).
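To make the linear baseline described in the abstract concrete, the following is a minimal sketch of isolating a linear bias subspace with PCA and projecting it out of a word vector. It is an illustration under stated assumptions, not the authors' implementation: the function names `bias_subspace` and `neutralize`, the choice of `k=1`, and the synthetic toy vectors are all hypothetical, and the kernelized, non-linear variant proposed in the paper is not shown.

```python
import numpy as np


def bias_subspace(pairs, k=1):
    """Return an orthonormal basis (k x d) for a linear bias subspace.

    pairs: array of shape (n_pairs, 2, d) holding word-vector pairs that
    are assumed to differ mainly along the bias direction (hypothetical input).
    """
    pairs = np.asarray(pairs, dtype=float)
    # Center each pair about its own mean so only within-pair differences remain.
    centered = pairs - pairs.mean(axis=1, keepdims=True)
    stacked = centered.reshape(-1, pairs.shape[-1])
    # The principal directions are the top right singular vectors.
    _, _, vt = np.linalg.svd(stacked, full_matrices=False)
    return vt[:k]


def neutralize(vectors, basis):
    """Remove the component of each vector that lies in the bias subspace."""
    vectors = np.asarray(vectors, dtype=float)
    return vectors - vectors @ basis.T @ basis


# Toy data (assumption): the bias lives entirely along the first coordinate.
rng = np.random.default_rng(0)
d = 5
gender_dir = np.zeros(d)
gender_dir[0] = 1.0
base = rng.normal(size=(4, d))
pairs = np.stack([base + gender_dir, base - gender_dir], axis=1)

basis = bias_subspace(pairs, k=1)
word = rng.normal(size=d)
debiased = neutralize(word[None, :], basis)[0]
```

On this toy input the recovered basis vector coincides with the planted direction up to sign, and the debiased vector has no remaining component along it. A kernelized version would replace the SVD on raw vectors with an eigendecomposition of a centered kernel matrix, which is where the practical difficulties mentioned in the abstract arise.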

Connected Papers in EMNLP2020

Similar Papers

Methods for Numeracy-Preserving Word Embeddings

Dhanasekar Sundararaman, Shijing Si, Vivek Subramanian, Guoyin Wang, Devamanyu Hazarika, Lawrence Carin

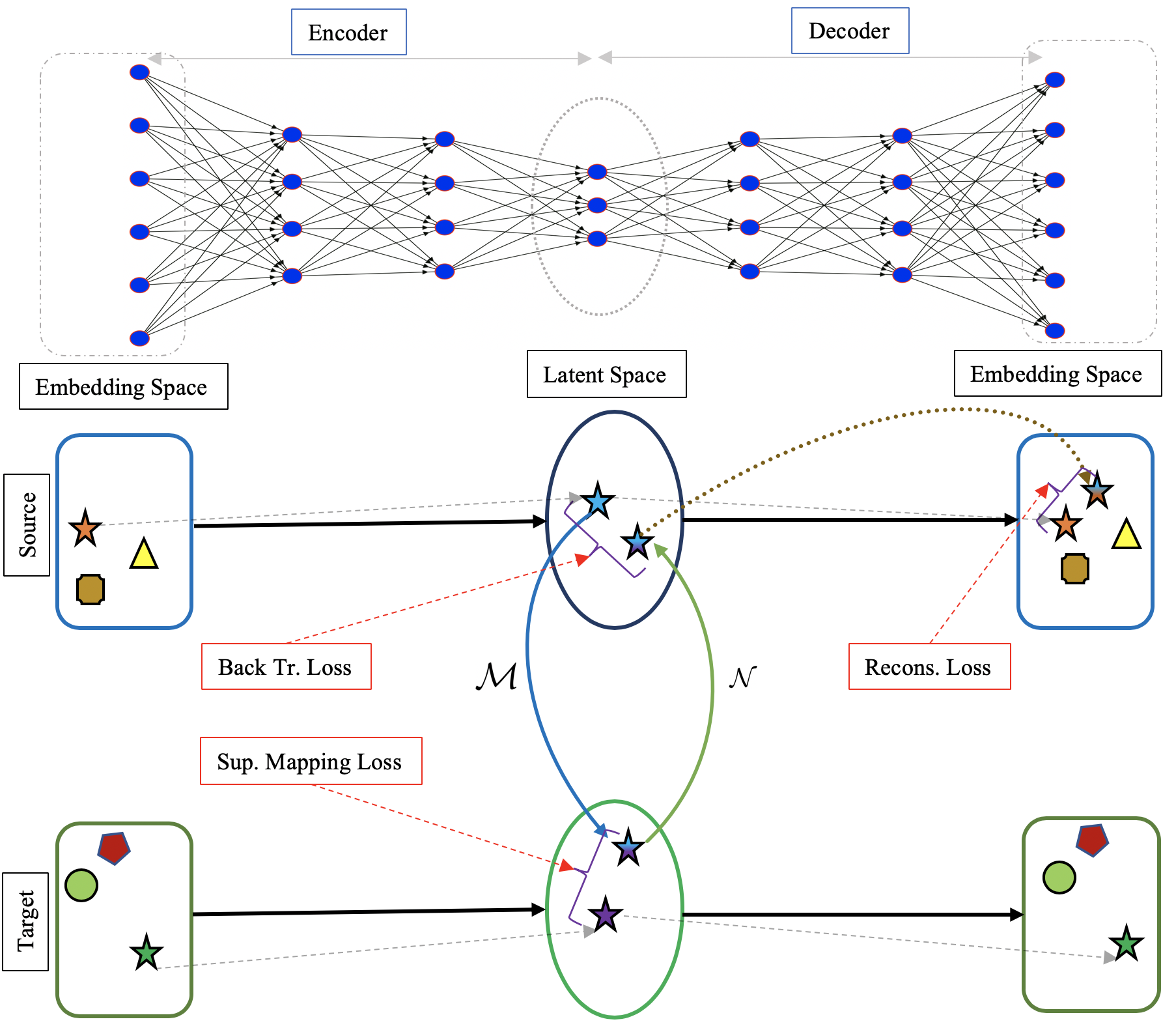

LNMap: Departures from Isomorphic Assumption in Bilingual Lexicon Induction Through Non-Linear Mapping in Latent Space

Tasnim Mohiuddin, M Saiful Bari, Shafiq Joty

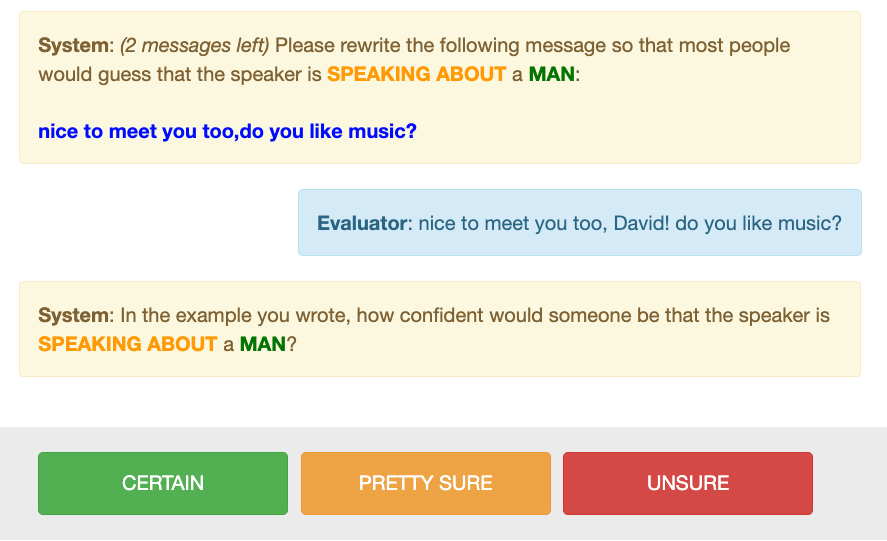

Multi-Dimensional Gender Bias Classification

Emily Dinan, Angela Fan, Ledell Wu, Jason Weston, Douwe Kiela, Adina Williams

What Do Position Embeddings Learn? An Empirical Study of Pre-Trained Language Model Positional Encoding

Yu-An Wang, Yun-Nung Chen