CoRefi: A Crowd Sourcing Suite for Coreference Annotation

Ari Bornstein, Arie Cattan, Ido Dagan

Demo Paper

Abstract:

Coreference annotation is an important, yet expensive and time-consuming, task, which often involves expert annotators trained on complex decision guidelines. To enable cheaper and more efficient annotation, we present CoRefi, a web-based coreference annotation suite oriented toward crowdsourcing. Beyond the core coreference annotation tool, CoRefi provides guided onboarding for the task as well as a novel algorithm for a reviewing phase. CoRefi is open source and embeds directly into any website, including popular crowdsourcing platforms.

CoRefi Demo: aka.ms/corefi
Video Tour: aka.ms/corefivideo
GitHub Repo: https://github.com/aribornstein/corefi

Similar Papers

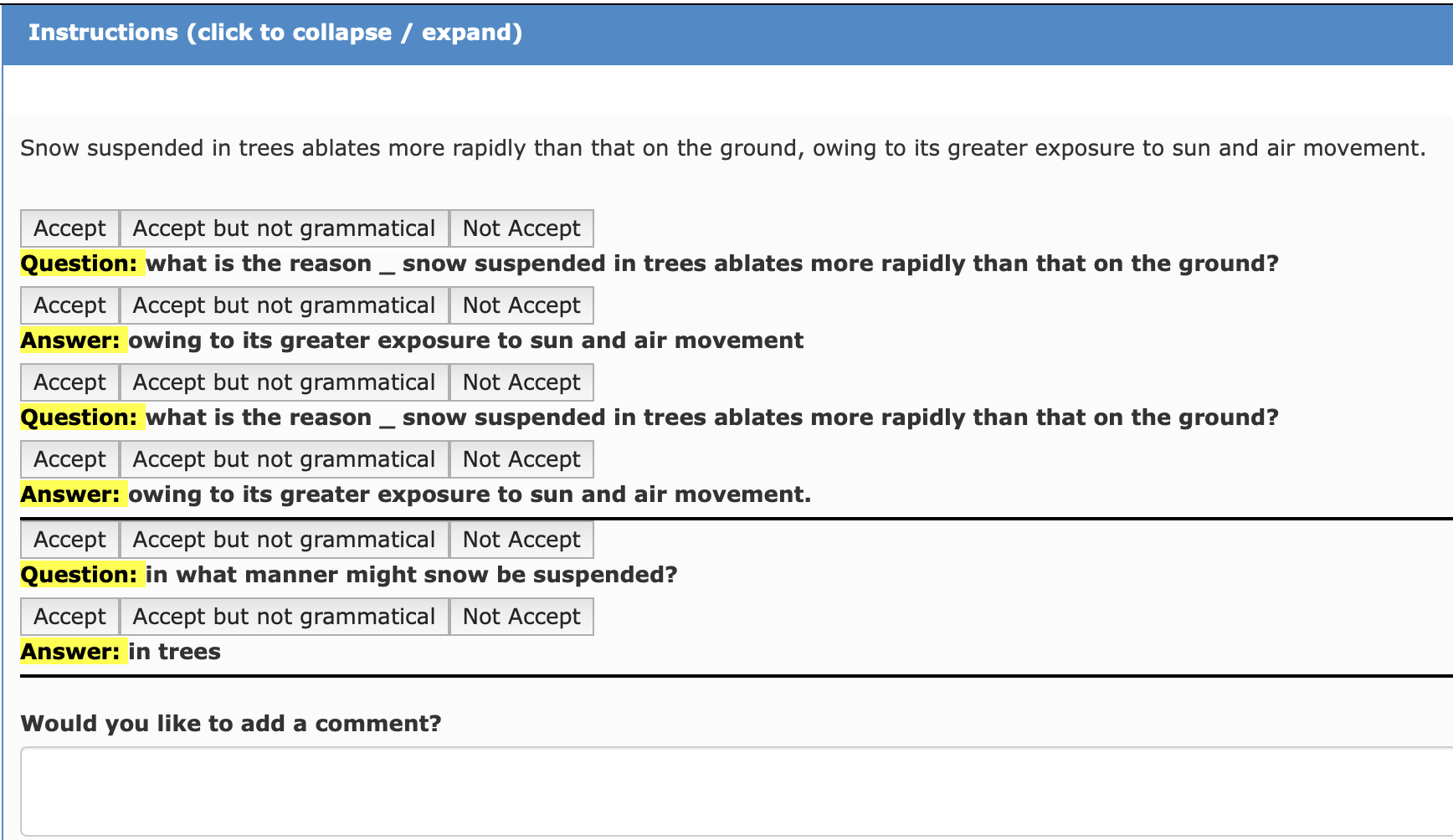

QADiscourse - Discourse Relations as QA Pairs: Representation, Crowdsourcing and Baselines

Valentina Pyatkin, Ayal Klein, Reut Tsarfaty, Ido Dagan,

Coreferential Reasoning Learning for Language Representation

Deming Ye, Yankai Lin, Jiaju Du, Zhenghao Liu, Peng Li, Maosong Sun, Zhiyuan Liu,

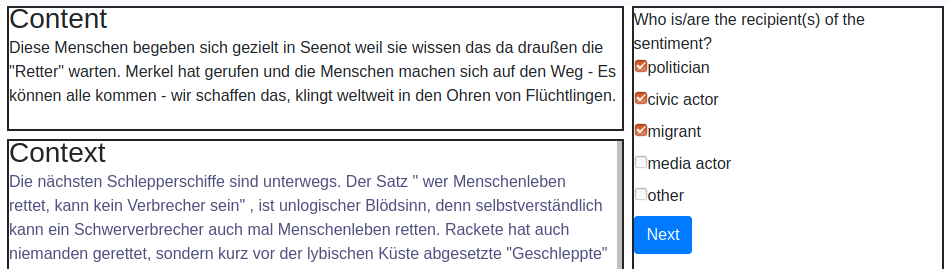

HUMAN: Hierarchical Universal Modular ANnotator

Moritz Wolf, Dana Ruiter, Ashwin Geet D'Sa, Liane Reiners, Jan Alexandersson, Dietrich Klakow,