Acrostic Poem Generation

Rajat Agarwal, Katharina Kann

Language Generation Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

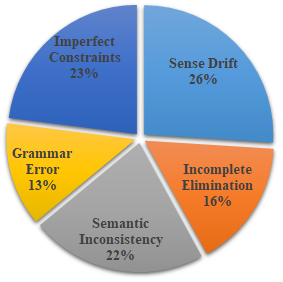

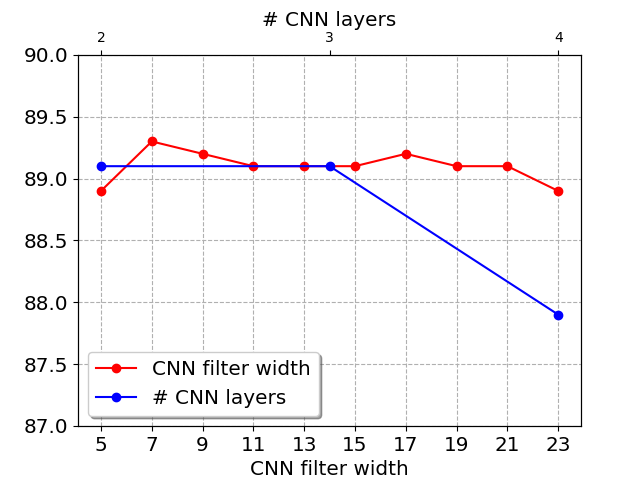

We propose a new task in the area of computational creativity: acrostic poem generation in English. Acrostic poems are poems that contain a hidden message; typically, the first letter of each line spells out a word or short phrase. We define the task as a generation task with multiple constraints: given an input word, 1) the initial letters of each line should spell out the provided word, 2) the poem's semantics should also relate to it, and 3) the poem should conform to a rhyming scheme. We further provide a baseline model for the task, which consists of a conditional neural language model in combination with a neural rhyming model. Since no dedicated datasets for acrostic poem generation exist, we create training data for our task by first training a separate topic prediction model on a small set of topic-annotated poems and then predicting topics for additional poems. Our experiments show that the acrostic poems generated by our baseline are received well by humans and do not lose much quality due to the additional constraints. Last, we confirm that poems generated by our model are indeed closely related to the provided prompts, and that pretraining on Wikipedia can boost performance.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

The role of context in neural pitch accent detection in English

Elizabeth Nielsen, Mark Steedman, Sharon Goldwater,

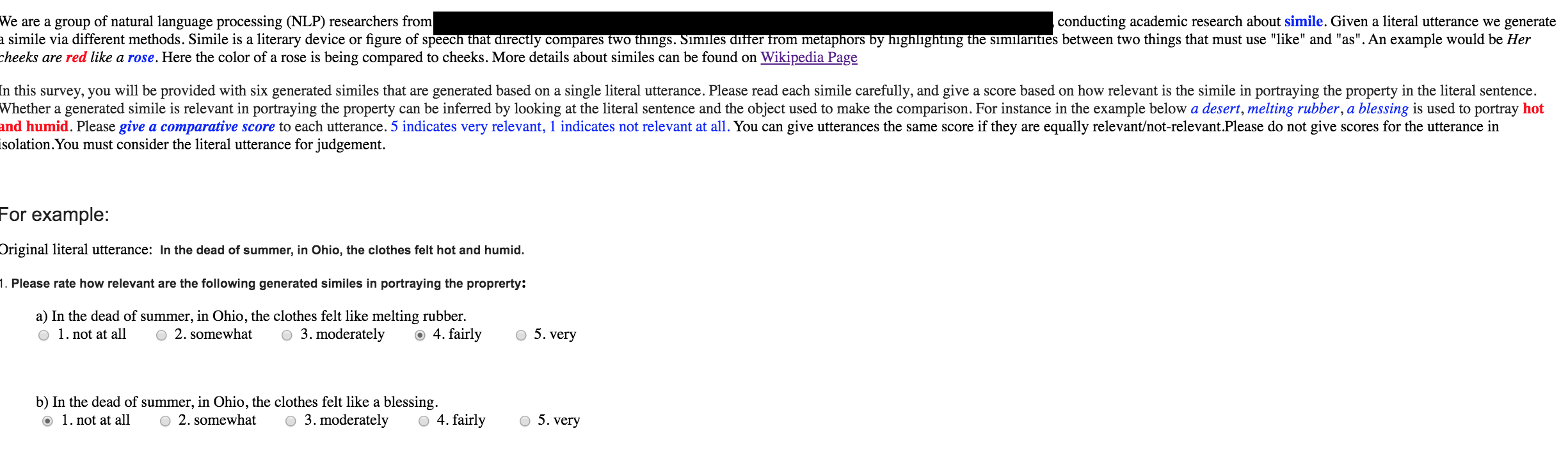

Generating similes effortlessly like a Pro: A Style Transfer Approach for Simile Generation

Tuhin Chakrabarty, Smaranda Muresan, Nanyun Peng,

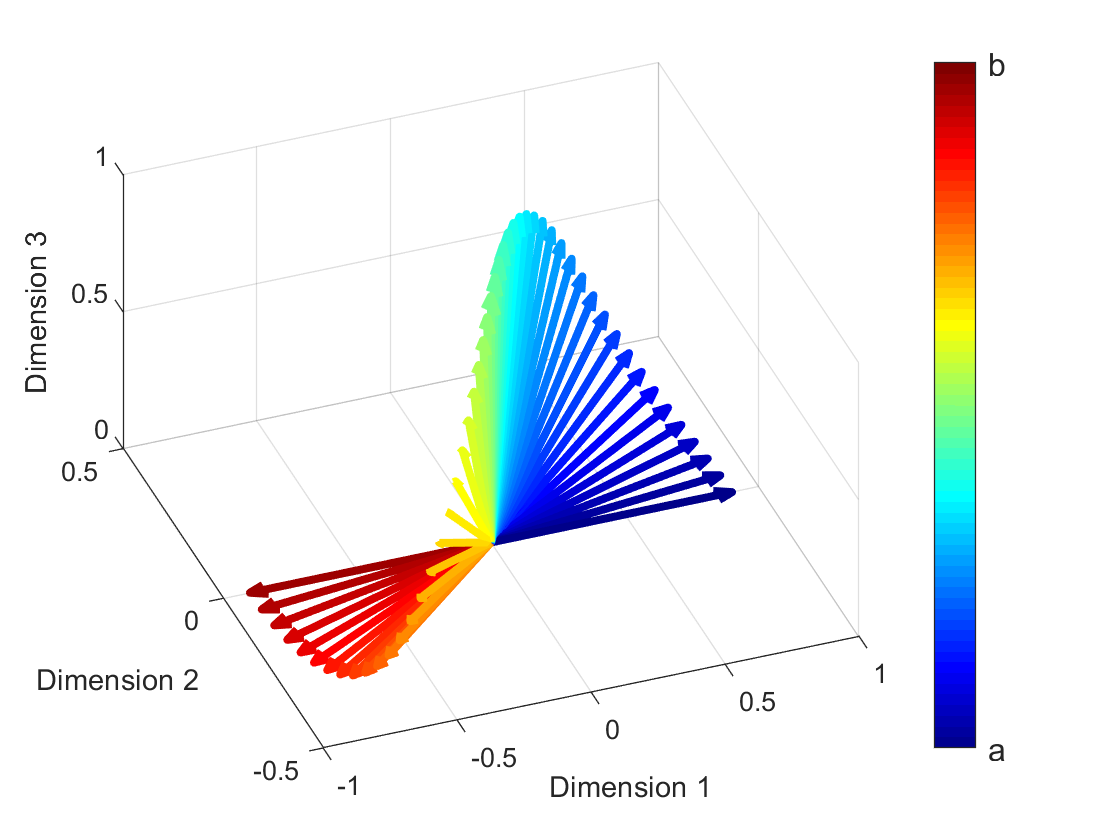

Methods for Numeracy-Preserving Word Embeddings

Dhanasekar Sundararaman, Shijing Si, Vivek Subramanian, Guoyin Wang, Devamanyu Hazarika, Lawrence Carin,